Leveraging Large Language Models for Detecting Citation Quotation Errors in Biomedical Literature

Abstract

M. Janina Sarol,1 Jodi Schneider,1,2 Halil Kilicoglu1

Objective

An estimated 25% of citations in medical journals are inaccurate.1 If not corrected, these citation quotation errors can propagate misinformation and establish unfounded claims.2 Correcting them before publication is vital, yet citation accuracy is overlooked in the peer review process. The prevalence of citation quotation errors in the biomedical literature also remains unclear, because existing studies rely on manual evaluation on a limited set of articles.

Design

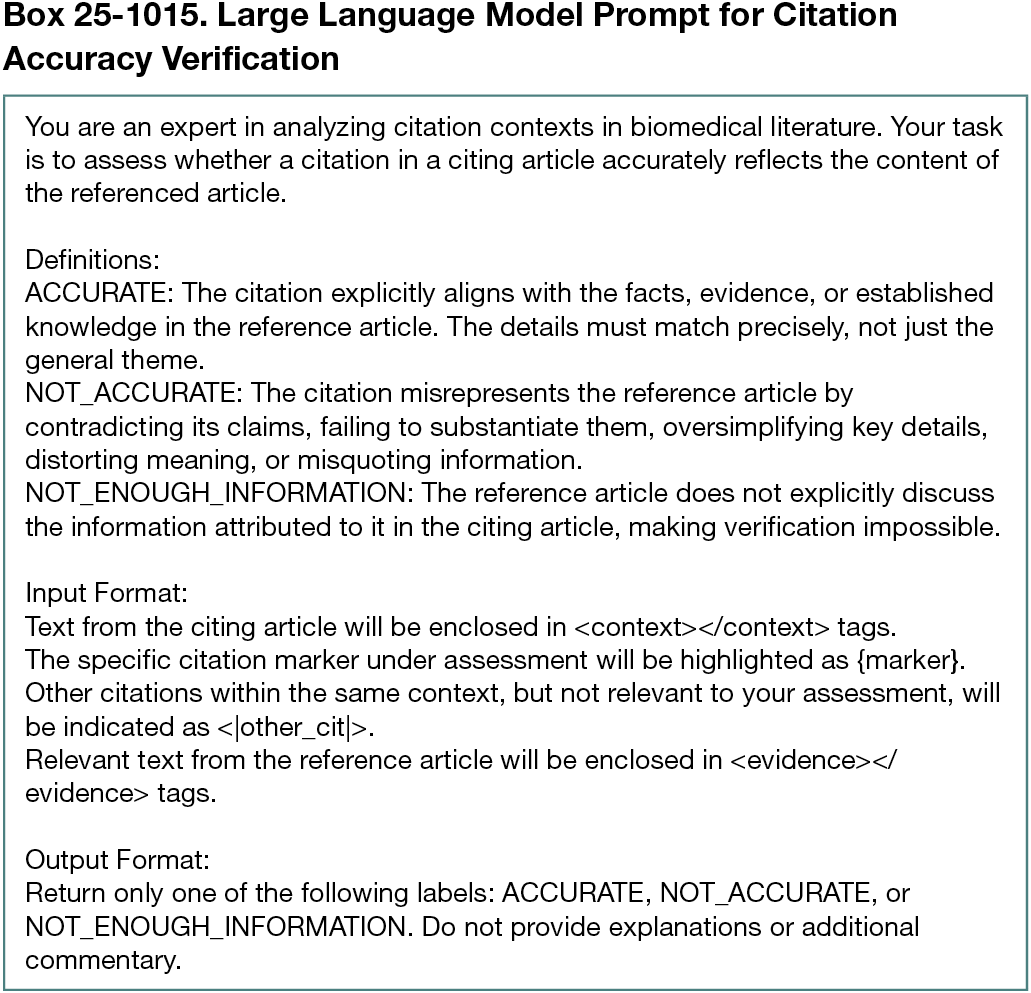

We explored the use of large language models, specifically Gemini 1.5 Pro, GPT-4o (gpt-4o-2024-08-06), and LLaMA-3.1 (8B and 70B), to automatically identify citation quotation errors. We compared 2 approaches. In the first, we supplied the prompt with the citation in question, the paragraph containing the citation, and the full reference article. The second approach consists of a 3-step procedure and mimicked how a human reviewer might perform the task manually by first identifying the citation context, then locating relevant sentences in the reference article, and finally assessing citation accuracy. Specifically, the procedure consists of (1) extracting the citation sentence, (2) retrieving the 50 most relevant sentences using the MedCPT retriever model and reranking them with a fine-tuned MonoT5 model to select the top 5 sentences, and (3) verifying the citation accuracy with the large language model (Box 25-1015). Both approaches were evaluated from November 2024 to January 2025 using a citation quotation error dataset,3 the first publicly available corpus of its kind (Cohen κ for interrater agreement: 0.96 for citation context and 0.31 for accuracy detection). The better-performing approach was applied to assess 2898 citations from 2000 articles to the 100 most cited PubMed Central Open Access (PMC-OA) articles (24 different journals).

Results

Gemini 1.5 Pro yielded best performance, obtaining an accuracy of 69% with both approaches. It outperformed GPT-4o slightly, while LLaMA-3 failed to generate meaningful results with the first approach and performed poorly with the second. Because the first approach only identified 25% (55 of 220) of the erroneous citations and the 3-step procedure identified 51% (113 of 220), the second approach was applied to the subset of PMC-OA articles. In the PMC-OA subset, 34% (981 of 2898) of the citation instances were assessed as erroneous. A total of 37% (742 of 2000) of citing articles were found to contain citation quotation errors; 98% (98 of 100) of reference articles were cited incorrectly at least once.

Conclusions

Our preliminary results confirm the high prevalence of citation quotation errors in the medical literature. We will present a more comprehensive analysis of citation quotation errors in the PMC-OA subset at the conference. Automated verification of citations could help journals and peer reviewers identify questionable citation practices and reduce propagation of misinformation, improving the trustworthiness of scientific evidence.

References

1. Jergas H, Baethge C. Quotation accuracy in medical journal articles: a systematic review and meta-analysis. PeerJ. 2015;3:e1364. doi:10.7717/peerj.1364

2. Greenberg SA. How citation distortions create unfounded authority: analysis of a citation network. BMJ. 2009;339:b2680. doi:10.1136/bmj.b2680

3. Sarol MJ, Ming S, Radhakrishna S, Schneider J, Kilicoglu H. Assessing citation integrity in biomedical publications: corpus annotation and NLP models. Bioinformatics. 2024;40(7):btae420. doi:10.1093/bioinformatics/btae420

1University of Illinois at Urbana-Champaign, IL, US, janinasarol@gmail.com; 2Harvard Radcliffe Institute for Advanced Study, Cambridge, MA, US.

Conflict of Interest

Disclosures Jodi Schneider declares nonfinancial associations with Crossref; Committee on Publication Ethics; International Association of Scientific, Technical and Medical Publishers; the European Association of Science Editors; the International Society of Managing and Technical Editors; the Institute of Electrical and Electronics Engineers; the National Information Standards Organization; and the Center for Scientific Integrity (parent organization of Retraction Watch) and has received data-in-kind from Retraction Watch and Scite and usability testing compensation from the Institute of Electrical and Electronics Engineers. The National Information Standards Organization is a subawardee on her Alfred P. Sloan Foundation grant (G-2022-19409). No other disclosures were reported.

Funding/Support

This study was supported by the Office of Research Integrity (ORI) of the US Department of Health and Human Services (HHS) (ORIIR220073). The contents are those of the authors and do not necessarily represent the official views of, nor an endorsement by, the ORI, Office of the Assistant Secretary of Health, HHS, or the US government. Jodi Schneider was supported in part as the 2024-2025 Perrin Moorhead Grayson and Bruns Grayson Fellow, Harvard Radcliffe Institute for Advanced Study and by US National Science Foundation’s CAREER grant (2046454).

Role of the Funder/Sponsor

The funders had no role in considering the study design or in the collection, analysis, interpretation of data, writing of the report, or decision to submit the article for publication.

Acknowledgment

We thank Shruthan Radhakrishna for his contributions to fine-tuning the MonoT5 model.

Additional Information

Halil Kilicoglu is a co–corresponding author (halil@illinois.edu).