Factors Associated With the Reproducibility of Health Sciences Research: A Systematic Review and Evidence Gap Map

Abstract

Stephana Julia Moss,1 Juliane Kennett,2 Jeanna Parsons Leigh,3 Niklas Bobrovitz,4 Henry T. Stelfox5

Objective

To map the evidence for factors (eg, research practices) associated with the reproducibility of methods and results reported in health sciences research.

Design

Five bibliographic databases were searched from January 2000 to May 2023, followed by supplemental searches of high-impact journals and relevant records. We included health sciences records of observational, interventional, or knowledge synthesis studies reporting data on factors related to research reproducibility. Factors were operationalized as modifiable or nonmodifiable aspects of study conduct relating to individual-, study-, or institutional-level practices, methods, and processes that could affect the reproducibility of research methods or results.1 Reproducibility was operationalized as 2 mutually exclusive categories: (1) methodological reproducibility (ie, the ability to exactly repeat the methods, including study procedures and data analysis) and (2) results reproducibility (ie, obtaining corroborating results using the same or similar methods).2 We included studies that used surrogate measures for reproducibility (eg, type I or II error rates) if they (1) explicitly stated their aim to investigate the reproducibility of research and (2) provided a rationale for their choice of surrogate measure.3 Data were coded using inductive qualitative content analysis, and empirical evidence was synthesized with evidence and gap maps. Study risk of bias was assessed using the Quality in Prognostic Studies risk-of-bias tool. Statistical tests of the association between factors and reproducibility outcomes were summarized as reported in the included articles.

Results

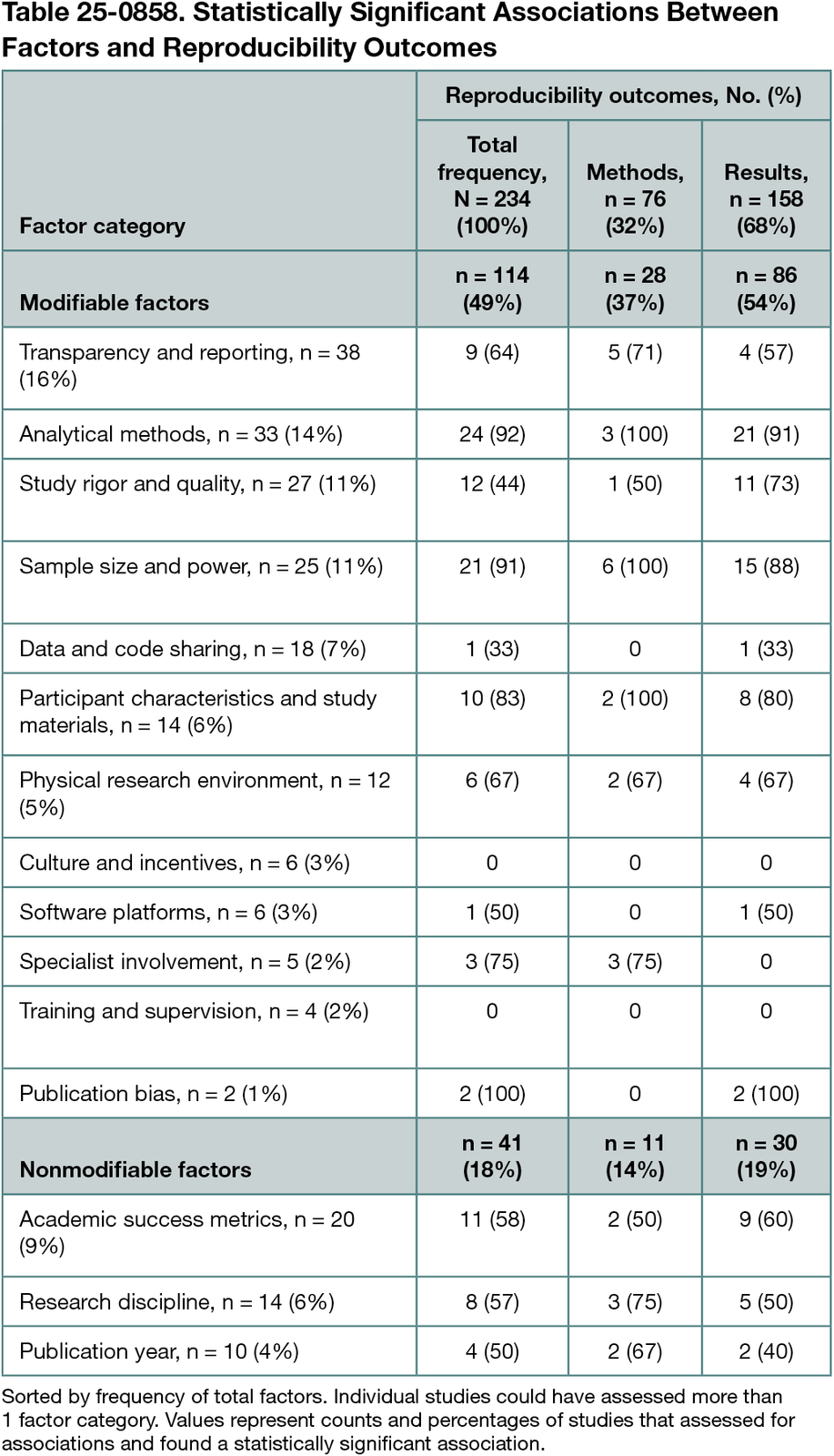

Our review included 148 studies, primarily biomedical and preclinical (n = 62) and clinical (n = 71). Factors were classified into 12 modifiable (eg, sample size and power) and 3 nonmodifiable (eg, publication year) categories. Of 234 reported evaluations of factors, 76 (32%) assessed methodological reproducibility and 158 (68%) assessed results reproducibility. The most frequently reported factor was transparency and reporting (38 of 234 assessments [16%]). A total of 155 factors (66%) were evaluated for statistical associations with reproducibility outcomes (Table 25-0858). Tests of statistical association were most frequently conducted for analytical methods (24 of 26 reporting significance [92%]), sample size and power (21 of 23 reporting significance [91%]), and participant characteristics and study materials (10 of 12 reporting significance [83%]). Risk-of-bias assessments found low risk of bias for study participation, factor measurement, and statistical analysis, and high risk of bias for confounding.
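As a quick arithmetic check, the proportions quoted above can be recomputed from the reported counts. The sketch below is illustrative only: the labels and the `percent` helper are assumptions introduced here, not part of the review's analysis, and rounding to the nearest integer is assumed.

```python
# Counts copied from the Results paragraph as (numerator, denominator).
# The dictionary labels are illustrative shorthand, not the review's own terms.
counts = {
    "methodological reproducibility": (76, 234),
    "results reproducibility": (158, 234),
    "transparency and reporting": (38, 234),
    "tested for statistical association": (155, 234),
    "analytical methods, significant": (24, 26),
    "sample size and power, significant": (21, 23),
    "participant characteristics and study materials, significant": (10, 12),
}


def percent(numerator, denominator):
    """Return the proportion as a percentage rounded to the nearest integer."""
    return round(100 * numerator / denominator)


# Recompute each percentage from its counts.
percentages = {label: percent(n, d) for label, (n, d) in counts.items()}

for label, value in percentages.items():
    n, d = counts[label]
    print(f"{label}: {n} of {d} ({value}%)")
```

Under these assumptions, the recomputed values match the rounded percentages reported in the text, and the two reproducibility categories (76 and 158) sum to the 234 evaluations.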

Conclusions

Our review identified a large body of literature consisting primarily of observational studies of factors associated with the reproducibility of health sciences research. The data suggest that reproducibility may be improved by implementing more stringent statistical testing procedures and thresholds, sample size and power calculations, and improved transparency and completeness of reporting. Experimental studies are needed to test interventions to improve reproducibility. Factors identified in this study with consistent observational support should be prioritized for experimentation. Factors that affect reproducibility in health and social care services and population and public health need to be identified given the paucity of data in these areas.

References

1. Goodman SN, Fanelli D, Ioannidis JP. What does research reproducibility mean? Sci Transl Med. 2016;8(341):341ps12. doi:10.1126/scitranslmed.aaf5027

2. Niven DJ, McCormick TJ, Straus SE, et al. Reproducibility of clinical research in critical care: a scoping review. BMC Med. 2018;16:1-12. doi:10.1186/s12916-018-1018-6

3. Clemens MA. The meaning of failed replications: a review and proposal. J Econ Surveys. 2017;31(1):326-342. doi:10.1111/joes.12139

1Faculty of Medicine, Dalhousie University, Halifax, Nova Scotia, Canada, sj.moss@dal.ca; 2Department of Critical Care Medicine, University of Calgary, Calgary, Alberta, Canada; 3Faculty of Health, Dalhousie University, Halifax, Nova Scotia, Canada; 4Department of Emergency Medicine, University of Calgary, Calgary, Alberta, Canada; 5Faculty of Medicine & Dentistry, University of Alberta, Edmonton, Alberta, Canada.

Conflict of Interest Disclosures

None reported.

Funding/Support

This work was funded by the Canadian Institutes of Health Research.

Role of the Funder/Sponsor

The funding body had no role in the design and conduct of the study; collection, management, analysis, and interpretation of the data; preparation, review, or approval of the abstract; or decision to submit the abstract for presentation.