Factor Analysis of Academic Reviewers’ Ratings of Journal Articles on a 38-Item Scientific Quality Instrument

Abstract

Guy Madison,1 Erik J. Olsson2

Objective

Scientific quality, the property that makes research produce knowledge, is continuously assessed by the scientific community in multiple ways (eg, reviews, seminars, committees that control funding, decisions about publishing research results, and selection of individuals for awards, employment, and other positions). The quality of these assessments in turn affects the quality of the research produced.1,2 How valid and reliable are these assessments, and what weight do academics attach to different features of research when judging its scientific quality?

Design

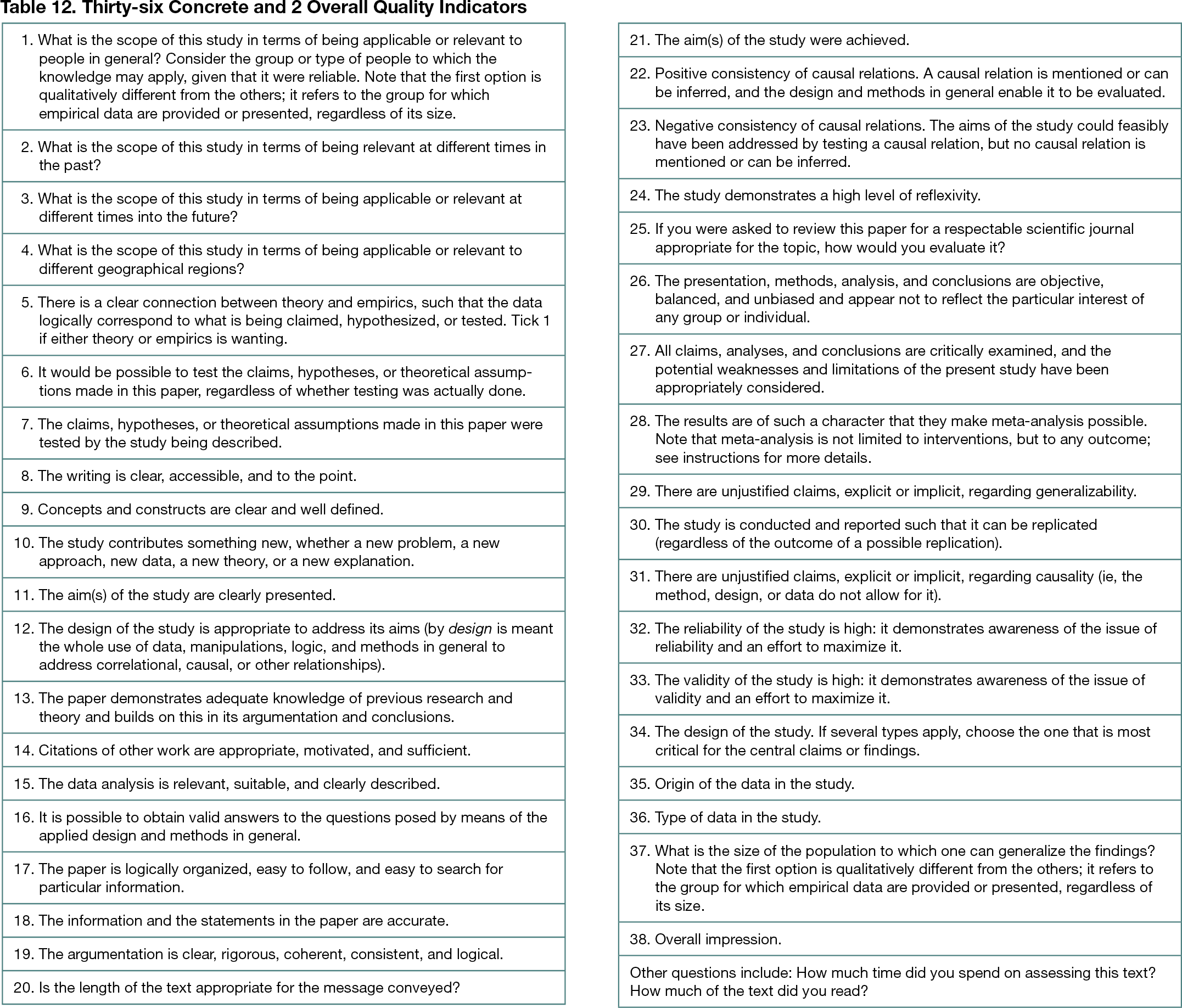

A rating instrument was devised with 36 concrete and 2 overall quality indicators (Table 12), partly based on the evaluation criteria in scientific journals’ instructions for reviewers. Fifteen academics with a PhD in the social sciences were recruited through snowball sampling. They rated 60 randomly selected journal articles from the Swedish Gender Studies List, which contains more than 12,000 publications about sex and gender published between 2000 and 2010 across many disciplines (eg, gender studies [GS], sociology, history, medicine, and psychology). Factor analysis was applied to identify the main dimensions that reviewers consider, and these dimensions were compared across research with different levels of GS perspective.3 A high level of GS perspective was assigned to articles authored by those who self-identified as gender scholars or explicitly endorsed a gender perspective, a medium level to articles that reflected the values and beliefs associated with a gender perspective, and a low level to the remaining articles. Statistical tests included Cronbach α for interrater reliability, R² for the variance explained by each factor, and Cohen d and P values for differences between GS groups.
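Cronbach α is normally computed over the items of a scale; when it is used for interrater reliability, the raters play the role of items. The minimal Python sketch below illustrates this use under stated assumptions: a complete design in which every rater scored every article on one indicator (the abstract does not say whether each of the 15 raters scored all 60 articles), and a hypothetical helper named `cronbach_alpha` that is not taken from the authors' analysis. It computes α = k/(k − 1) × (1 − sum of per-rater variances / variance of per-article totals), where k is the number of raters.

```python
from statistics import variance


def cronbach_alpha(ratings: list[list[float]]) -> float:
    """Cronbach alpha with raters treated as the 'items' of the scale.

    Hypothetical helper. ratings[r][a] is the score rater r gave article a
    on one indicator. Assumes a complete design: every rater scored every article.
    """
    k = len(ratings)
    if k < 2:
        raise ValueError("need at least two raters")
    n = len(ratings[0])
    if n < 2 or any(len(row) != n for row in ratings):
        raise ValueError("every rater must score the same two or more articles")
    # Sum of each rater's score variance across articles
    sum_rater_var = sum(variance(row) for row in ratings)
    # Variance of the per-article total, summed over raters
    totals = [sum(row[a] for row in ratings) for a in range(n)]
    total_var = variance(totals)
    if total_var == 0:
        raise ValueError("total scores do not vary; alpha is undefined")
    return k / (k - 1) * (1 - sum_rater_var / total_var)


print(cronbach_alpha([[1, 2, 3, 4], [2, 1, 4, 3]]))  # approximately 0.75
```

Two raters who order 4 articles identically give α = 1; the partially agreeing pair in the example gives α ≈ 0.75. Because both variances use the same denominator, the result does not depend on whether sample or population variance is used.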

Results

Interrater reliability was high (Cronbach α = 0.75-0.87). Factor analysis suggested 3 dimensions: logic and clarity (eg, “there is a clear connection between theory and empirics, such that the data logically correspond to what is being claimed, hypothesized, or tested”; R² = 0.44); causality (eg, “a causal relation is mentioned or can be inferred, and the design and methods in general enable this to be evaluated”; R² = 0.08); and scope (eg, more data, units of analysis, generalizability, and better sampling; R² = 0.10). All 3 dimensions discriminated among 3 samples of 20 randomly selected articles each, drawn from the populations with (1) a high level of GS perspective, (2) a medium level, and (3) no GS perspective (logic and clarity, d = 0.62, P < .001; causality, d = 0.35, P < .005; and scope, d = 0.44, P < .005).
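The abstract reports a single Cohen d per dimension but does not state which pair of GS groups, or which contrast, each value refers to. As a hedged illustration only, the standard two-group Cohen d with a pooled standard deviation can be sketched in Python as follows; the helper name `cohens_d` and the idea of applying it to per-article dimension scores from two of the groups are assumptions, not the authors' procedure.

```python
from math import sqrt
from statistics import mean, variance


def cohens_d(group_a: list[float], group_b: list[float]) -> float:
    """Standardized mean difference between two independent groups.

    Hypothetical helper: group_a and group_b might hold per-article scores
    on one dimension for two GS groups (an assumption; the abstract does
    not say which contrast each reported d refers to).
    """
    n_a, n_b = len(group_a), len(group_b)
    if n_a < 2 or n_b < 2:
        raise ValueError("each group needs at least two observations")
    # Pooled variance: sample variances weighted by their degrees of freedom
    pooled_var = ((n_a - 1) * variance(group_a)
                  + (n_b - 1) * variance(group_b)) / (n_a + n_b - 2)
    if pooled_var == 0:
        raise ValueError("pooled standard deviation is zero")
    return (mean(group_a) - mean(group_b)) / sqrt(pooled_var)


print(cohens_d([2, 4, 6], [1, 3, 5]))  # 0.5
```

In the example the group means differ by 1 and the pooled standard deviation is 2, so d = 0.5, which is in the same range as the effects reported above.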

Conclusions

The scientific quality instrument seems promising in terms of high reliability and convergent validity and can be applied to research of any design (eg, experimental, cross-sectional, correlational, or descriptive) to assess overall and specific dimensions of scientific quality. Additional testing of the instrument is needed to compare different bodies of research, funding applications, project plans, and papers before and after peer review; to assess group differences across topics, institutions, countries, and time; and to compare mainstream vs controversial fields of research to further assess the role of research quality.

References

1. Finkel EJ, Eastwick PW, Reis HT. Replicability and other features of a high-quality science: toward a balanced and empirical approach. J Pers Soc Psychol. 2017;113(2):244-253. doi:10.1037/pspi0000075

2. Tuffaha HW, El Saifi N, Chambers SK, Scuffham PA. Directing research funds to the right research projects: a review of criteria used by research organisations in Australia in prioritising health research projects for funding. BMJ Open. 2018;8(12):e026207. doi:10.1136/bmjopen-2018-026207

3. Söderlund T, Madison G. Objectivity and realms of explanation in academic journal articles concerning sex/gender: a comparison of gender studies and the other social sciences. Scientometrics. 2017;112(2):1093-1109. doi:10.1007/s11192-017-2407-x

1Department of Psychology, Umeå University, Umeå, Sweden, guy.madison@umu.se; 2Department of Philosophy, Lund University, Lund, Sweden

Conflict of Interest Disclosures

None reported.