Abstract

Effect of Positive vs Negative Reviewer-Led Discussion on Herding and Acceptance Rates of Papers Submitted to a Machine Learning Conference: A Randomized Controlled Trial

Ivan Stelmakh,1 Charvi Rastogi,1 Nihar B. Shah,1 Aarti Singh,1 Hal Daumé III2,3

Objective

Many publication venues and grant panels have a discussion stage in their peer review processes. Past research shows that human decisions in contexts involving social interactions (eg, financial markets) are susceptible to “herding,” ie, everyone doing what others are doing.1 In this work, the possible presence of herding in peer review was investigated by testing whether reviewers are biased by the first argument presented in the discussion.

Design

The experiment intervened in the peer review process of the 2020 International Conference on Machine Learning (ICML), a large, top-tier machine learning conference, and executed a randomized controlled trial involving 1544 submissions and 2797 reviewers. In ICML, discussion happens after reviewers submit their initial reviews. Based on these reviews, a set of borderline papers on which reviewers disagreed was chosen. These papers were split uniformly at random into 2 groups: positive and negative. An intervention was then implemented at the level of program chairs by sending the following requests. (1) Positive group: First, the most positive reviewer was asked to start the discussion. Later, the most negative reviewer was asked to contribute to the discussion. (2) Negative group: First, the most negative reviewer was asked to start the discussion. Later, the most positive reviewer was asked to contribute to the discussion. The goal of the intervention was to induce a difference across conditions in the order in which reviewers joined the discussion. If herding was present, papers from the positive group were expected to have a higher acceptance rate than papers from the negative group. A permutation test at significance level α = .05 was used to compare final outcomes across groups, with the difference in acceptance rates as the test statistic. Importantly, it was empirically verified that the intervention did not introduce confounding factors: all discussion parameters other than the order remained the same across groups.
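The permutation test on the difference in acceptance rates can be illustrated with a minimal Python sketch. This is not the authors' analysis code. The function names, the two-sided form of the test, the number of permutations, and the add-one p-value correction are all assumptions made for illustration, and the data at the bottom are synthetic, not ICML outcomes.

```python
import random


def acceptance_rate_diff(outcomes, labels):
    """Acceptance rate of the positive group minus that of the negative group.

    outcomes: list of 0/1 final decisions (1 = accepted).
    labels:   list of "positive"/"negative" group assignments.
    """
    pos = [o for o, g in zip(outcomes, labels) if g == "positive"]
    neg = [o for o, g in zip(outcomes, labels) if g == "negative"]
    return sum(pos) / len(pos) - sum(neg) / len(neg)


def permutation_test(outcomes, labels, n_permutations=10_000, seed=0):
    """Two-sided permutation test (assumed form) on the acceptance-rate gap.

    Group labels are reshuffled while outcomes stay fixed, which mimics
    re-running the random assignment under the null hypothesis that the
    discussion order has no effect on the decision.
    """
    rng = random.Random(seed)
    observed = acceptance_rate_diff(outcomes, labels)
    shuffled = list(labels)
    extreme = 0
    for _ in range(n_permutations):
        rng.shuffle(shuffled)
        permuted = acceptance_rate_diff(outcomes, shuffled)
        # Small tolerance guards against floating-point ties.
        if abs(permuted) >= abs(observed) - 1e-12:
            extreme += 1
    # Add-one correction keeps the p-value strictly positive.
    p_value = (extreme + 1) / (n_permutations + 1)
    return observed, p_value


# Synthetic illustration only: both groups share the same acceptance
# probability, so no herding effect is built into the data.
demo_rng = random.Random(1)
demo_labels = ["positive"] * 100 + ["negative"] * 100
demo_outcomes = [1 if demo_rng.random() < 0.3 else 0 for _ in demo_labels]
diff, p = permutation_test(demo_outcomes, demo_labels, n_permutations=2_000)
print(f"difference in acceptance rates: {diff:.3f}, p-value: {p:.3f}")
```

Under this sketch, the null hypothesis of no herding would be rejected when the returned p-value falls below α = .05.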

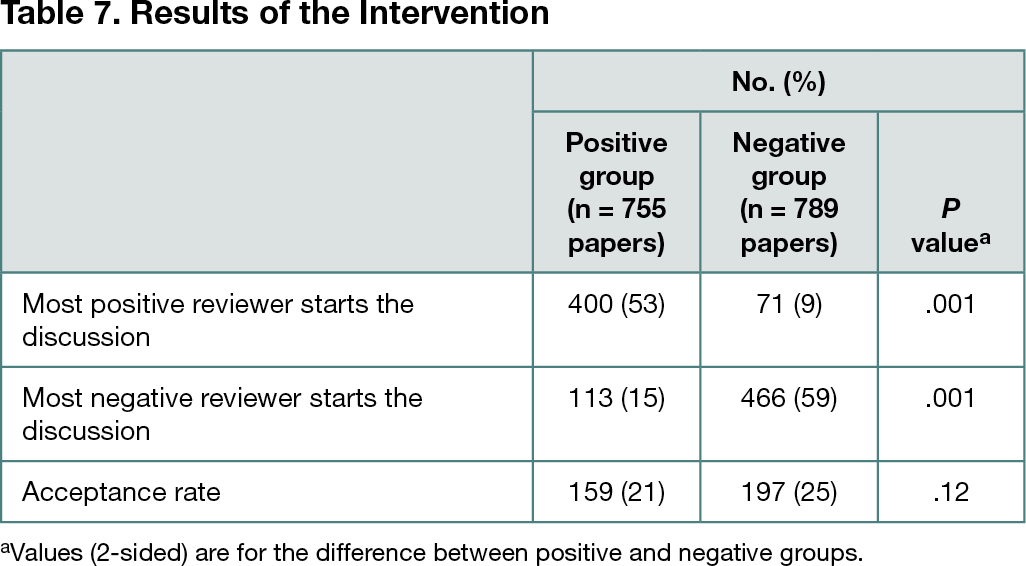

Results

Among 4625 ICML submissions, 1544 borderline papers (33%) were identified and allocated to the positive (755) and negative (789) groups. The intervention created a strong difference in the order in which reviewers joined the discussion (Table 7, rows 1 and 2), confirming that the experiment had strong detection power. However, the difference in the order did not induce a difference in the outcomes (Table 7, row 3). Thus, there is no evidence of herding in peer review discussions of ICML 2020.

Conclusions

Other applications involving discussions suffer from herding. If present in peer review, herding could result in significant unfairness in the acceptance of papers and the awarding of grants. The finding of this study2 was statistically negative but conveys a positive message: no evidence of herding was found in peer review discussions, and hence no specific measures to counteract herding appear to be needed.

References

1. Banerjee AV. A simple model of herd behavior. Q J Econ. 1992;107(3):797-817. doi:10.2307/2118364

2. Stelmakh I, Rastogi C, Shah NB, Singh A, Daumé H III. A large scale randomized controlled trial on herding in peer-review discussions. arXiv. Preprint posted online November 30, 2020. doi:10.48550/arXiv.2011.15083

1Carnegie Mellon University, Pittsburgh, PA, USA, stiv@cs.cmu.edu; 2University of Maryland, College Park, MD, USA; 3Microsoft Research, New York, NY, USA

Conflict of Interest Disclosures

Ivan Stelmakh reported having interned at Google; Charvi Rastogi reported having interned at IBM.

Funding/Support

This work was supported by the US National Science Foundation (NSF): in part by NSF CAREER award 1942124, which supports research on the fundamentals of learning from people with applications to peer review, and in part by NSF CIF 1763734, which supports research on understanding multiple types of data from people.

Role of the Funder/Sponsor

The NSF had no role in the design and conduct of the study; collection, management, analysis, and interpretation of the data; preparation, review, or approval of the abstract; and decision to submit the abstract for presentation.

Acknowledgements

We thank Edward Kennedy for useful comments on the initial design of this study. We are also grateful to the support team of the Microsoft Conference Management Toolkit (CMT) for their continuous support and help with multiple customization requests. Last, we appreciate the efforts of all reviewers and area chairs involved in the 2020 International Conference on Machine Learning review process.

Additional Information

This study was approved by the Carnegie Mellon University Institutional Review Board.