Abstract

Discrepancies in Reporting Between Trial Publications and Clinical Trial Registries in High-Impact Journals

Sarah Daisy Kosa,1,2 Lawrence Mbuagbaw,1 Victoria Borg Debono,1 Mohit Bhandari,1 Brittany B. Dennis,1 Gabrielle Ene,4 Alvin Leenus,3 Daniel Shi,3 Michael Thabane,1 Thuva Vanniyasingam,1 Chenglin Ye,5 Elgene Yranon,1 Shiyuan Zhang,1 Lehana Thabane1

Objective

The extent to which key information required by clinical trial registries is reported in published manuscripts is unclear. To address this gap in the literature, the primary objective of this study was to estimate the percentage of studies with discrepancies in the reporting of key study conduct items between the clinical trial registry and the manuscript.

Design

We searched PubMed for all randomized clinical trials (RCTs) published between 2012 and 2015 in the top 5 general medicine journals (based on the 2014 impact factor as published by Thomson Reuters), all of which required registration of the RCT as a condition of publication; 200 full-text publications (50 from each year) were randomly selected for data extraction. Key study conduct items were extracted by 2 independent reviewers for each year. An item that was reported differently in the 2 sources, or not reported at all in either source, was considered a discrepancy in reporting between the registry and the full-text publication. Descriptive statistics were calculated to summarize the percentage of studies with discrepancies between the registry and the published manuscript in reporting of key study conduct items. The items of interest were design (ie, randomized controlled trial, cohort study, case-control study, case series), type (ie, retrospective, prospective), intervention, arms, start and end dates (based on month and year where available), use of a data monitoring committee, and sponsor, as well as primary and secondary outcome measures.
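The discrepancy rule and the descriptive summary described above can be illustrated with a minimal Python sketch. It is not the authors' extraction procedure: the function names, the use of `None` for an unreported item, and the case-insensitive, whitespace-trimmed comparison are all assumptions made for illustration, and the sample values are hypothetical.

```python
from typing import Optional, Sequence, Tuple

Pair = Tuple[Optional[str], Optional[str]]  # (registry value, publication value)


def is_discrepant(registry_value: Optional[str],
                  publication_value: Optional[str]) -> bool:
    """Flag an item as discrepant if it is unreported (None) in either
    source or if the two sources report it differently.

    Assumption: values are compared after trimming whitespace and
    lowercasing; the abstract does not specify how values were matched.
    """
    if registry_value is None or publication_value is None:
        return True
    return registry_value.strip().lower() != publication_value.strip().lower()


def percent_discrepant(pairs: Sequence[Pair]) -> float:
    """Percentage of studies whose item is discrepant between sources."""
    if not pairs:
        raise ValueError("at least one study is required")
    flagged = sum(is_discrepant(reg, pub) for reg, pub in pairs)
    return 100.0 * flagged / len(pairs)


# Hypothetical start dates (year-month) for four studies.
start_dates = [
    ("2012-03", "2012-03"),  # same in both sources
    ("2012-03", "2012-05"),  # reported differently
    (None, "2013-01"),       # not reported in the registry
    ("2014-07", "2014-07"),  # same in both sources
]
print(percent_discrepant(start_dates))
```

Applied once per item (design, type, intervention, and so on) across all sampled studies, a function of this kind would yield one percentage per item.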

Results

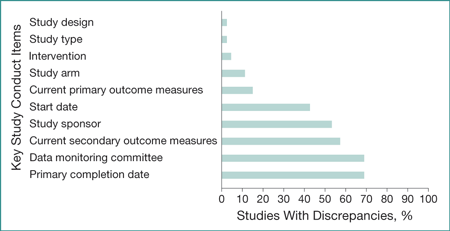

In the sample of 200 RCTs, there were relatively few studies with discrepancies in study design (n=6 [3%]), study type (n=6 [3%]), intervention (n=10 [5%]), and study arm (n=24 [12%]) (Figure). Only 30 studies (15%) had discrepancies in their primary outcomes. However, there were often discrepancies in study start date (n=86 [43%]), study sponsor (n=108 [54%]), and secondary outcome measures (n=116 [58%]). Almost 70% of studies had discrepancies regarding the use of a data monitoring committee and primary completion date reporting.

Figure. Percentage of Studies With Discrepancies in Reporting Between Trial Publications and Clinical Trial Registries Among 200 RCTs Published in High-Impact Journals

Conclusions

We identified discrepancies in reporting between publications and clinical trial registries. These findings are limited because they are based only on a subset of RCTs in the included journals and may not be generalizable to all RCTs within those journals, other disciplines, journals in other languages, or lower-impact journals. Further measures are needed to improve reporting given the potential threats to the quality and integrity of scientific research.

1Department of Health Research Methods, Evidence, and Impact, McMaster University, Hamilton, Ontario, Canada, mbuagblc@mcmaster.ca; 2Toronto General Hospital-University Health Network, Toronto, Ontario, Canada; 3Princess Margaret Hospital-University Health Network, Toronto, Ontario, Canada; 4Bachelor of Health Sciences Program, McMaster University, Hamilton, Ontario, Canada; 5Oncology Biostatistics, Genentech, South San Francisco, CA, USA

Conflict of Interest Disclosures:

None reported.