Abstract

Assessment of Postpublication Critique Policies and Practices at Top-Ranked Journals in 22 Scientific Disciplines

Tom E. Hardwicke,1 Robert T. Thibault,2,3 Jessica E. Kosie,4 Loukia Tzavella,5 Theiss Bendixen,6 Sarah A. Handcock,7 Vivian E. Köneke,7 John P. A. Ioannidis2,8,9,10

Objective

To describe how top-ranked journals across 22 scientific disciplines handle postpublication critique such as letters, commentaries, and online comments.¹⁻³

Design

Cross-sectional assessment of policies and practices related to postpublication critique at 330 journals. These were the 15 top-ranked journals (by impact factor) in each of 22 scientific disciplines (as defined by Clarivate Essential Science Indicators), and the disciplines were assigned to 5 high-level scientific domains defined by the authors. Policy information was extracted from journal websites in November 2019. For each journal offering postpublication critique, a random sample of 10 research articles published in 2018 was examined to determine whether the article’s webpage linked to any postpublication critique. This gave 2066 articles in total, because 1 journal published only 6 research articles in 2018. Features of all linked postpublication critiques and associated author replies were recorded.
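The article-sampling step can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' procedure: the abstract does not say what software or random seed was used. The catalog structure, the journal names, the 50-articles-per-journal figure, and the seed value are all hypothetical. The sketch draws up to 10 articles per journal and takes every article from a journal that published fewer. It also shows how 206 journals × 10 articles + 1 journal × 6 articles yields the 2066 sampled articles.

```python
import random

def sample_articles(articles_by_journal, per_journal=10, seed=2019):
    """Draw up to `per_journal` research articles from each journal.

    Journals with fewer eligible articles contribute all of them.
    `articles_by_journal` maps a journal name to a list of article IDs;
    this structure (and the seed) is a hypothetical choice for illustration.
    """
    rng = random.Random(seed)  # fixed seed so the draw is reproducible
    sample = {}
    for journal, articles in articles_by_journal.items():
        k = min(per_journal, len(articles))  # take all if fewer than 10
        sample[journal] = rng.sample(articles, k)  # sampling without replacement
    return sample

# Hypothetical catalog: 206 journals with 50 research articles each in 2018,
# plus 1 journal that published only 6.
catalog = {f"journal_{i}": [f"j{i}_a{n}" for n in range(50)] for i in range(206)}
catalog["small_journal"] = [f"small_a{n}" for n in range(6)]

sample = sample_articles(catalog)
total = sum(len(ids) for ids in sample.values())
print(total)  # 2066
```

Capping each draw with `min` rather than dropping the small journal keeps every journal that offers postpublication critique in the sample. This matches the abstract's note that one journal contributed only 6 articles.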

Results

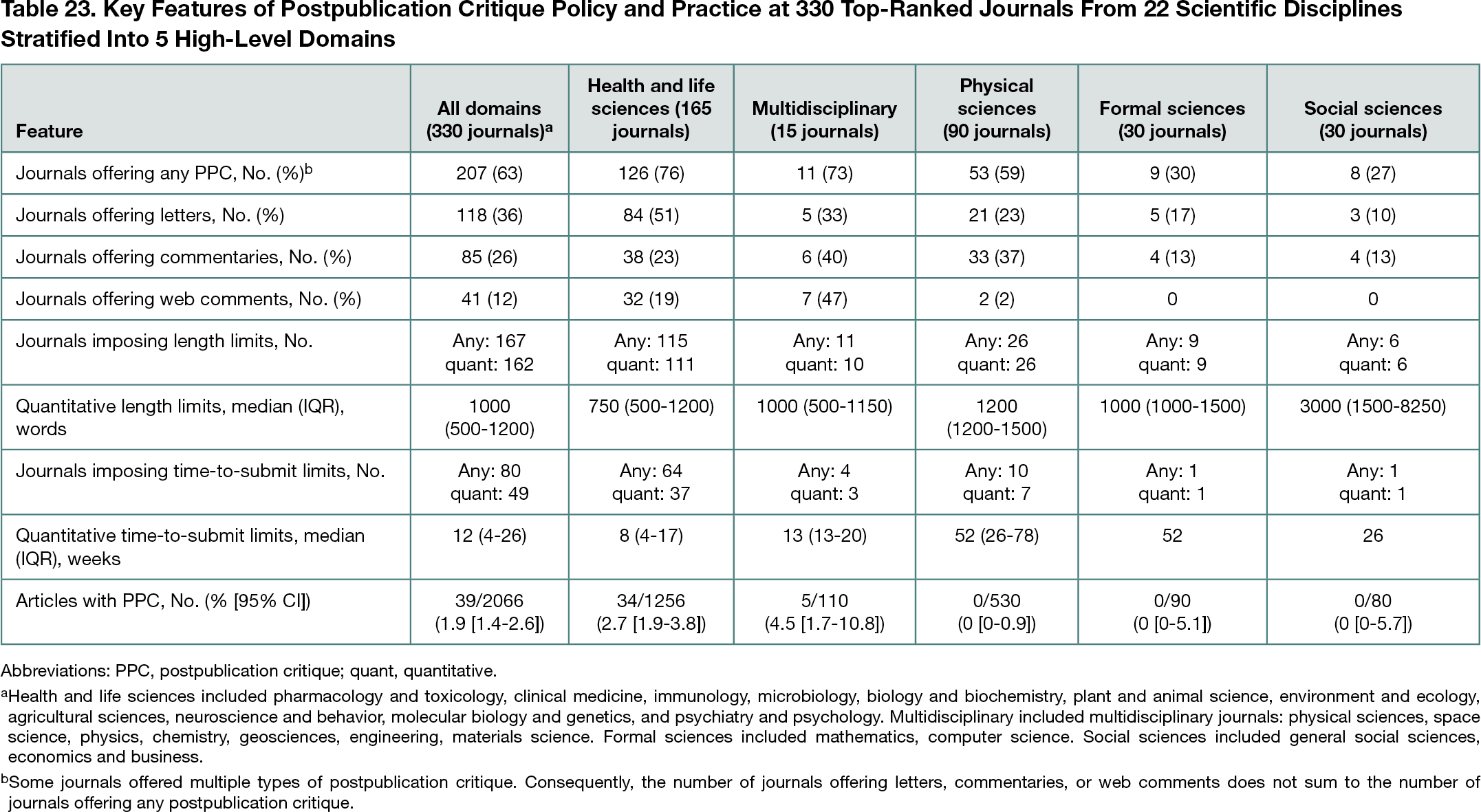

Overall, 207 of 330 journals (63%) offered postpublication critique, such as letters (118 journals), commentaries (85), or web comments (41). However, they often imposed limits on length (median, 1000 words; IQR, 500-1200 words) and time to submit (median, 12 weeks; IQR, 4-26 weeks). The most restrictive limits were 175 words and 2 weeks; the least restrictive policies imposed no limits. Seventy-four journal policies implied independent external peer review of postpublication critique.

Of a random sample of 2066 research articles published by journals offering postpublication critique, 39 (1.9%; 95% CI, 1.4%-2.6%) were linked to at least 1 postpublication critique (58 postpublication critiques in total). Of these 39 target articles, 34 were from the health and life sciences and 5 were from multidisciplinary journals. Examination of all 58 postpublication critiques found that they addressed issues related to design (19), implementation (3), analysis (19), reporting (10), interpretation (45), and ethics (1); a single critique could address more than 1 issue. Twenty-nine were paywalled. Forty-five had conflict of interest statements, 15 of which declared a potential conflict. Forty-four received an author reply, and 41 of these replies asserted that the authors’ conclusions were unchanged. Fifty-one did not include any novel statistical analyses of original or new data, though only 3 target articles stated that their data were available.

Journals in the health and life sciences and multidisciplinary journals offered and published more postpublication critique than journals in other domains (Table 23). Clinical medicine stood out: all 15 of its journals allowed postpublication critique, and it had the highest prevalence of postpublication critique (13% of 150 sampled articles). However, these journals also imposed the strictest limits on length (median, 400 words; IQR, 400-550 words) and time to submit (median, 4 weeks; IQR, 4-6 weeks).
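The reported 95% CI for the 39 of 2066 sampled articles linked to critique can be reproduced with a Wilson score interval for a binomial proportion. The abstract does not state which interval method was used, so this is a consistency check under that assumption, not the authors' analysis code.

```python
import math

def wilson_ci(successes, n, z=1.959964):
    """Wilson score interval for a binomial proportion (95% by default)."""
    p = successes / n
    denom = 1 + z**2 / n
    center = (p + z**2 / (2 * n)) / denom
    half = z * math.sqrt(p * (1 - p) / n + z**2 / (4 * n**2)) / denom
    return center - half, center + half

# 39 of 2066 sampled research articles were linked to >= 1 critique.
low, high = wilson_ci(39, 2066)
print(f"{39 / 2066:.1%} (95% CI, {low:.1%}-{high:.1%})")  # 1.9% (95% CI, 1.4%-2.6%)
```

A simple Wald interval (p ± 1.96·√(p(1 − p)/n)) gives about 1.3%-2.5% for the same counts. The reported bounds are therefore consistent with a Wilson-type interval, although other methods may round to similar values.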

Conclusions

Top-ranked academic journals across scientific disciplines often pose barriers to the cultivation, documentation, and dissemination of postpublication critique. Publication of postpublication critique was rare in most disciplines. Published postpublication critique may have little effect on authors’ conclusions.

References

1. Bastian H. A stronger post-publication culture is needed for better science. PLoS Med. 2014;11(12):e1001772. doi:10.1371/journal.pmed.1001772

2. Altman DG. Poor-quality medical research: what can journals do? JAMA. 2002;287(21):2765-2767. doi:10.1001/jama.287.21.2765

3. Winker MA. Letters and comments published in response to research: whither post publication peer review? Abstract presented at: International Congress on Peer Review and Scientific Publication; September 9, 2013; Chicago, Illinois. https://peerreviewcongress.org/2013-abstracts/

1Department of Psychology, University of Amsterdam, Amsterdam, the Netherlands, hardwickete@gmail.com; 2Meta-Research Innovation Center at Stanford (METRICS), Stanford University, Stanford, CA, USA; 3School of Psychological Science, University of Bristol, Bristol, UK; 4Department of Psychology, Princeton University, Princeton, NJ, USA; 5School of Psychology, Cardiff University, Cardiff, UK; 6Department of the Study of Religion, Aarhus University, Aarhus, Denmark; 7Florey Department of Neuroscience and Mental Health, University of Melbourne, Melbourne, Australia; 8Charité–Universitätsmedizin Berlin, Berlin, Germany; 9Meta-Research Innovation Center Berlin (METRIC-B), QUEST Center for Transforming Biomedical Research, Berlin Institute of Health, Charité–Universitätsmedizin Berlin, Berlin, Germany; 10Departments of Medicine, Epidemiology and Population Health, Biomedical Data Science, and Statistics, Stanford University, Stanford, CA, USA

Conflict of Interest Disclosures

Tom E. Hardwicke receives funding from the European Union’s Horizon 2020 research and innovation programme under the Marie Skłodowska-Curie grant agreement No. 841188. Robert T. Thibault is supported by a general support grant awarded to METRICS from the Laura and John Arnold Foundation and a postdoctoral fellowship from the Fonds de recherche du Québec–Santé. Theiss Bendixen thanks the Aarhus University Research Foundation for support. Jessica E. Kosie received funding from NSF SBE Postdoctoral Research Fellowship 2004983 and NIH F32 National Research Service Award HD103439. Loukia Tzavella was supported by ESRC postdoctoral fellowship ES/V011030/1. No other conflicts were reported.

Funding/Support

The Meta-Research Innovation Center at Stanford (METRICS) is supported by a grant from the Laura and John Arnold Foundation. The Meta-Research Innovation Center Berlin (METRIC-B) is supported by a grant from the Einstein Foundation and Stiftung Charité.

Role of the Funder/Sponsor

The funders had no role in this research.