Assessment of Errors in Peer Reviews Published With Articles in The BMJ

Abstract

Fred Arthur1

Objective

It is possible that most published research findings are false.1 Effective peer review should therefore detect 2 kinds of error: study error and mental model error. Study error exists at the level of the individual study and can be detected by traditional epidemiological and statistical analysis. Mental model error exists at the level of the underlying structural intellectual knowledge and beliefs,2 whose accuracy supplies the prior probability and thus substantially affects the study's posterior probability. This study assessed whether the peer review process at The BMJ detects and manages both kinds of error.
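How the prior constrains the posterior can be illustrated with Bayes' theorem in the form Ioannidis used for the probability that a statistically significant finding is true. The sketch below treats `prior` as the probability that the underlying mental model (and hence the tested hypothesis) is correct; that mapping, the function name `posterior_true`, and the numbers in the usage loop are illustrative assumptions, not values from this study.

```python
def posterior_true(prior: float, power: float, alpha: float) -> float:
    """Probability that a statistically significant finding is true.

    Bayes' theorem:
        P(true | significant) = power * prior / (power * prior + alpha * (1 - prior))
    `prior` is an assumed probability that the underlying mental model is correct.
    """
    if not all(0.0 <= p <= 1.0 for p in (prior, power, alpha)):
        raise ValueError("prior, power, and alpha must lie in [0, 1]")
    true_positive = power * prior           # true hypotheses detected as significant
    false_positive = alpha * (1.0 - prior)  # false hypotheses that reach significance
    denominator = true_positive + false_positive
    if denominator == 0.0:
        raise ValueError("no significant results are possible with these inputs")
    return true_positive / denominator


# The same well-designed study (power 0.9, alpha 0.05) under two hypothetical priors.
for prior in (0.5, 0.05):
    print(f"prior={prior:.2f} -> posterior={posterior_true(prior, 0.9, 0.05):.2f}")
```

With identical study quality, the posterior falls from about 0.95 to about 0.49 when the assumed prior drops from 0.5 to 0.05, which is one way to see why strong internal validity alone may not secure clinical applicability.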

Design

The peer review process of The BMJ was assessed for an inclusive sample of articles in the research section published in July 2021 with their peer review cycles (ie, editor, reviewer, and author comments and decision letters). The peer review process for each article was rated on 5-point Likert scales for its awareness of, and efficacy in, detecting and managing study error and mental model error, with 5 representing high estimated awareness and efficacy. For mental model error detection, higher scores were assigned to peer review cycles containing the following 4 elements: (1) active criticism of the underlying mental model (possible errors in diagnostic categories, underlying beliefs about therapy mechanisms, and the pathological appropriateness of outcome measures) to estimate the final study posterior probability; (2) awareness that studies produce contingent fact claims and that intellectual validity requires analysis of this contingency; (3) awareness that key patient outcomes must exist outside the mental model to minimize contamination of outcomes by the model and circular reasoning within it (eg, use of all-cause morbidity and mortality); and (4) awareness that clinicians are most interested in the probability of the mental model because they use it daily to inform the many data decisions made in clinical practice (eg, decisions regarding history, physical examination, differential diagnosis, results, treatment planning, and outcome assessment). A single rating on each scale was assigned to the totality of each article's review process, comprising editor and reviewer comments and author responses. The resulting distributions of study error and mental model error scores were compared with the Mann-Whitney test.
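The score comparison above can be sketched as a two-sided Mann-Whitney U test. The abstract does not state which variant was used, so the choices here are assumptions: midranks for tied Likert scores, a tie-corrected normal approximation, and a continuity correction. The function name `mann_whitney_u` and the toy scores in the usage lines are hypothetical and are not the study's data; with samples this small, an exact or permutation test may be preferable.

```python
import math
from typing import Sequence, Tuple


def mann_whitney_u(x: Sequence[float], y: Sequence[float]) -> Tuple[float, float]:
    """Two-sided Mann-Whitney U test (normal approximation, tie and continuity corrected).

    Returns (U statistic for sample x, two-sided p-value).
    """
    n1, n2 = len(x), len(y)
    if n1 == 0 or n2 == 0:
        raise ValueError("both samples must be non-empty")
    values = list(x) + list(y)
    order = sorted(range(len(values)), key=lambda i: values[i])

    # Assign midranks to tied values and accumulate the tie correction sum(t^3 - t).
    ranks = [0.0] * len(values)
    tie_term = 0.0
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        midrank = (i + j) / 2 + 1  # average of the 1-based ranks i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = midrank
        t = j - i + 1
        tie_term += t**3 - t
        i = j + 1

    # U for x is its rank sum minus the minimum possible rank sum.
    u1 = sum(ranks[:n1]) - n1 * (n1 + 1) / 2
    n = n1 + n2
    mean_u = n1 * n2 / 2
    var_u = n1 * n2 / 12 * ((n + 1) - tie_term / (n * (n - 1)))
    if var_u == 0:
        return u1, 1.0  # every score identical: no evidence of a difference
    z = max(abs(u1 - mean_u) - 0.5, 0.0) / math.sqrt(var_u)
    p = math.erfc(z / math.sqrt(2))  # two-sided normal tail probability
    return u1, min(p, 1.0)


# Hypothetical Likert scores, for illustration only.
study_error_scores = [5, 4, 5, 4, 4, 5]
mental_model_scores = [2, 1, 2, 3, 2, 1]
u, p = mann_whitney_u(study_error_scores, mental_model_scores)
print(f"U={u:.1f}, p={p:.4f}")  # U=36.0 here because the toy groups do not overlap
```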

Results

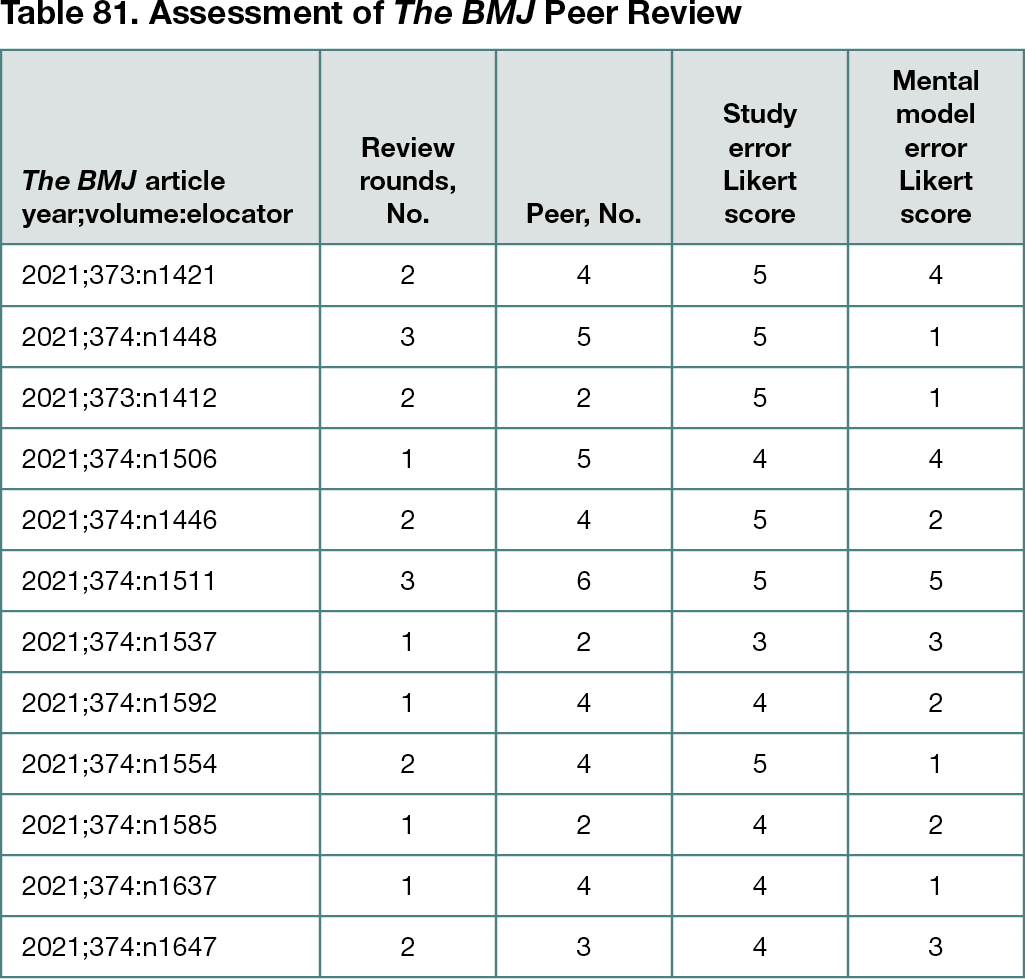

Forty-five peer reviewers produced 21 rounds of review for 12 articles (Table 81). The median study error score was 4.5 and the median mental model error score was 2; the Mann-Whitney test rejected equality of the 2 distributions (P < .05).

Conclusions

This preliminary study found The BMJ peer review process to be effective at detecting and managing study error but much less aware of mental model error. Because the probability of the underlying mental model may affect each study's posterior probability, excellent internal validity may not provide a strong warrant for clinical applicability. Peer review that detects and manages both study error and mental model error may improve the clinical applicability of studies. Preliminary discussions, followed by the development of guidelines, may help correct this deficiency.

References

1. Ioannidis JPA. Why most published research findings are false. PLoS Med. 2005;2(8):e124.

2. Holland JH, Holyoak KJ, Nisbett RE, Thagard PR. Induction: Processes of Inference, Learning, and Discovery. MIT Press; 1986.

1Western University, London, ON, Canada, fred.arthur@rogers.com

Conflict of Interest Disclosures

None reported.