Altmetric Footprint of Retracted and Corrected Publications: The Role of Misinformation and Disinformation

Abstract

Ashraf Maleki,1 Niina Sormanen,1 Kim Holmberg1

Objective

While the alternative metric (altmetric) visibility of retracted publications has been studied,1 less is known about how media and social media engage with corrections issued after retractions. This study examines how different types of retracted and corrected scientific publications engage scholarly and public audiences across altmetric platforms, focusing on how the reasons for retraction influence visibility. We distinguish between misinformation (unintentional error), disinformation (intentional deception), and disputed cases and assess their relative representation in altmetric activity.

Design

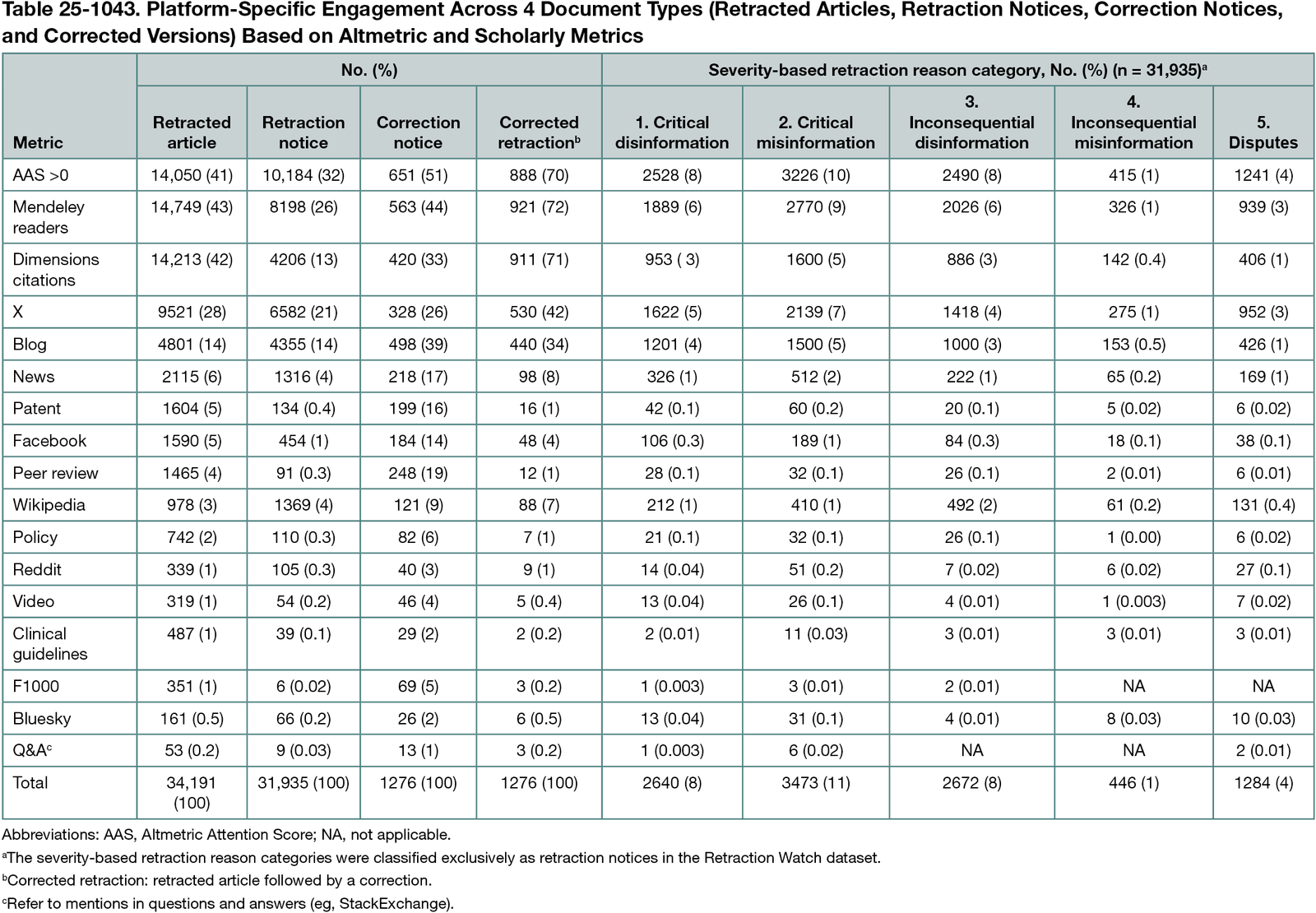

This cross-sectional study analyzes altmetric data of retracted and corrected publications from multiple sources. Data were extracted in November and December 2024 from Retraction Watch, Scopus, and Altmetric.com and updated in June 2025. The dataset comprised (1) 34,191 DOIs of original articles (later retracted), (2) 31,935 DOIs of retraction notices, (3) 1276 DOIs of correction notices, and (4) corrected versions (ie, retracted articles followed by a correction). To ensure clarity in altmetric attribution, we excluded records in which a single DOI was assigned to both the original article and its corresponding retraction or correction notice. Retraction reasons were coded using a 6-zone classification framework (based on Sormanen et al2) that distinguishes both intent and (nonscholarly) consequence: critical disinformation (eg, data falsification or fabrication), critical misinformation (eg, unreliable analyses or results), inconsequential disinformation (eg, duplication or plagiarism), and inconsequential misinformation (eg, miscommunication or lack of approvals), along with disputed (eg, expressions of concern) and ambiguous cases. Each publication was counted using a severity-exclusive method: when multiple reasons were present, the record was assigned to the most severe applicable category (Table 25-1043). Platform-specific mention counts were analyzed across 16 altmetric platforms, including news media, blogs, X/Twitter, Mendeley, Dimensions, and policy documents. Engagement levels were compared across document types, and variation in engagement by reason category was examined.
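The shared-DOI exclusion, the severity-exclusive counting, and the share of records with an Altmetric Attention Score greater than 0 can be sketched in Python as follows. This is a minimal illustration, not the authors' pipeline: the `Record` structure, the function names, and the example DOIs are hypothetical, and the ranking in `SEVERITY_ORDER` is an assumption, since the abstract states only that the most severe applicable category is chosen (the actual scheme is given in Table 25-1043).

```python
from collections import Counter
from dataclasses import dataclass, field

# ASSUMPTION: hypothetical severity ranking (index 0 = most severe).
# The abstract says only that the most severe applicable category wins.
SEVERITY_ORDER = [
    "critical disinformation",
    "critical misinformation",
    "inconsequential disinformation",
    "inconsequential misinformation",
    "disputed",
    "ambiguous",
]
RANK = {category: i for i, category in enumerate(SEVERITY_ORDER)}


@dataclass
class Record:
    doi: str         # DOI of the original (later retracted) article
    notice_doi: str  # DOI of its retraction or correction notice
    categories: set = field(default_factory=set)  # coded reason categories
    aas: float = 0.0  # Altmetric Attention Score


def assign_category(categories):
    """Severity-exclusive counting: keep only the most severe coded category."""
    if not categories:
        raise ValueError("record has no coded retraction reason")
    # An unknown category label raises KeyError via RANK lookup.
    return min(categories, key=RANK.__getitem__)


def share_with_attention(records):
    """Drop records whose article and notice share one DOI, assign each
    remaining record to a single category, and return the share with AAS > 0."""
    kept = [r for r in records if r.doi != r.notice_doi]
    totals, attended = Counter(), Counter()
    for r in kept:
        category = assign_category(r.categories)
        totals[category] += 1
        if r.aas > 0:
            attended[category] += 1
    return {category: attended[category] / totals[category] for category in totals}


# Hypothetical example records (placeholder DOIs, not study data).
records = [
    Record("10.0000/example-a", "10.0000/example-a-notice",
           {"inconsequential disinformation", "critical misinformation"}, aas=3.0),
    Record("10.0000/example-b", "10.0000/example-b-notice",
           {"critical disinformation"}, aas=0.0),
    Record("10.0000/example-c", "10.0000/example-c",  # shared DOI: excluded
           {"disputed"}, aas=5.0),
    Record("10.0000/example-d", "10.0000/example-d-notice",
           {"critical misinformation"}, aas=0.0),
]
print(share_with_attention(records))
# {'critical misinformation': 0.5, 'critical disinformation': 0.0}
```

Using `min` over a rank lookup keeps the severity rule in one place: if the real ordering differs, only `SEVERITY_ORDER` needs to change.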

Results

Among the 37,451 records, original articles received the most absolute attention: 41% had an Altmetric Attention Score (AAS) greater than 0, 43% were saved in Mendeley, and 28% appeared on X. Retraction notices were less visible (32% with AAS > 0; 26% in Mendeley). This suggests that retracted studies, often based on unreliable or deceptive findings, tend to receive more attention than the notices meant to correct them. Correction-related records showed higher proportional attention (51% of correction notices and 70% of the corresponding retracted articles had an AAS greater than 0) but remained rare and largely absent from news (17% and 8%, respectively) and policy documents (6% and 1%, respectively). Among retraction notices, the most frequent reasons were critical disinformation (27%), inconsequential disinformation (27%), and critical misinformation (21%), yet in each of these categories only 8% to 10% of notices had an AAS greater than 0.

Conclusions

Retractions receive more attention than corrections, even in serious cases. Corrections remain mostly invisible in public-facing media, underscoring the need for better metadata and targeted communication strategies.

References

1. Serghiou S, Marton RM, Ioannidis JP. Media and social media attention to retracted articles according to Altmetric. PLoS One. 2021;16(5):e0248625. doi:10.1371/journal.pone.0248625

2. Sormanen N, Holmberg K, Maleki A. Characterizing retraction reasons: types of mis- and disinformation in the scientific publications. Research in progress.

1Department of Social Research, University of Turku, Turku, Finland, ashraf.maleki@utu.fi.

Conflict of Interest

None reported.

Funding

This research is supported by 2 projects: one funded by the European Media and Information Fund, focusing on unreliable science and the role of media regulation, and one funded by the Research Council of Finland, examining the impact of scientific misinformation.

Acknowledgment

We sincerely thank Professor Mike Thelwall (University of Sheffield) for his support in acquiring and sharing the Retraction Watch dataset.