2009 Abstracts

THURSDAY, SEPTEMBER 10

Authorship and Contributorship

Influence of Authorship Order and Corresponding Author on Perceptions of Authors’ Contributions

Mohit Bhandari,1,2 Jason Busse,2 Abhaya Kulkarni,2 P. J. Devereaux,2 Pamela Leece,2 Sohail Bajammal,1 and Gordon Guyatt2

Objective

The majority of medical journals rely on the order of authors and who is listed as corresponding author to convey authors’ contributions. How readers interpret authors’ roles based on authorship order and corresponding author remains uncertain. We explored how, on the basis of authorship order and designation of corresponding author, academic leaders perceive authors’ contributions to research.

Design

We conducted a cross-sectional survey of chairpersons in the departments of surgery and medicine across North America (259 United States and 32 Canada). We developed a hypothetical study and authorship byline with 5 authors, designating either the first or the last author as corresponding author. Respondents reported their perceptions about the authors’ roles in study conception and design, analysis and interpretation of data, and statistical analysis, and their view of the most prestigious authorship position. We used multinomial regression to explore the association of corresponding-author designation, surgical versus medical chair, and country of respondent with respondents’ beliefs about author roles.

Results

One hundred sixty-five chairpersons (144 United States and 21 Canada; overall response rate: 57%) completed our survey. When the last author was designated as corresponding, perceptions of the first author’s role in study concept and design (odds ratio [OR], 0.25; 95% confidence interval [CI], 0.15-0.41, P < .001) and analysis and interpretation of results (OR, 0.22; 95% CI, 0.13-0.38, P < .001) decreased significantly. The perceived prestige of the last author position increased significantly when the last author was designated as corresponding author (OR, 4.0; 95% CI, 2.4-6.4, P < .001). Respondents varied widely in their inferences about the contributions of the remaining authors irrespective of who was corresponding, with fewer than 40% attributing any particular role to authors 2 to 4. Our findings did not differ significantly by specialty or country of the respondent.
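Readers can check that the reported odds ratios, 95% CIs, and P values are mutually consistent. The minimal Python sketch below assumes each CI was computed symmetrically on the log-odds scale (usual for regression output, but not stated in the abstract); the function name `check_odds_ratio` is illustrative. It back-calculates the standard error of log(OR) from the CI width, the OR implied by the CI midpoint, and a two-sided P value.

```python
import math
from statistics import NormalDist

def check_odds_ratio(or_point, ci_low, ci_high, level=0.95):
    """Back-calculate SE of log(OR) from a reported CI and derive z and a two-sided P.

    Assumes a Wald-type CI that is symmetric on the log scale.
    """
    z_crit = NormalDist().inv_cdf(1 - (1 - level) / 2)  # about 1.96 for a 95% CI
    log_lo, log_hi = math.log(ci_low), math.log(ci_high)
    se = (log_hi - log_lo) / (2 * z_crit)
    # The estimate implied by the CI is the geometric midpoint of its limits
    implied_or = math.exp((log_lo + log_hi) / 2)
    z = math.log(or_point) / se
    p = 2 * NormalDist().cdf(-abs(z))  # two-sided P value
    return {"se_log_or": se, "implied_or": implied_or, "z": z, "p": p}

# Values reported in the Results paragraph above
for label, est, lo, hi in [
    ("first author, concept/design", 0.25, 0.15, 0.41),
    ("first author, analysis/interpretation", 0.22, 0.13, 0.38),
    ("last author, prestige", 4.0, 2.4, 6.4),
]:
    r = check_odds_ratio(est, lo, hi)
    print(f"{label}: implied OR {r['implied_or']:.2f}, z {r['z']:.2f}, P {r['p']:.1e}")
```

Small gaps between the reported and implied OR (for example, 4.0 vs about 3.9) are expected from rounding of the published CI limits.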

Conclusions

Academic department chairs were influenced substantially by corresponding author designation. We further confirm that without authors’ explicit contributions in research papers, many readers will remain uncertain or draw false conclusions about author credit and accountability.

1Division of Orthopedic Surgery, McMaster University/Hamilton General Hospital, 237 Barton St E, 7 North, Suite 727, Hamilton, Ontario L8L 2X2, Canada, e-mail: bhandam@mcmaster.ca; 2Department of Clinical Epidemiology and Biostatistics, McMaster University, Hamilton, Ontario, Canada; and 3Division of Neurosurgery, University of Toronto, Toronto, Ontario, Canada

Ghost Writers and Honorary Authorship: A Survey From the Chinese Medical Journal

Xiu-yuan HAO, Shou-chu QIAN, Su-ning YOU, and Mou-yue WANG

Objective

To estimate the prevalence of ghost authors and honorary authors among Chinese authors according to the authorship criteria defined by the International Committee of Medical Journal Editors (ICMJE).

Design

The first authors who submitted manuscripts to the Chinese Medical Journal between September 1 and December 31, 2008, were surveyed by questionnaire. The questionnaire comprised 3 questions: (1) Who made the final decision on the authorship of your manuscript submitted to the journal? (2) Is someone without contribution to the research listed as an author? (3) Has an English-language native speaker done something for you in preparation of the manuscript? If yes, is the speaker listed in the byline or acknowledgment section? The questionnaire was sent to the authors via e-mail. Authors working abroad or from overseas were excluded from the survey.

Results

Among 268 authors who received the questionnaire, 231 (86%) responded and 220 (82%) returned valid questionnaires. The byline was decided by the corresponding author for 40.9% of authors, by the first author for 33.6%, and by all authors jointly for 25.5%. Native English speakers were involved in preparing the manuscripts of 21.4% of authors; the speaker was listed as an author for 7.3%, acknowledged for 3.6%, and neither listed in the byline nor acknowledged (ghost writers) for 10.4%. Overall, 71.4% of respondents reported that all authors in the byline satisfied the authorship criteria, and 28.6% reported that unqualified people were listed as authors (honorary authors): heads of departments or institutions (20.0%), friends of the major authors (3.2%), and others (5.5%). The prevalence of honorary authorship was almost the same whether the byline was decided by an individual author (28%) or by a group of authors (28.6%; Χ2 = 0.006, P > .05). Ghost writers were more common when the byline was decided by an individual author (52.8%) than by a group of authors (41.6%), but the difference was not statistically significant (Χ2 = 0.11, P > .05).
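The two chi-square statistics can be converted to P values directly. This is a minimal sketch, assuming each comparison is a 2 × 2 test with 1 degree of freedom (the abstract does not state the df). For 1 df the upper-tail probability is erfc(sqrt(x / 2)), so only the Python standard library is needed; `chi2_p_value_1df` is an illustrative name.

```python
import math

def chi2_p_value_1df(chi2_stat):
    """Upper-tail P value of a chi-square statistic with 1 degree of freedom.

    A 1-df chi-square is the square of a standard normal Z, so
    P(X > x) = P(|Z| > sqrt(x)) = erfc(sqrt(x / 2)).
    """
    if chi2_stat < 0:
        raise ValueError("chi-square statistic must be non-negative")
    return math.erfc(math.sqrt(chi2_stat / 2))

# Statistics reported in the Results (df = 1 assumed for each comparison)
for label, stat in [("honorary authorship", 0.006), ("ghost writers", 0.11)]:
    p = chi2_p_value_1df(stat)
    print(f"{label}: chi2 = {stat}, P = {p:.2f}, significant at .05: {p < 0.05}")
```

Under that assumption, statistics as small as 0.006 and 0.11 correspond to P values far above .05, so neither comparison reaches statistical significance.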

Conclusions

The rates of ghost writers and honorary authors reported by authors who publish in the Chinese Medical Journal are similar to rates previously reported among US general medical journals for ghost authors and honorary authors. Many Chinese authors may be unaware of the authorship criteria defined by ICMJE.

Chinese Medical Journal, Chinese Medical Association, 42 Dongsi Xidajie, Beijing 100710, China, e-mail: scqian@126.com

Prevalence of Honorary and Ghost Authorship in 6 General Medical Journals, 2008

[Updated September 10, 2009]

Joseph Wislar, Annette Flanagin, Phil B. Fontanarosa, and Catherine D. DeAngelis

Objective

Given increased awareness of authorship responsibility and inappropriate authorship, and following new policies of some medical journals to publish individual author contributions, this study was conducted to assess the prevalence of honorary and ghost authors in 6 leading general medical journals in 2008 and to compare it with the prevalence reported by authors of articles published in 1996.

Design

Online survey of corresponding authors of 896 research articles, review articles, and editorial/opinion articles published in 2008 in 6 general medical journals (Annals of Internal Medicine, JAMA, Lancet, Nature Medicine, New England Journal of Medicine, and PLoS Medicine), selected according to the Institute for Scientific Information Journal Citation Report ranking in the general medicine category. Based on previously reported prevalence rates of 19% of articles with honorary authorship, 9% with ghost authorship, and 2% with both honorary and ghost authorship, the sample size was determined with alpha at 0.05 and 80% power to detect a 10% difference between prevalence rates in 1996 and 2008. We also analyzed rates of honorary and ghost authors by article type and compared rates for the 4 journals that publish authorship contributions vs the 2 journals that do not.
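For readers unfamiliar with power calculations, a generic two-proportion sample-size formula (normal approximation, two-sided test, no continuity correction) is sketched below. It is not necessarily the calculation the authors used: the abstract does not say whether "10% difference" means 10 percentage points or a relative change, or how the fixed historical sample was handled. The example hypothetically reads it as a 10-percentage-point rise in honorary authorship from 19% to 29%; the function name is illustrative.

```python
import math
from statistics import NormalDist

def n_per_group_two_proportions(p1, p2, alpha=0.05, power=0.80):
    """Sample size per group to detect p1 vs p2 with a two-sided z test.

    Normal approximation without continuity correction.
    """
    z_a = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = .05
    z_b = NormalDist().inv_cdf(power)          # about 0.84 for 80% power
    p_bar = (p1 + p2) / 2
    numerator = (z_a * math.sqrt(2 * p_bar * (1 - p_bar))
                 + z_b * math.sqrt(p1 * (1 - p1) + p2 * (1 - p2)))
    return math.ceil(numerator ** 2 / (p1 - p2) ** 2)

# Hypothetical reading: honorary authorship 19% -> 29% (10 percentage points)
print(n_per_group_two_proportions(0.19, 0.29))
```

Larger assumed differences shrink the required sample quickly, because the denominator is the squared difference in proportions.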

Results

A total of 630 (70%) corresponding authors responded to the survey. Included in the final data set were 230 (37%) research articles, 136 (22%) reviews, and 264 (42%) editorials. Based on 545 usable responses on honorary authorship, 112 (21%) articles had honorary authors (range by journal: 14%-32%). This is a non-significant change from 1996 (19%; P = .387). Based on 630 responses, 49 (8%) articles had ghost authors (range by journal: 2%-11%); this is a significant decline from 1996 (12%; P = .024). The prevalence of honorary authors in 2008 was highest in Nature Medicine (32%) and lowest in New England Journal of Medicine (14%). The prevalence of ghost authors was highest in New England Journal of Medicine (11%) and lowest in Nature Medicine (2%). Honorary authors were reported for 26% of original research reports, 17% of reviews, and 17% of editorials. Ghost authors were more prevalent in research articles (12%) than reviews (6%) and editorials (5%). There were no significant differences in rates of honorary and ghost authorship between journals that require author contribution disclosures and those that do not. Further analyses will explore article and author characteristics.

Conclusions

The prevalence of honorary authors has not changed significantly since 1996, but ghost authorship has declined significantly. The prevalence of honorary and ghost authors is still a concern.

JAMA, 515 N State St, Chicago, IL 60654, USA; e-mail: joseph.wislar@jama-archives.org

Ghost Writing: How Some Journals Aided and Abetted the Marketing of a Drug

Lisa Bero and Jenny White

Objectives

To describe the processes by which a pharmaceutical company planned journal publications. To examine variation in policies regarding ghostwriting among the targeted scientific journals.

Design

We conducted a retrospective review of over 1000 internal industry documents concerning the gabapentin (Neurontin) case from the University of California, San Francisco, Drug Industry Document Archive, dated 1996-1999. We searched PubMed and Google Scholar (for gray literature) for articles planned for publication. We selected a set of articles resulting from grants that Parke-Davis gave to Medical Education Systems (MES) in 1996-1997 to publish 24 scientific articles and letters to the editor on gabapentin in peer-reviewed journals with prestigious “guest authors.” We searched the Web sites of, and contacted, all journals to which these articles were submitted to determine their former and current policies regarding conflict of interest, ghost authorship, adherence to standards of the International Committee of Medical Journal Editors (ICMJE), independent disclosure verification procedures, and reasons for rejecting the manuscript, if applicable. We also contacted ICMJE to determine whether these journals followed the Uniform Requirements for Manuscripts Submitted to Biomedical Journals (as updated in 1997).

Results

Eleven proposed articles were eventually published in 7 of the 24 journals originally targeted. None of these articles disclosed participation of Parke-Davis or MES in authorship. Only 2 articles disclosed funding by Parke-Davis or MES. Nine articles were apparently published in alternative journals, 4 of them in supplement issues. Currently all of these journals have policies requiring disclosure of conflicts of interest, but in a majority of cases ghost authorship is not addressed. Information on disclosure verification is sparse.

Conclusions

Scientific journals have varied in their effectiveness in requiring and verifying full disclosure of conflicts of interest and authorship. Uniform standards must be implemented across all journals, so that companies wishing to conceal this information do not have an outlet to publish in journals with weaker policies. These standards should extend to journal supplements and letters to the editor.

Department of Clinical Pharmacy, Institute for Health Policy Studies, University of California, San Francisco, Suite 420, Box 0613, 3333 California St, San Francisco, CA 94143, USA, e-mail: berol@pharmacy.ucsf.edu

Peer Review

The Natural History of Peer Reviewers: The Decay of Quality

Michael Callaham

Objective

Commonly used methods of training and screening journal peer reviewers have been shown not to improve the quality of reviews. Does individual reviewer performance change over time, and in what ways?

Design

All reviews for Annals of Emergency Medicine from 1994 through 2008 were rated and assessed. We used linear mixed effect models to analyze the rating changes over time, calculating actual and predicted intercept and slope for each reviewer, using the xtmixed routine in Stata (ver 10; StataCorp, College Station, TX) and controlling for editor and manuscript. The hypothesis was that individual reviewer performance changes over time.
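The idea of a "slope for each reviewer" can be illustrated with a much simpler model than the one used in the study. The sketch below fits an ordinary least-squares slope of quality score against time separately for each reviewer and skips reviewers with only one time point, mirroring the exclusion of single-review reviewers reported in the Results. It is not the authors' method: the study fitted linear mixed-effects models (Stata xtmixed), which shrink individual slopes toward the pool average and adjust for editor and manuscript, and this sketch does neither. The function name and toy data are hypothetical.

```python
from collections import defaultdict
from statistics import mean

def reviewer_slopes(ratings):
    """Ordinary least-squares slope of quality score vs time, per reviewer.

    ratings: iterable of (reviewer_id, years_since_first_review, score).
    Reviewers with fewer than 2 distinct time points are skipped, since no
    trend can be estimated for them.
    """
    by_reviewer = defaultdict(list)
    for reviewer, t, score in ratings:
        by_reviewer[reviewer].append((t, score))

    slopes = {}
    for reviewer, points in by_reviewer.items():
        times = [t for t, _ in points]
        if len(set(times)) < 2:
            continue  # e.g., reviewers with only 1 review
        t_bar = mean(times)
        y_bar = mean(s for _, s in points)
        sxy = sum((t - t_bar) * (s - y_bar) for t, s in points)
        sxx = sum((t - t_bar) ** 2 for t in times)
        slopes[reviewer] = sxy / sxx  # score points per year
    return slopes

# Hypothetical toy data: "A" declines, "B" improves, "C" has only 1 review
toy = [("A", 0, 4.0), ("A", 1, 3.96), ("A", 2, 3.92),
       ("B", 0, 3.0), ("B", 2, 3.1),
       ("C", 0, 4.5)]
print(reviewer_slopes(toy))
```

Unpooled per-reviewer slopes are noisy for reviewers with few reviews, which is one reason a mixed model is preferred for data like these.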

Results

All completed reviews (14,808) by 1498 reviewers were rated on a validated 5-point scale by 84 editors. Reviewers (academic clinicians and clinical researchers) had served the journal a mean of 71.8 months (minimum of 2 months [159 reviewers, 11% of the total], maximum of 175 months), completing a mean of 13.4 reviews each (range, 1-100) with an average quality score of 3.6. The average score of the pool did not change during the study period, indicating that editors' application of the rating scale did not drift. Reviewers with persistent unsatisfactory scores were removed from regular reviewing. Those with only 1 review (429 reviewers, 29% of the total) could not demonstrate a trend, so were excluded from the analysis. Individual reviewers deteriorated over time at a mean rate of –0.0402 review points per year (95% confidence interval [CI], –0.04733 to –0.0330), but this was masked by the increased quality of new recruits (0.022 points/year). A very small proportion of reviewers improved at 0.05 points/year or better (<1% of the total), and a much larger group grew worse at the same rate (32% of the total). Even 47 senior reviewers selected for consistent quality and volume grew worse at the rate of –0.023 points per year.

Conclusions

Reviewers who improved or deteriorated over time were identified, which could lead to identifying demographic or experience characteristics that predict future performance. However, the majority of reviewers deteriorated over time, although at a very gradual rate.

Department of Emergency Medicine, Box 0208, University of California, San Francisco, CA 94143-0208, USA, e-mail: michael.callaham@ucsf.edu

Does a Mentoring Program for New Peer Reviewers Improve Their Review Quality? A Randomized Controlled Trial

Debra Houry,1 Michael Callaham,2 and Steven Green3

Objective

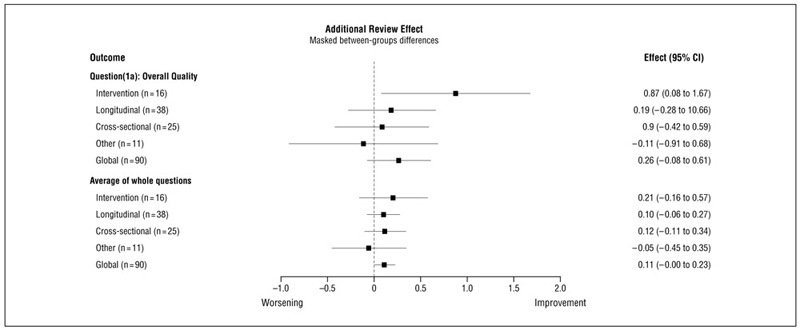

Traditional methods of peer reviewer training and selection have been shown to be ineffective. We instituted a mentoring program, pairing senior reviewers (selected for high quality) and new reviewers on the same manuscripts, to see if this intervention would improve quality as measured by editors’ validated quality scores of all reviews.

Design

Over a 2-year period, all new reviewers were randomly assigned to a control group or an intervention group using a blinded technique. The intervention group was invited by e-mail to join our mentoring program and asked to communicate with their assigned senior reviewer mentor (by e-mail or phone) each time they were assigned a manuscript. Mentors were volunteers chosen for consistent timeliness and quality over years. Mentors and mentees (who were paired by topic during the study) were also notified each time either was assigned a manuscript to review; both groups (and editors) were blinded as to the study intervention. The content and amount of communication were left to the mentor and mentee. After 3 reviews, mentees were surveyed regarding their experience. We calculated reviewer-specific and average trend lines for both groups, accounting for the lack of independence of ratings by using SAS Proc MIXED models (ver 9.1, SAS Institute, Cary, NC) with random intercepts and slopes. We tested for differences in the average longitudinal trends between the 2 groups during the study period.

Results

A total of 17 mentees, 15 controls, and 16 mentors completed 194 reviews. Both mentees and control group reviewers received the same number of invitations, but mentees accepted and completed more reviews than control group reviewers (109 vs 84), and mentee mean scores were higher than control group scores when controlling for within-reviewer trends and variations in volume and group trend effects (3.81 vs 3.24; difference: –0.56 [95% confidence interval, –1.048 to –0.078], P = .027). Satisfaction was not assessed since it has previously been shown not to predict performance, but participants were surveyed for their suggestions.

Conclusions

A simple system of pairing newly recruited peer reviewers with volunteer reviewer mentors chosen for consistent quality over time resulted in slightly more reviews accepted and higher review scores.

1Department of Emergency Medicine, Emory University School of Medicine, Atlanta, GA, USA; 2Department of Emergency Medicine, Box 0208, University of California, San Francisco, CA 94143-0208, USA, e-mail: michael.callaham@ucsf.edu; 3Loma Linda University Medical Center, Santa Barbara, CA, USA

Surveys of Current Status in Biomedical Science Grant Review: Funding Organizations and Grant Reviewers’ Perspectives

Sara Schroter,1 Trish Groves,1 and Liselotte Højgaard2

Objective

To describe current status of biomedical grant review and to seek views on developing uniform requirements for the format and peer review of grant proposals.

Design

Online survey of a convenience sample of 57 international public and private grant-giving organizations. Nine of these sent an online survey via e-mail to a random sample of their grant reviewers.

Results

Of the grant organizations, 28 (49%) from 19 countries responded. Organizations reported the following problems as “frequent/very frequent” (and as having worsened over the past 5 years): declined review requests (54%), late reports (36%), administrative burden (21%), difficulty finding new reviewers (14%), and reviewers not following guidelines (14%). Half reported sending decisions back to reviewers; 29% gave feedback on the usefulness of reviews, and 46% rationed requests to reviewers. Of responding organizations, 57% supported the idea of uniform requirements for grant review, and 61% supported a uniform format of proposals. Of the 371 grant reviewers, 229 (62%) responded from 22 countries. Of these, 48% had reviewed for at least 10 years; 47% said their institutions encouraged grant review, yet only 7% were given protected time, and 75% received no academic recognition for grant review. Reviewers rated the following as “extremely/very important” in deciding to review: supporting external fairness (51%), relevance of topic (48%), professional duty (46%), keeping up to date (43%), and avoiding suppression of innovation (41%). Sixteen percent reported that guidance was very clear; 85% reported not having been trained in grant review, and 63% reported that they would like training. For more than half, lack of recognition and pay were never barriers to reviewing.

Conclusions

Funders reported a growing workload that is getting more difficult to peer review. More than half of grant organizations supported development of uniform requirements for the format and peer review of proposals. Just under half of grant reviewers take part for the good of science and professional development, but many lack academic and practical support and clear guidance. Given these findings, we propose that work start on developing uniform requirements for grant review.

1BMJ Editorial, BMA House, Tavistock Square, London WC1H 9JR, UK, e-mail: sschroter@bmj.com; 2Rigshospitalet, University of Copenhagen, Denmark

Data Sharing and Conflicts of Interest

Reproducible Research: Biomedical Researchers’ Willingness to Share Information to Enable Others to Reproduce Their Results

Christine Laine,1 Michael Berkwits,1 Cynthia Mulrow,1 Mary Beth Schaeffer,1 Michael Griswold,2 and Steven Goodman2

Objective

“Reproducible research” is a model for communicating research that promotes transparency of methods used to collect, analyze, and present data. It allows independent scientists to reproduce results using the original investigators’ same procedures and data and requires a level of transparency seldom sought or achieved in biomedical research. While the full reproducible research model involves more than data sharing, some form of sharing is a basic requirement. This report describes the willingness of biomedical researchers to share their study materials with others.

Design

Authors of research articles published in Annals of Internal Medicine in 2008 were asked whether and under what conditions they would make available to others their protocols, statistical code, and data. We will ask researchers who stated that they would make materials available to report the number of requests received.

Results

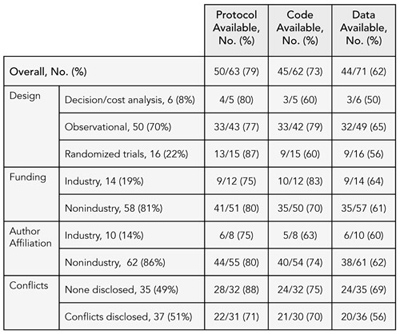

Of 72 articles, authors of 13% stated that protocol was available without conditions, 58% with conditions, and 17% not available. Statistical code was available without conditions for 3%, with conditions for 60%, and unavailable for 24%. Data were available without condition for 4%, with conditions for 57%, and unavailable for 38%. Most authors who said materials were available required interested parties to contact them first and many stated specific conditions for sharing these materials. Authors provided no statement about protocol, statistical code, and data availability for 13%, 14%, and 1%, respectively. Table 1 shows reported availability of study materials by study characteristics.

Table 1. Reported Availability of Study Protocol, Statistical Code, and Data by Study Characteristics (N = 72)

Conclusions

While the majority of authors stated that they would make study materials available to others, most would do so only if others contacted them and attached requirements to the sharing of this information. Researchers were most willing to fully share their protocols and least willing to share data. Information on a larger sample and frequency of requests for materials will be available for presentation in September 2009.

1Annals of Internal Medicine, 190 Independence Mall W, Philadelphia, PA 19106, USA, e-mail claine@acponline.org; 2Johns Hopkins University, Baltimore, MD, USA

Investigator Experiences With Financial Conflicts of Interest in Clinical Trials

Paula A. Rochon,1 Melanie Sekeres,2 John Hoey,3 Joel Lexchin,4 Lorraine E. Ferris,2 David Moher,5 Wei Wu,1 Sunila R. Kalkar,1 Marleen Van Laethem,6 Andrea Gruneir,1 Jennifer Gold,7 M. James Maskalyk,2 David L. Streiner,2 and An-Wen Chan8

Objective

To determine the extent to which investigators follow best practices to mitigate financial conflicts of interest in conducting clinical trials. We hypothesized that nonindustry-funded trials may follow best practices more often than commercially funded trials.

Design

E-mail survey of 1109 investigators from Canadian trial sites listed in 2 international trial registries in November 2006. We asked investigators about their experiences with trials conducted from 2001 to 2006. The main outcome was the frequency of 11 best practices, as defined by expert consensus and the literature, to mitigate financial conflicts of interest in trial preparation, conduct, and dissemination, stratified by funding source (industry vs nonindustry) and type of regulation (externally vs self-regulated).

Results

A total of 844 investigators responded (76%), and 732 provided information for analysis. Fifty-five percent had been investigators on both industry- and nonindustry-funded trials. Overall, 41 (6%) investigators experienced best practices in all of their trials. The 3 externally regulated best practices (trial registration and institutional review of signed contracts and of budgets) were equally or more likely to be followed in industry-funded than in nonindustry-funded trials. Self-regulated practices (contracts had no restrictive confidentiality clauses; sponsor did not own the study data; investigator had access to data from all sites; investigator controlled final decisions regarding study design, analysis, interpretation, and manuscript content) were more frequently followed in nonindustry- than industry-funded trials (P < .001). Overall, 269 (37%) investigators reported having personally experienced (n = 85) or witnessed (n = 236) a financial conflict of interest; more than 70% of these situations related to industry-funded trials.

Conclusions

Few investigators report always following best practices to mitigate financial conflict of interest in their clinical trials experience. Compliance was higher when best practices were externally regulated and trials were not industry funded.

1Women’s College Research Institute at Women’s College Hospital, 790 Bay St, Toronto, Ontario M5G 1N8, Canada, e-mail: paula.rochon@wchospital.ca; 2University of Toronto, Toronto, Ontario, Canada; 3Queen’s University, Kingston, Ontario, Canada; 4York University, Toronto, Ontario, Canada; 5Ottawa Health Research Institute, Ottawa, Ontario, Canada; 6Toronto Rehabilitation Institute, Toronto, Ontario, Canada; 7Baycrest, Kunin-Lunenfeld Applied Research Unit, Toronto, Ontario, Canada; 8Mayo Clinic, Rochester, MN, USA

Acknowledgment of Company Support in Research Publications From Investigator-Sponsored Studies

John M. Ellison, Rosarito P. Jahn, and Kirsten C. Kempe

Objective

Many pharmaceutical and medical device companies provide support for independent research through investigator-sponsored study (ISS) programs (also known as investigator-initiated studies). Typically, a company’s role in ISS projects is limited to providing funding and/or products, with minimal technical input. The investigator initiates and conducts the study and is responsible for complying with all regulations applicable to both investigators and sponsors. The International Committee of Medical Journal Editors (ICMJE) guidelines state that financial and material support should be acknowledged in published research. Our objective was to examine the frequency of acknowledgment in recent publications arising from a medical device company’s ISS program.

Design

Publications (2004 through 2008) traceable to ISS agreements were identified by searching company files, institutional Web sites, and the PubMed database. Each publication was examined by 2 or 3 reviewers for eligibility. Publications not addressing the principal goals of the ISS were excluded. Eligible articles were examined for acknowledgment of company support.

Results

Our preliminary search identified 55 publications (from 20 peer-reviewed medical journals) arising from 18 ISSs. Several ISSs generated multiple publications. Disclosure of company support was found in 31 of the 55 (56%) publications. Acknowledgment was found in 10 of the 14 publications (71%) from ISSs that received company monetary support versus 21 of 41 (51%) publications from ISSs that received product (a diagnostic device) only. Notably, all of the publications without disclosure came from 6 ISSs; 12 of the 18 (67%) ISSs did acknowledge company support in their publications.

Conclusions

Publications from one-third of the ISSs we evaluated did not acknowledge company support. Authors may fail to acknowledge support when the company provides product (a diagnostic device) because it is rarely the intervention under study. Acknowledgments may also be missing due to journal practices or author preferences. All organizations providing support should be acknowledged regardless of the level or type of support provided. Opportunities exist for authors, editors, and industry to improve the transparency of ISS publications.

LifeScan, Inc, a Johnson & Johnson Company, 1000 Gibraltar Dr, Mailstop 3i, Milpitas, CA 95035, USA, e-mail: jellison@its.jnj.com

Commercial Relationships, Funding, and Full Publication of Randomized Controlled Trials Initially Reported in Conference Abstracts

Isabel Rodriguez-Barraquer, Bonnielin Swenor, Roberta Scherer, and Kay Dickersin

Background

In 1998, the Association for Research in Vision and Ophthalmology (ARVO) started requiring disclosure of author commercial relationships in meeting abstracts.

Objective

To explore a possible association between commercial conflicts of interest and publication of randomized controlled trials (RCTs) initially presented as ARVO conference abstracts.

Design

We identified RCTs presented as ARVO abstracts in years 2001-2003 and extracted data from each abstract, including “author commercial interest” (as defined by ARVO), study funding, and direction of results of primary outcome. Commercial relationships and funding sources were not mutually exclusive. Using PubMed (latest search March 2009) and direct author contact, we identified full reports associated with included abstracts. We will present 2001-2003 data and will also explore the effect of additional author and study characteristics.

Results

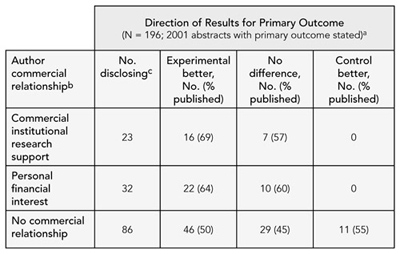

We identified 151 abstract reports, of which 130 reported results for the primary outcome. Sixty-nine abstract reports (53%) had been published in full. Commercial relationships were reported as commercial institutional research support, 23 (18%); personal financial interest, 32 (25%); and no commercial relationship, 86 (66%). Full publication of abstracts with authors having commercial institutional support was 65% (15/23, 95% confidence interval [CI], 46%-85%), for those disclosing personal financial interests was 63% (20/32, 95% CI, 46%-79%), and for those reporting no commercial relationships was 49% (42/86, 95% CI, 38%-59%). Table 2 shows publication rates according to commercial relationship categories and results for the primary outcome. Abstracts noting commercial relationships had a higher full publication rate when the primary outcome result favored the experimental group. Among those studies with results favoring the control group (n = 11), no study disclosed commercial relationships.
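The three reported full-publication intervals are reproduced by a Wald (normal-approximation) confidence interval for a single proportion. That the authors used this method is an inference from the numbers, not something the abstract states. The sketch below uses only the Python standard library; `wald_ci` is an illustrative name.

```python
import math
from statistics import NormalDist

def wald_ci(successes, n, level=0.95):
    """Proportion with a Wald (normal-approximation) confidence interval."""
    z = NormalDist().inv_cdf(1 - (1 - level) / 2)  # about 1.96 for 95%
    p = successes / n
    half_width = z * math.sqrt(p * (1 - p) / n)
    # Clip to [0, 1]; the raw Wald interval can spill outside that range
    return p, max(0.0, p - half_width), min(1.0, p + half_width)

# Full-publication counts reported in the Results paragraph above
for label, k, n in [("commercial institutional support", 15, 23),
                    ("personal financial interest", 20, 32),
                    ("no commercial relationship", 42, 86)]:
    p, lo, hi = wald_ci(k, n)
    print(f"{label}: {p:.1%} (95% CI, {lo:.1%}-{hi:.1%})")
```

Rounded to whole percentages, these match the reported 46%-85%, 46%-79%, and 38%-59%. Wald intervals behave poorly with small samples or proportions near 0 or 1; a Wilson score interval is a common alternative in those cases.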

Table 2. Publication Rates According to Commercial Relationship Categories

a Direction of the difference between the experimental and comparison group for the primary outcome or main study results

b Author commercial relationship categories not mutually exclusive

c Number of abstracts disclosing relationship

Conclusions

Our preliminary data suggest that studies disclosing commercial relationships, particularly those with results favoring the experimental group, have higher full-publication rates than studies reporting no commercial relationship. Further research is needed to explore this association.

Johns Hopkins Bloomberg School of Public Health, Department of Epidemiology, Mailroom W5010, 615 N Wolfe St, Baltimore, MD 21205, USA, e-mail: irodrigu@jhsph.edu

Perceptions and Integration of Conflict of Interest Disclosures Among Peer Reviewers

Suzanne Lippert,1 Michael Callaham,2 and Bernard Lo2

Objective

We investigate how peer reviewers’ understanding of key terms in conflict of interest (COI) statements varies in relation to their demographics and reviewer performance and how peer reviewers believe their understanding influences their assessment of articles.

Design

We conducted an online survey with questions in the following categories: COI knowledge, perception of COI disclosures, personal information, and integration of COI statements into article assessment. A random sample of 146 core reviewers and 264 specialist reviewers for Annals of Emergency Medicine were invited to participate. Survey responses were linked to performance data in the journal files and coded for confidentiality. We provide descriptive statistics of survey responses and examine the relationship of reviewers’ demographics and performance scores with their knowledge of the activities of serving as a consultant or on a speakers bureau and their reported means of integrating that knowledge in article assessments.

Results

Of the 410 invited reviewers, 50% completed the survey. The percentages of respondents who believe it likely or very likely that each of the following holds were: a company expects the presentation of medical content given by a member of a speakers bureau to be consistent with the company’s marketing message, < 80%; a typical consultant to a company would be reluctant to jeopardize the relationship with the company, < 70%; and sponsorship of research influences an author’s judgment, < 90%. Seventy-five percent disagree or strongly disagree that authors have unlimited access to company data without restrictions on publication. Seventy-four percent report that they would read an article more or much more carefully if the author serves on a speakers bureau, 80% if the author is a consultant, and 85% if the author owns stock. The majority of respondents, however, report that their recommendation for publication is unchanged if the lead author falls into any of these categories.

Conclusions

The majority of peer-reviewer respondents believe that financial ties to industry influence authors; nevertheless, most also believe that their recommendation of articles authored by physicians in these conflicted roles remains unchanged.

1ACMC, Emergency Medicine, 5416 Bryant Ave, Oakland, CA 94618, USA, e-mail: suzanne.lippert@gmail.com; 2Department of Emergency Medicine, University of California, San Francisco, CA, USA

Editorial Training, Decisions, Policies, and Ethics

Background, Training, and Familiarity With Ethical Standards of Editors of Major Medical Journals

Victoria Wong1 and Michael Callaham2

Objective

To characterize the current demographics, training, experience, and familiarity with scientific publication ethics of editors of medical journals, expanding on a similar survey of journals in the 1994 Science Citation Index.

Design

The 2007 Journal Citation Reports database was used to determine the journals containing the top 50% of total citations within each of 44 medical specialty categories. Category keywords were used to select only journals that a physician might use in making clinical decisions. An electronic survey was sent to the editors in chief of 190 journals (representing 5 million total citations), requesting information about demographics, training, and editorial duties. We included hypothetical scenarios about plagiarism, authorship, conflicts of interest, and peer review to test editorial knowledge.

Results

Surveys have been received from 93 editors, for a 49% response rate. Respondents to date include editors in 38 of the 44 medical topic categories, whose journals were cited 2.86 million times in the past year. Of respondents, 92% (81) were male, 81% (58) participated in some degree of patient care, and 79% (71) spent less than 50% of their work time on editorial duties. Although 86% (78) of respondents were “confident” or “very confident” in their knowledge of scientific publication ethics when they began the survey, this proportion dropped to 71% (65) by the end. Performance on the editorial scenarios was poor: correct answers were given by 18% (14) for the plagiarism scenario, 30% (27) for authorship, 15% (14) for conflicts of interest, and 16% (15) for peer review. Forty-nine percent (44) believed that additional training in scientific publication ethics would significantly enhance their skills as editors.

Conclusions

Despite high confidence levels among editors in chief of medical journals participating in this survey, there is still a need as well as a demand for further education in scientific publication ethics.

1University of California Davis Medical Center, Neurology Department, 4860 Y St, Suite 3700, Sacramento, CA 95817, USA, e-mail: vwongmd@gmail.com; 2Department of Emergency Medicine, University of California, San Francisco, CA, USA

A Qualitative Study of Editorial Decisions About Publication of Randomized Trials at Major Biomedical Journals

Kirby Lee,1 Elizabeth Boyd,2 and Lisa Bero1

Objective

To understand the decision-making process of journal editors when deciding whether to publish randomized controlled trials (RCTs).

Design

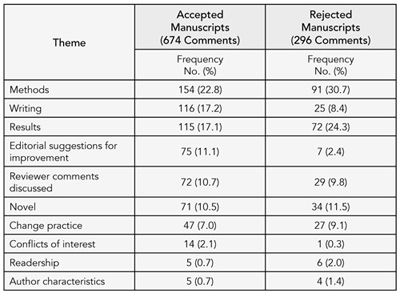

Qualitative study of editorial meeting discussions of randomized trials submitted for publication to 3 major biomedical journals (BMJ, Lancet, and Annals of Internal Medicine) during 2003-2004. A total of 50 RCTs were submitted to the journals, of which 13 were accepted and 37 rejected. Editorial meetings were attended by editors, statisticians, and often invited experts or consultants; attendees varied among the journals and among meetings. We audio-recorded all editorial meetings in which these manuscripts were discussed. Audiotapes were transcribed, and transcripts were analyzed using grounded theory. The 13 RCTs accepted for publication were compared with a random sample of 13 RCTs rejected after peer review, stratified by journal. We identified commonly expressed themes and whether they were favorable toward publication or critical of the manuscript.

Results

Ten recurrent themes emerged: methods; writing (the need for more information, clarification, or explanation to interpret methods and results, or issues with presentation and style); results; editor discussions about improving the manuscript (eg, revising analyses, reporting data, implications of the findings); discussion of peer review comments; novelty; whether the manuscript would change clinical practice; conflicts of interest; readership; and author characteristics. For accepted manuscripts, approximately 54% of the comments were critical and 46% were favorable. For rejected trials, approximately 75% of the comments were critical and 25% were favorable. The frequency of themes discussed differed between accepted and rejected manuscripts (Table 3).

Conclusions

Discussion of the trial reports’ methods, results, and writing dominated the editorial meetings. For trials that were accepted, editors also devoted a large proportion of the discussion to suggestions for improving the manuscript and communicating its key messages. The peer review process resulted in constructive suggestions for changing the reporting of accepted trials.

Table 3. Proportion of Themes Discussed During Editorial Meetings for Accepted and Rejected Manuscripts

1 University of California, San Francisco, Clinical Pharmacy, 3333 California St, Suite 420, San Francisco, CA 94118, USA, e-mail: leek@pharmacy.ucsf.edu; 2University of Arizona, Tucson, AZ, USA

Editorial Policies of Pediatric Journals: A Survey of Instructions for Authors

Joerg J. Meerpohl,1,2 Robert F. Wolff,1,3 Charlotte M. Niemeyer,2 Gerd Antes,1 and Erik von Elm4

Objective

The continued discussion about ethics and quality of biomedical publishing has led to recommendations for submitting authors. However, it is unclear to what extent these recommendations have been implemented in specialty journals. We studied whether aspects of good publication practice were implemented in the author instructions of pediatric journals.

Design

In the Institute for Scientific Information Journal Citation Reports (JCR) 2007, we identified all journals (n = 78) in the subject category “pediatrics” and included those publishing original research articles (n = 69). We accessed the online instructions for authors and extracted information regarding endorsement of the International Committee of Medical Journal Editors (ICMJE) Uniform Requirements for Manuscripts Submitted to Biomedical Journals and of 5 major reporting guidelines, including CONSORT and STROBE; disclosure of conflicts of interest (COIs); and trial registration. Two investigators collected data independently.

Results

The ICMJE Uniform Requirements were mentioned in the author instructions of 38 of the 69 journals (55%). Endorsement of reporting guidelines was low, with CONSORT referred to most frequently (14 journals, 20%). Each of the other 4 reporting guidelines was mentioned in less than 10% of the author instructions. Fifty-four journals (78%) explicitly required authors to disclose COIs, and 16 (23%) either recommended or required trial registration. The odds of endorsing the ICMJE Uniform Requirements increased by a factor of 2.25 (95% confidence interval [CI], 1.17-4.34) per additional impact factor point. Similarly, per additional impact factor point, the odds increased by a factor of 2.32 (95% CI, 0.95-5.70) for requiring disclosure of COIs and by a factor of 3.66 (95% CI, 1.74-7.71) for requiring trial registration.
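Odds ratios expressed per impact factor point compound multiplicatively across larger differences. The sketch below assumes the estimates come from logistic regression with impact factor entered as a linear term (the abstract does not name the model) and that the confidence limits can be rescaled the same way, which holds for a Wald interval on the log-odds scale. The helper names `scale_or` and `ci_excludes_null` are hypothetical.

```python
import math

def scale_or(or_per_unit: float, delta: float) -> float:
    """Odds ratio for a `delta`-unit change, given the OR for a 1-unit change.

    ASSUMPTION: log-odds are linear in the predictor, so log(OR) scales with delta.
    """
    return math.exp(delta * math.log(or_per_unit))

def ci_excludes_null(lower: float, upper: float) -> bool:
    """True if a 95% CI for an odds ratio lies entirely above or entirely below 1."""
    return upper < 1.0 or lower > 1.0

# Per-point estimates reported in the abstract: (OR, lower 95% limit, upper 95% limit).
estimates = {
    "ICMJE endorsement": (2.25, 1.17, 4.34),
    "COI disclosure required": (2.32, 0.95, 5.70),
    "Trial registration": (3.66, 1.74, 7.71),
}

for label, (or_, lo, hi) in estimates.items():
    # Rescale the point estimate and both limits to a 2-point impact factor difference.
    two_pts = [scale_or(x, 2) for x in (or_, lo, hi)]
    print(f"{label}: OR for +2 points = {two_pts[0]:.2f} "
          f"(95% CI {two_pts[1]:.2f}-{two_pts[2]:.2f}); "
          f"CI excludes 1: {ci_excludes_null(lo, hi)}")
```

Under these assumptions, a journal with an impact factor 2 points higher would have about 5.06 times the odds of endorsing the ICMJE Uniform Requirements. The interval for COI disclosure (0.95-5.70) includes 1, so that association is not statistically significant at the 5% level.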

Conclusions

According to the author instructions of journals serving the pediatric research community, several recommendations for publication practice are not yet fully implemented. More widespread endorsement of the ICMJE Uniform Requirements and major reporting guidelines could improve the transparency and completeness of pediatric research publications. Many pediatric journals do not have editorial policies regarding trial registration. Disclosure of COIs at the time of manuscript submission should be mandatory.

1German Cochrane Center, University Medical Center, Freiburg, Stefan-Meier-Strasse 26, Freiburg, 79104 Germany, e-mail: meerpohl@cochrane.de; 2Division of Pediatric Hematology & Oncology, Department of Pediatrics, University Medical Center Freiburg, Freiburg, Germany; 3Kleijnen Systematic Reviews Ltd, York, UK; 4Swiss Paraplegic Research, PO Box, CH-6207 Nottwil, Switzerland, e-mail: erik.vonelm@paranet.ch

Was JAMA’s Requirement for Independent Statistical Analysis Associated With a Change in the Number of Industry-Funded Studies It Published?

Elizabeth Wager,1,2 Rahul Mhaskar,3 Stephanie Warburton,3 and Benjamin Djulbegovic3

Objective

To determine whether the number of industry-funded trials published by JAMA changed after the July 2005 requirement for independent statistical analysis.

Design

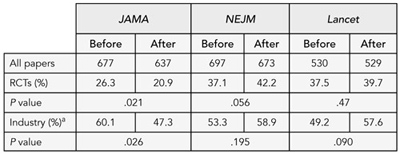

Retrospective before-and-after study. Two investigators independently coded all RCTs published in JAMA from July 1, 2002, to June 30, 2008 (ie, 3 years before and 3 years after the policy). They were not blinded to publication date. RCTs were classified as “Industry” if they had any commercial funding or support. Discrepancies were resolved by discussion or further analysis. RCTs published in Lancet and the New England Journal of Medicine (NEJM) during the same period served as controls.

Results

The total number of RCTs and the proportion with commercial funding decreased significantly in JAMA after July 2005. In contrast, NEJM published more RCTs, but the proportion with commercial funding did not change significantly; Lancet published the same number of RCTs, and its proportion of industry RCTs rose nonsignificantly over the same periods. An alternative categorization of funding sources, distinguishing total industry funding (IF) from industry support (IS; ie, supplying materials only) and joint industry/noncommercial funding (J), produced a less clear pattern: IF+J studies decreased significantly in JAMA, IS studies (and IF+IS studies) increased significantly in NEJM, and IF studies increased significantly in Lancet (Table 4).
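The before-and-after comparison of industry-funded proportions can be framed as a comparison of two proportions. The abstract does not state which test was used, and Table 4's counts are not reproduced here, so this is only a sketch: it assumes a pooled two-proportion z-test (one common choice) and uses HYPOTHETICAL counts, not the study's data. The function name `two_proportion_z_test` is illustrative.

```python
import math

def two_proportion_z_test(x1: int, n1: int, x2: int, n2: int) -> tuple[float, float]:
    """Pooled two-proportion z-test; returns (z, two-sided P value)."""
    p1, p2 = x1 / n1, x2 / n2
    # Pooled proportion under the null hypothesis that both periods share one rate.
    pooled = (x1 + x2) / (n1 + n2)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    z = (p1 - p2) / se
    # Two-sided P value from the standard normal distribution: 2 * (1 - Phi(|z|)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value

# HYPOTHETICAL counts for illustration only (not the study's data):
# industry-funded RCTs / all RCTs before and after a policy change.
z, p = two_proportion_z_test(60, 100, 40, 100)
print(f"z = {z:.2f}, two-sided P = {p:.4f}")
```

For these made-up counts (60/100 vs 40/100) the statistic is z ≈ 2.83. A Pearson chi-square test without continuity correction on the same 2 × 2 table is equivalent, since its statistic equals z squared.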

Table 4. Number of Industry-Funded Trials Published by JAMA Before and After the Requirement for Independent Statistical Analysis

a Studies with commercial funding

1 Committee on Publication Ethics (COPE); 2 Sideview, 19 Station Rd, Princes Risborough, HP27 9DE UK, e-mail: liz@sideview.demon.co.uk; 3 University of South Florida, Tampa, FL, USA

Conclusions

JAMA’s requirement for independent statistical analysis for industry-funded studies was associated with a change in the pattern of RCTs published. We cannot tell whether the policy affected the number of RCTs submitted, the acceptance rate, or both. The decrease in RCTs and commercial studies was not seen in the control journals.

What Ethical Issues Do Journal Editors Bring to COPE?

Sabine Kleinert1 and Elizabeth Wager2,3

Objective

The Committee on Publication Ethics (COPE) has provided a forum for journal editors to discuss troubling cases since 1997. The keywords assigned to cases were checked and recategorized in 2008 to ensure consistency of use. We analyzed the cases discussed at COPE to identify possible topic trends. In particular, we wondered whether the introduction of the COPE flowcharts in 2006 might be associated with a reduction in the number of straightforward cases.

Design

Analysis of cases on the COPE Web site from 1997 to 2008 according to their keywords (which are assigned by COPE).

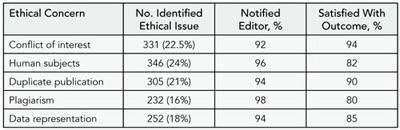

Results

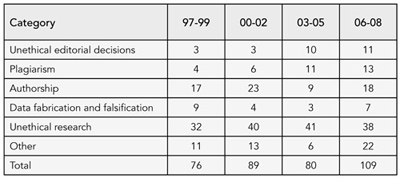

The database comprises 354 cases. The number of cases presented to COPE each year ranged from 15 to 42, with no clear time trend (eg, there were fewer cases in 2008 than in 2007). COPE membership increased steadily from 1997 to 2006 and then more dramatically during 2007 and 2008. The only categories of cases that appeared to increase were plagiarism (perhaps because of increased awareness) and unethical editorial decisions (which have increased since COPE published its code of conduct, perhaps because editors are questioning their practices more). We found no evidence that the flowcharts had reduced the number of straightforward cases submitted (Table 5).

Table 5. COPE Cases by Category, 1997-2008

Conclusions

The numbers in each category are small, so they do not warrant statistical analysis and should be interpreted with caution. Apart from plagiarism and unethical editorial decisions, which appear to have increased, we observed no clear patterns in the types of problems presented to COPE since 1997.

1Lancet, London, UK; 2Committee on Publication Ethics (COPE); 3Sideview, 19 Station Rd, Princes Risborough, HP27 9DE UK, e-mail: liz@sideview.demon.co.uk

FRIDAY, SEPTEMBER 11

Publication Pathways

Predictors of Time to Publication of Manuscripts Rejected by Major Biomedical Journals

Kirby Lee,1 Nicholas Lehman,2 I’Alla Brodie,3 and Lisa Bero1

Objective

To evaluate publication rates and predictors of time to publication for manuscripts rejected by major biomedical journals.

Design

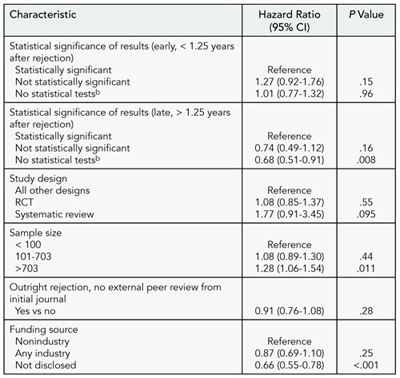

Cohort study of 1008 manuscripts reporting original research submitted for publication and subsequently rejected at 3 major biomedical journals (BMJ, Lancet, and Annals of Internal Medicine) during 2003-2004. Our main predictor of publication was statistical significance of results (P < .05) reported for the primary outcome. We also abstracted manuscript characteristics including study design, sample size, funding source, and whether the manuscript was outright rejected vs rejected after external peer review. The primary outcome of our study was subsequent publication of the rejected manuscripts. We determined publication status and time from rejection to publication in the medical literature by searching PubMed, Cochrane Library, and the Cumulative Index for Nursing and Allied Health Literature through June 30, 2008 (minimum follow-up time of 4.3 years). Predictors of time to publication were analyzed using multivariable Cox proportional hazards regressions. All analyses were planned a priori.

Results

Seventy-six percent (767) of manuscripts were published (median, 1.25 years; range, 0.01-5.32 years). The majority of manuscripts were published in specialty journals (85%, 654/767) with lower impact factors than the original rejecting journal. Manuscripts were more likely to be published if they had larger sample sizes and less likely if they did not disclose a funding source or did not report results using statistical tests for comparisons, particularly more than 1.25 years after initial rejection (Table 6).

Conclusions

A quarter of manuscripts initially rejected by major biomedical journals remained unpublished. Although some methodological characteristics and disclosing the funding source were associated with publication, articles with statistically significant results were not more likely to be published.

1University of California, San Francisco, Clinical Pharmacy, 3333 California St, Suite 420, San Francisco, CA 94118, USA, e-mail: leek@pharmacy.ucsf.edu; 2Colby College, Waterville, ME, USA; 3San Diego State University, San Diego, CA, USA

Table 6. Multivariable Predictors of Time to Publication (N = 902)

a 106 manuscripts did not report a sample size. Because of nonproportional hazards, the effect of statistical significance of results was estimated separately for early and late time periods.

b Studies reporting no formal statistical tests, descriptive statistics only, or qualitative research.

Publication of Research Reports After Rejection by the New England Journal of Medicine in 2 Time Periods

Michael Bretthauer, Pam Miller, Edward W. Campion, and Jeff Drazen

Objective

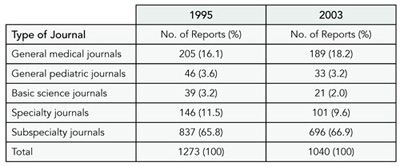

In the past 2 decades, the number of peer-reviewed medical journals that publish original research reports has increased. We compared the subsequent publication of original research manuscripts rejected in 1995 and in 2003 by a major general medical journal, the New England Journal of Medicine, which has an acceptance rate of less than 10%.

Design

All original research manuscripts rejected after external peer review at the index journal during the calendar years 1995 and 2003 were identified from our electronic databases. All manuscripts were tracked for subsequent publication in a medical or scientific journal by searching PubMed for similar titles and authors. Searches for the 1995 manuscripts were performed between November 25, 2008, and January 30, 2009; searches for the 2003 manuscripts were completed in the first week of February 2009. Descriptive statistics were computed using SPSS 15.0.

Results

Of 1423 manuscripts rejected in 1995, we identified 1273 that were subsequently published (89.5%), as compared to 1040 of 1205 (86.3%) for those in 2003. The manuscripts were published in 384 different journals in 1995, as compared to 319 in 2003. The median time from rejection by the index journal to eventual publication in another journal was 437 days (interquartile range [IQR]: 313-652 days) in 1995 vs 398 days (IQR: 286-564 days) in 2003. Table 7 shows the 5 types of journals that published the papers after rejection at the index journal for the 2 time periods.
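The reported percentages follow directly from the counts. The short check below recomputes them, along with the change in median delay; it only restates the abstract's own numbers and does not test whether either difference is statistically significant.

```python
# Counts reported in the abstract: (published elsewhere, rejected after peer review).
cohorts = {1995: (1273, 1423), 2003: (1040, 1205)}
# Median days from rejection to publication elsewhere, as reported.
median_delay_days = {1995: 437, 2003: 398}

rates = {year: published / rejected for year, (published, rejected) in cohorts.items()}
for year, rate in rates.items():
    print(f"{year}: {rate:.1%} published elsewhere, median delay {median_delay_days[year]} days")

# Absolute change between the two periods.
diff_pp = (rates[1995] - rates[2003]) * 100  # percentage points
delay_change = median_delay_days[1995] - median_delay_days[2003]  # days
print(f"Publication rate fell by {diff_pp:.1f} percentage points; "
      f"median delay fell by {delay_change} days")
```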

Table 7. Eventual Publication by Other Journals After Initial Rejection

Conclusions

The vast majority of research manuscripts that are rejected by a general medical journal after peer review are published elsewhere. However, there is substantial delay in this process. The time from rejection at the index journal to publication elsewhere declined only slightly from 1995 to 2003.

New England Journal of Medicine, 10 Shattuck St, Boston, MA 02115-6094, USA; e-mail: mbretthauer@nejm.org

An Evaluation of Time to Publication for Randomized Trials Submitted to the BMJ

Sara Schroter,1 Douglas G. Altman,2 and John P. A. Ioannidis3

Objective

To evaluate the publication fate of randomized controlled trials (RCTs) submitted to the BMJ and to understand how many and which trials remain unpublished a long time after submission.

Design

We evaluated all 660 reports of RCTs submitted to the BMJ between January 1, 1998, and December 31, 2001. We identified the published articles or, if we could not locate any published reports, we contacted the authors.

Results

We found that 602 of 660 RCTs (91%) were published: 150 (23%) in the BMJ and 452 (68%) in other journals. All except 6 of the trials published elsewhere appeared in journals with a lower impact factor than the BMJ (median impact factor, 2.13; interquartile range [IQR], 1.53-3.03). The median time from submission to publication was 1.36 years (IQR, 0.89-2.15), or 1.30 years (IQR, 0.87-1.91) excluding the 40 unpublished trials. About 25% of the RCTs submitted to the BMJ remained unpublished for 2 or more years after submission. Excluding the BMJ-published papers, a higher impact factor was associated with more rapid publication (hazard ratio [HR], 1.08 per point; P = .002). Unpublished trials were significantly less likely than published trials to go to external review, to have external reviews returned, and to reach the BMJ editorial “hanging” committee, but they did not differ significantly in country of origin. Of the 18 unpublished trials whose authors replied to our survey, 3 authors were still trying to get their paper published, at least half of the trials were said to have significant results, 3 authors said that negative results were perceived as a main reason for nonpublication, and 8 were discouraged by rejections.

Conclusions

Results of all RCTs should be publicly available because they are level I evidence. The large majority of trial reports submitted to the BMJ are eventually published, but about 25% remained unpublished after 2 years. Among those remaining unpublished, negative results are not perceived as a prime reason for failure to publish.

1BMJ Editorial, BMA House, Tavistock Square, London WC1H 9JR, UK, e-mail: sschroter@bmj.com; 2Centre for Statistics in Medicine, Wolfson College Annexe, Oxford, UK; 3Department of Hygiene and Epidemiology, University of Ioannina School of Medicine, Ioannina, Greece

Complexity of Peer Review Evaluations: Twelve-Year Prospective Study on 424 Submitted Papers to a Specialized Journal

Francine Kauffmann,1,2 Klaus Rabe,3 Béatrice Faraldo,1,2 Hélène Tharrault,1,2 Jean Maccario,1,2 and Alan R. Leff4

Objective

To classify reviewer scores and assess whether scores predict the career of a manuscript, beyond the initial decision of acceptance or rejection.

Design

Included manuscripts were edited by 1 associate editor between 1994 and 1999. Subsequent publication of rejected manuscripts was searched through December 2008. For all published papers, citations were assessed from the publication date through December 2008. Outcomes studied were publication (in the initial journal [n = 173] or another journal [n = 187]), impact factor for the year of publication, and citations (Web of Science). Reviewer scores (1-5) for “originality,” “scientific importance,” “adequacy of methods,” “brevity/clarity,” and “adequacy of interpretation” were analyzed as predictors. Analyses of citation rates (geometric mean, 26; range, 1-441), conducted on 329 papers, were adjusted for duration of follow-up after publication (9.9±1.6 years; P < .001) and impact factor (66 journals, 3.6±1.7; P < .0001).
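Citation counts are strongly right-skewed, which is presumably why the abstract summarizes them with a geometric mean. The geometric mean is the exponential of the mean of the log values, so it is defined only for positive counts (the reported range starts at 1). A minimal sketch with HYPOTHETICAL counts; the helper `geometric_mean` is illustrative and is cross-checked against Python's `statistics.geometric_mean`.

```python
import math
import statistics

def geometric_mean(values: list[float]) -> float:
    """Return exp(mean(log x)); defined only for strictly positive values."""
    if not values or any(v <= 0 for v in values):
        raise ValueError("geometric mean requires a non-empty list of positive values")
    return math.exp(sum(math.log(v) for v in values) / len(values))

# HYPOTHETICAL citation counts (not the study's data), skewed like real citation data.
citations = [1, 10, 100]
print(f"arithmetic mean = {statistics.mean(citations):.1f}")  # pulled up by the largest count
print(f"geometric mean  = {geometric_mean(citations):.1f}")
```

For these three values the arithmetic mean is 37.0 but the geometric mean is 10.0, showing how a single large count dominates the arithmetic mean.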

Results

Principal component analysis of reviewer scores separated “science” (methods, interpretation) from “journalism” (originality, importance). Two hundred fifty-one papers were rejected (43 with no reviewer recommending rejection) and 173 were accepted (13 with a reject recommendation from 1 reviewer). Because all scores were highly predictive of acceptance or rejection, tree discriminant analysis was performed: “interpretation” was the first criterion, followed by “methods” and “scientific importance.” Discriminant analyses showed that eventual publication after rejection (75%) was driven by “originality,” and early (within 2 years after rejection, n = 140 [75%]) vs late publication by “methods.” The reviewer “scientific importance” score was the major determinant of all citations (P = .01), of citations in the first 2 years (P < .01), and of citations after that (P = .02). In the first 2 years, poor “interpretation” increased citations (P = .03). Results were similar when the analysis was restricted to the 173 accepted papers.

Conclusions

Peer review evaluation can capture various aspects of submitted papers, which differentially predict acceptance, publication of rejected papers, and citation rates. This issue is relevant for authors, reviewers, editors, and readers.

1INSERM U780, 16 avenue PV Couturier, Villejuif, 94807 France, e-mail: francine.kauffmann@inserm.fr; 2Université Paris-Sud, IFR69, Villejuif, France; 3Department of Pneumology, Leiden University Medical Center, Leiden, the Netherlands; 4Department of Medicine, University of Chicago, Chicago, IL, USA

Publication Bias

Testing for the Presence of Positive-Outcome Bias in Peer Review: A Randomized Controlled Trial

Gwendolyn B. Emerson,1 Richard A. Brand,2 James D. Heckman,3 Winston J. Warme,1 Fredric M. Wolf,4 and Seth S. Leopold1

Objective

To the extent that positive-outcome bias exists, it risks undermining evidence-based medicine. We conducted a randomized trial with a stratified block design to test the hypothesis that a significantly greater percentage of peer reviewers for 2 orthopedic journals would recommend publication of a “positive” outcome report than of a “no-difference” outcome report of an otherwise identical fabricated randomized controlled trial (RCT).

Design

We fabricated 2 versions (“positive” and “no difference”) of a well-designed, CONSORT-conforming, amply powered, multi-institutional, blinded RCT including 3308 patients evaluating dosage and timing of perioperative antibiotics. The versions were identical except for the direction of the finding on the principal study endpoint (fewer surgical site infections in the “positive” version; no difference in surgical site infections in the “no-difference” version). Both versions were sent to peer reviewers by the Journal of Bone and Joint Surgery (JBJS) and Clinical Orthopaedics and Related Research (CORR). The 209 reviewers were randomly allocated to either the “positive” or the “no-difference” version, with randomization stratified by journal. Reviewers were informed that a study was ongoing but were told neither the research question nor that they were reviewing the “test” manuscript.

Results

At JBJS, the “positive” manuscript was significantly more likely to be recommended for publication than the “no-difference” manuscript (98% vs 71%; odds ratio [OR], 20.4; 95% confidence interval [CI], 2.6-161.7). At CORR, the difference was not statistically significant (97% of “positive” manuscripts recommended for publication vs 90% of “no difference” manuscripts; OR, 3.4; 95% CI, 0.6-18.2).
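The reported odds ratios can be approximately reconstructed from the recommendation rates by converting each percentage to odds, p / (1 - p), and dividing. This sketch uses only the rounded percentages in the abstract, so it will not reproduce the published ORs exactly; the study presumably computed them from the underlying counts, which are not given. The helper names are illustrative.

```python
def odds(p: float) -> float:
    """Convert a proportion (0 < p < 1) to odds."""
    return p / (1 - p)

def odds_ratio(p_exposed: float, p_unexposed: float) -> float:
    """Odds ratio comparing two proportions."""
    return odds(p_exposed) / odds(p_unexposed)

# Recommendation-for-publication rates from the abstract ("positive" vs "no difference").
print(f"JBJS: OR = {odds_ratio(0.98, 0.71):.1f}")  # reported OR: 20.4
print(f"CORR: OR = {odds_ratio(0.97, 0.90):.1f}")  # reported OR: 3.4
```

This gives about 20.0 for JBJS and 3.6 for CORR, close to the reported 20.4 and 3.4. Because odds grow very quickly as a proportion approaches 100%, small shifts near the ceiling (97%-98%) move the OR a great deal, which is consistent with the very wide confidence intervals reported.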

Conclusions

Results suggest positive-outcome bias is variably present during peer review of orthopedic manuscripts submitted for publication, as it was present for 1 journal but not the other. Many of the 209 reviewers have regularly reviewed for both journals; we speculate the difference between journals may relate to historical perceptions about the 2 journals. Journal editors may consider providing reviewers with more explicit guidelines for review of “no-difference” manuscripts.

1Department of Orthopaedics and Sports Medicine, University of Washington, Box 356500, 1959 NE Pacific St, Seattle, WA 98195, USA, e-mail: leopold@u.washington.edu; 2Clinical Orthopaedics and Related Research, Philadelphia, PA, USA; 3Journal of Bone and Joint Surgery, Needham, MA, USA; 4Department of Medical Education and Biomedical Informatics, University of Washington, Seattle, WA, USA

Composite Outcomes Can Be Misleading

Gloria Cordoba,1 Lisa Schwartz,2 Steven Woloshin,2 Harold Bae,2 and Peter Gøtzsche1

Objective

A composite outcome combines several individual outcomes into 1 main outcome. It increases statistical power but can be misleading since the result may be driven by less important components. We compared the composite outcome to the most clinically important outcome.

Design

Systematic review of parallel-group randomized clinical trials published in 2008 reporting a binary composite primary outcome. Two independent coders abstracted the data and a third observer, blinded to the results, selected the most important component.

Results

Of 43 eligible trials, 36 were included (3 were excluded because no component was clearly most important, and 4 because of insufficient or inconsistent data). Twenty-seven trials (75%) addressed cardiovascular topics, and 25 trials (69%) were entirely or partly industry funded. Composite outcomes had a median of 3 components (range, 2-9). Death (or cardiovascular death) was the most important component in 30 trials. There were 15,531 events (14% of patients) for the composite outcome and 4,513 (4%) for the most important component. The point estimate of the risk ratio for the composite outcome was as often lower as it was higher than that for the most important component, and it differed from it by more than 20% in 14 trials (39%). Statistically significant results were reported for only the composite outcome in 11 trials; for only the most important outcome (death or cardiovascular death) in 2 trials; and for both, but in opposite directions, in 1 trial, in which the effect was beneficial for the composite of death or nonfatal myocardial infarction and harmful for death.
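The comparison between a composite outcome and its most important component can be sketched as two risk ratios computed from event counts in each arm. The abstract does not define how “differed by more than 20%” was measured; this sketch ASSUMES the criterion |RR_composite / RR_component - 1| > 0.20. All counts are HYPOTHETICAL and mimic the pattern described above, in which the composite is driven by a less important component; the function names are illustrative.

```python
def risk_ratio(events_tx: int, n_tx: int, events_ctrl: int, n_ctrl: int) -> float:
    """Risk in the treatment arm divided by risk in the control arm."""
    return (events_tx / n_tx) / (events_ctrl / n_ctrl)

def differs_by_more_than(rr_a: float, rr_b: float, threshold: float = 0.20) -> bool:
    """ASSUMED criterion: relative difference between two risk ratios exceeds threshold."""
    return abs(rr_a / rr_b - 1) > threshold

# HYPOTHETICAL trial with 1000 patients per arm (not data from the review).
# Composite = death or nonfatal myocardial infarction; most important component = death.
rr_composite = risk_ratio(120, 1000, 160, 1000)  # benefit driven by fewer nonfatal MIs
rr_death = risk_ratio(40, 1000, 40, 1000)        # no effect on mortality
print(f"RR composite = {rr_composite:.2f}, RR death = {rr_death:.2f}")
print("Differs by >20%:", differs_by_more_than(rr_composite, rr_death))
```

Here the composite suggests a 25% relative risk reduction (RR 0.75) while mortality is unchanged (RR 1.00), the kind of discrepancy the review describes.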

Conclusions

Composite outcomes may exaggerate perceptions of how well interventions work and, conversely, can hide effects on mortality. The pivotal assumption that the effect of the intervention should be similar for each of the components is often not met.

1Nordic Cochrane Centre, Rigshospitalet, Blegdamsvej 9, Dept 7112, DK-2100 Copenhagen, Denmark, e-mail: pcg@cochrane.dk; 2Dartmouth Institute for Health Policy, Dartmouth Medical School, Hanover, NH, USA

Reporting Biases and Publication Strategy for Off-Label Indications: A Potent Mix

S. Swaroop Vedula,1 Ilyas Rona,2 Palko Goldman,2 Thomas Greene,2 and Kay Dickersin1

Objective

To describe reporting practices in clinical trials sponsored by Parke-Davis/Pfizer in the context of their use of a “publication strategy” to market gabapentin for off-label indications.

Design

One of us (K.D.) was provided with internal company documents by plaintiffs’ lawyers as part of legal action against Pfizer. We compared protocols with internal research reports and publications to identify reporting biases. We also examined internal company marketing assessments to identify whether a “publication strategy” or an “indication strategy” (ie, obtaining Food and Drug Administration approval) was recommended. One author (S.V.) extracted data relevant to reporting biases, and another (K.D.) verified them. In August 2008, K.D. signed an agreement to be bound by a protective order entered in pending litigation against Pfizer, which limits disclosure of confidential discovered information unless such information is ordered unsealed by the court or the claim of confidentiality is waived by the claiming party. Through communications between August and October 2008 with counsel involved in the litigation, Pfizer agreed to waive any confidentiality claims concerning documents reviewed as part of K.D.’s expert report. As a result, all of the documents reviewed for this study have had their confidentiality claims waived.

Results

We examined 20 clinical trials of gabapentin for 4 off-label indications included as part of the legal action: migraine prophylaxis (n = 3), bipolar disorders (n = 3), neuropathic pain (n = 8), and nociceptive pain (n = 6). Marketing assessments for 3 indications recommended a “publication strategy,” and the fourth recommended multiple strategies. Each trial was associated with 1 or more reporting biases, including failure to publish efficacy results in full (11/20). In the 12 published trials, we also observed selective publication of primary (7/12) and secondary (11/12) outcomes, analyses in selected populations (5/12), possible ghost authorship (3/12), citation bias (5/12), possible time-lag bias (5/12), and a positive “spin” of the findings (8/12) (ie, a discrepancy between results and conclusions).

Conclusions

Combining “publication strategy,” used as a marketing tool, with biased reporting of results represents a potent mix that can create a false perception of a drug’s efficacy. We propose that existence of a publication strategy should be revealed by the trial sponsor to trial participants, investigators, editors, and peer reviewers at appropriate time points, and publicly at trial registration.

1Johns Hopkins Bloomberg School of Public Health, Mailroom W5010, 615 N Wolfe St, Baltimore, MD 21205, USA, e-mail: svedula@jhsph.edu; 2Greene LLP, Boston, MA, USA

Rhetoric

“Spin” in Reports of Randomized Controlled Trials With Nonstatistically Significant Primary Outcomes

Isabelle Boutron,1,3 Susan Dutton,1 Philippe Ravaud,2 and Douglas G. Altman1

Objective

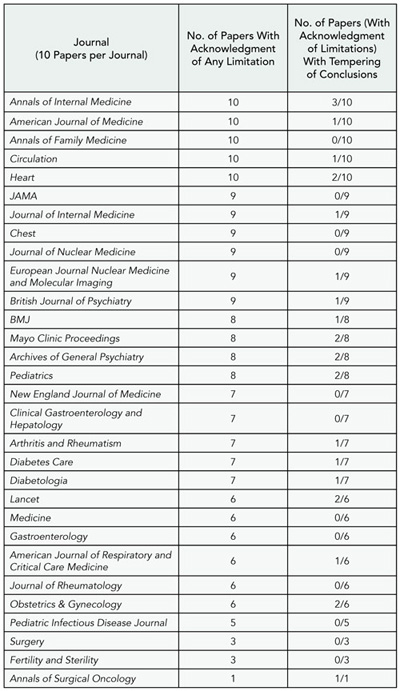

The objectives of this study were to identify the nature and frequency of “spin” (ie, manipulation of the content and rhetoric of reporting to convince the reader of the likely truth of a result) in published reports of randomized controlled trials (RCTs) with nonstatistically significant primary outcome(s).

Design

The Cochrane Highly Sensitive Search Strategy was used to identify reports of RCTs with a primary publication indexed in PubMed in December 2006. Articles were included if the study was a 2-arm RCT with a clearly identified primary outcome that was not statistically significant (ie, P ≥ .05). To systematically evaluate “spin,” 2 readers appraised each selected article using a standardized data abstraction form that had been developed and pretested in pilot testing on another sample. We used the following preliminary classification scheme to assess whether, in the main text and abstract, authors (1) interpreted nonstatistically significant outcomes as if the trial were an equivalence trial; (2) emphasized nonstatistically significant outcomes showing a benefit of treatment (linguistic “spin” or other methods); (3) focused on other statistically significant results, such as within-group comparisons, secondary outcomes, subgroup analyses, and modified population analyses; and (4) overinterpreted safety. Any other strategies used to influence readers were also recorded. All discrepancies were discussed to reach consensus, and a third reader resolved any remaining disagreements.

Results

Of the 1735 PubMed citations retrieved, 616 reports of RCTs were selected based on review of the title and abstract. After screening of the full text, 74 reports of 2-arm parallel-group trials with a clearly identified, nonstatistically significant primary outcome were selected. The full results will be presented at the Congress.

Conclusions

These results will provide information on the prevalence and nature of “spin” in a representative sample of published reports of RCTs with nonstatistically significant outcomes.

1Centre for Statistics in Medicine, University of Oxford, Oxford, UK; 2Hôpital Bichat, Paris, France; 3Groupe Hospitalier Bichat-Claude Bernard, Departement d’Epidemiologie, 46 rue Henri Huchard, Paris, Cedex 18 75877 France, e-mail: isabelle.boutron@bch.ap-hop-paris.fr

Rhetoric Used in Reporting Research Results

Lisa Bero and Yolanda Cheng

Objective

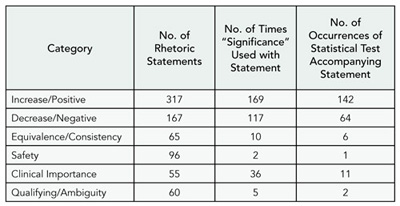

To identify rhetoric used to frame research results reported in drug studies and to determine if statistically significant numerical data support the claims about a drug.

Design

In this observational study, we evaluated 35 published randomized controlled trials for language about a drug’s effect(s). The 35 articles, from 24 journals, reported trials that had been submitted in New Drug Applications (NDAs) and whose published conclusions differed from those of the NDA trial report in a direction that favored the drug. For each publication, we assessed the results and conclusions sections for rhetoric suggesting that the drug was more effective or safer than the comparator. An initial set of keywords (eg, significance, statistical, clinical, more, less, effective, safe/well-tolerated) guided the extraction of rhetoric. Additionally, the accompanying statistical result (eg, P value, 95% confidence interval) was recorded if it was presented in the text, tables, or figures. The rhetoric statements were grouped into 6 categories of effect: increase/positive, decrease/negative, equivalence/consistency, safety, clinical importance, and qualifying/ambiguity. For each statement, we noted whether it was accompanied by the term “significance” and by a statistical test.

Results

The articles were published between 1993 and 2004, with 30 of 35 published after 2000. Seven of the articles had no sponsorship statement. Three of the 24 journals had an impact factor greater than 10 (range, 0-17.6). From the 35 papers assessed, 695 rhetoric statements were extracted. Forty-nine percent (338/695) of the statements of effect were not accompanied by any mention of a statistically significant result. Fifty-one percent (357/695) of the rhetoric statements included the term “significance,” and 72% of these (258/357) were supported by a statistical test result. The majority of the rhetoric statements were classified as increase/positive effect statements, with 84% (142/169) of them having an associated statistical test. Rhetoric regarding the safety of a drug was rarely supported by a statistical result (Table 8).
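The percentages reported above can be rechecked from the stated counts. The minimal Python sketch below recomputes each one from its numerator and denominator and compares it with the reported whole-number percentage; rounding half up is an assumption, since the abstract does not state a rounding rule.

```python
# (numerator, denominator, reported whole-number percentage) from the Results above
reported = {
    "no statistically significant result mentioned": (338, 695, 49),
    "term 'significance' used": (357, 695, 51),
    "'significance' statements with a statistical test": (258, 357, 72),
    "increase/positive statements with a statistical test": (142, 169, 84),
}


def percent(numerator: int, denominator: int) -> int:
    """Return numerator/denominator as a whole-number percentage, rounding half up."""
    return int(100 * numerator / denominator + 0.5)


for label, (num, den, stated) in reported.items():
    computed = percent(num, den)
    status = "matches" if computed == stated else "differs from"
    print(f"{label}: {num}/{den} -> {computed}% ({status} reported {stated}%)")

# The first two groups together account for all 695 statements.
print(338 + 357 == 695)  # True
```

All four recomputed values agree with the reported percentages.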

Table 8. Categorization of Rhetoric Statements

Conclusions

Rhetoric used to frame research results in drug studies overstates the effectiveness of a drug. A limitation of our study is that we did not obtain original data and conduct our own statistical analysis. The text of results and conclusions sections should align more closely with the numerical results.

Department of Clinical Pharmacy, University of California, San Francisco, 3333 California St, Suite 420, Box 0613, San Francisco, CA 94118, USA, e-mail: berol@pharmacy.ucsf.edu

A Propaganda Index for Screening Manuscripts and Articles

Eileen Gambrill

Objective

The propaganda index is designed to serve as a complement to methodological filters such as CONSORT in reviewing manuscripts and published literature. Propaganda about the problems these reports address promotes the medicalization of behaviors and feelings and hinders empirical investigation of well-argued alternative views. Predictions were as follows: (1) reading a definition of propaganda will not facilitate propaganda-spotting skills, (2) use of a propaganda index will facilitate this task, and (3) methodological quality is not correlated with a measure of propaganda.

Method

A propaganda index consisting of 32 items was created based on the related literature. Items concerned medicalization, use of vague terms, lack of documentation for claims made, and hiding controversy. Twenty articles describing randomized controlled trials (RCTs) regarding social anxiety were selected via an Internet search. Twenty PhD-level consumers of the literature were asked to read 5 articles, with authors’ names and journal titles removed, and to identify propaganda using the definition of propaganda as “encouraging beliefs and actions with the least thought possible.” They then applied the index to each article and returned their ratings. One week later, they were asked to rate the same 5 articles again using the index.

Results

Review of the 5 articles by the author identified a high rate of propaganda: 78 of 110 opportunities. This review served as the criterion. Preliminary results for 8 participants showed that, before using the index, they detected between 0 and 17 indicators across all 5 articles (average, 5). Percentage agreement of participants with the criterion ratings on the propaganda index across all 5 articles ranged from 57% to 88% (average, 74%). Further data regarding the predictions will be presented at the conference.
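Percentage agreement, as used above, is the share of index items on which a participant’s rating matches the criterion rating. A minimal Python sketch, assuming each index item is rated simply as present or absent (the abstract does not specify the rating scale); the ratings below are hypothetical, not study data.

```python
def percent_agreement(participant: list[bool], criterion: list[bool]) -> float:
    """Percentage of index items on which a participant's rating equals the criterion rating."""
    if not criterion or len(participant) != len(criterion):
        raise ValueError("ratings must be non-empty and of equal length")
    matches = sum(p == c for p, c in zip(participant, criterion))
    return 100 * matches / len(criterion)


# Hypothetical ratings for 10 index items (True = propaganda indicator present)
criterion = [True, True, False, True, False, True, True, False, True, True]
participant = [True, False, False, True, False, True, False, False, True, True]
print(percent_agreement(participant, criterion))  # 80.0

# The criterion rate reported above, 78 of 110 opportunities, as a percentage
print(round(100 * 78 / 110, 1))  # 70.9
```

Simple percentage agreement does not correct for chance agreement; a chance-corrected statistic such as Cohen’s kappa would be an alternative.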

Conclusions

Preliminary data suggest that, without prompting, many forms of propaganda remain undetected in reports of RCTs and that detection can be increased by use of a propaganda index.

University of California at Berkeley, School of Social Welfare, 120 Haviland Hall #7400, Berkeley, CA 94720-7400, USA, e-mail: gambrill@berkeley.edu

Trial Registration

Frequency and Nature of Changes in Primary Outcome Measures

Deborah A. Zarin, Tony Tse, and Rebecca J. Williams

Objective

Prespecification of outcome measures forms the basis of most statistical analyses of clinical trials. Trial registration allows for the tracking of changes to outcome measures from study initiation to eventual publication. Changes may occur any time after study initiation, though there is no standard for distinguishing important vs unimportant changes. The objective of this study was to determine the frequency and type of changes in primary outcome measures (POMs) between entries in ClinicalTrials.gov and associated publications and between initial and current registry entries.

Design

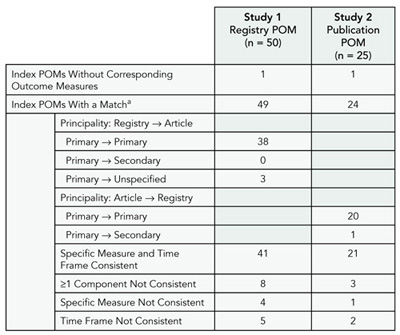

We identified 75 sequential MEDLINE citations with ClinicalTrials.gov entries, generating “registry-publication pairs.” Study 1 (50 pairs) compared the registry POM to outcomes in the publication. Study 2 (25 pairs) compared the publication POM to the registered outcomes. Study 3 examined changes over time for all 75 registry POMs. Each outcome measure was coded for “principality” (primary, secondary, or unspecified), domain (eg, depression), specific measure (eg, HAM-D), and time frame. Pairs were considered matches if the domain was the same. If either the specific measure or the time frame was not consistent, pairs were considered “substantively different.”
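The matching rules above amount to a small decision procedure. The Python sketch below encodes them under two assumptions not stated in the abstract: coded fields are compared as exact strings, and a field left unspecified in either entry is not counted as an inconsistency. The class, field, and function names are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class OutcomeMeasure:
    """A coded outcome measure; field names are hypothetical, not a registry schema."""
    domain: str                             # eg, "depression"
    specific_measure: Optional[str] = None  # eg, "HAM-D"; None if unspecified
    time_frame: Optional[str] = None        # eg, "8 weeks"; None if unspecified


def conflicts(a: Optional[str], b: Optional[str]) -> bool:
    """Assumed rule: two fields conflict only when both are specified and differ."""
    return a is not None and b is not None and a != b


def classify_pair(registry: OutcomeMeasure, publication: OutcomeMeasure) -> str:
    """Classify a registry-publication POM pair using the rules described above."""
    if registry.domain != publication.domain:
        return "no match"
    if conflicts(registry.specific_measure, publication.specific_measure) or conflicts(
        registry.time_frame, publication.time_frame
    ):
        return "substantively different"
    return "consistent"


# Hypothetical example: same domain and scale, different time frame
reg = OutcomeMeasure("depression", "HAM-D", "8 weeks")
pub = OutcomeMeasure("depression", "HAM-D", "12 weeks")
print(classify_pair(reg, pub))  # substantively different
```

Under the second assumption, a vague registry entry can never be flagged as inconsistent, which parallels the detection limit noted in the Conclusions; a stricter variant would treat a missing field as a conflict.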

Results

Sixty-two of the 75 registry-publication pairs were consistent, although differences in the level of specificity within pairs were observed (Table 9). One publication POM had been registered as a secondary outcome measure. Eight of 50 Study 1 pairs and 3 of 25 Study 2 pairs had substantive differences. Six of 75 Study 3 POMs changed substantively after initial registration. Forty-eight of the 75 ClinicalTrials.gov entries were initially registered more than 3 months after the study start date, with some delayed by years.

Table 9. Results of 2 Studies Characterizing Primary Outcome Measures (POMs)

a Two POMs were considered a match if they had the same domain (eg, pain).

Conclusions

The taxonomy enabled us to categorize POM pairs. Most POM pairs were consistent based on our criteria. Our ability to detect inconsistencies was limited at times by vague registry entries or substantially delayed initial registrations. This taxonomy could be used to develop consensus criteria for tracking and communicating outcome measure changes.

National Library of Medicine, National Institutes of Health, 8600 Rockville Pike, Bldg 38A, Room 75705, Bethesda, MD 20892, USA, e-mail: dzarin@mail.nih.gov

Trial Registration Can Be a Useful Source of Information for Quality Assessment: A Study of Randomized Trial Records Retrieved From the World Health Organization Search Portal

Ludovic Reveiz,1 An-Wen Chan,2 Karmela Krleža-Jerić,3 Carlos Granados,4 Mariona Pinart,5 Itziar Etxeandia,6 Diego Rada,7 Monserrat Martinez,8 and Andres Felipe Cardona9

Objective

We evaluated empirically whether trial registries provide useful information to evaluate the quality of randomized controlled trials (RCTs).

Methods

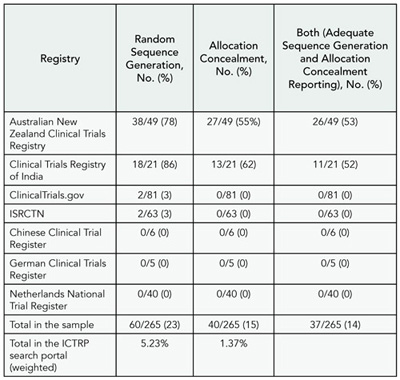

We compared methodological characteristics of a random sample of ongoing RCTs registered in 6 World Health Organization (WHO) primary registries and ClinicalTrials.gov in 2008. Because 90% of trials came from ClinicalTrials.gov, we ensured adequate representation across registries by including a representative sample from each registry. We assessed the reporting of relevant domains from the Cochrane Collaboration’s “Risk of Bias” tool and other key methodological aspects. Two reviewers independently assessed each record.

Results

A random sample of records of actively recruiting RCTs was retrieved from 7 registries using the WHO International Clinical Trials Registry Platform (ICTRP) search portal. Weighted overall proportions in the ICTRP search portal for adequate reporting of sequence generation, allocation concealment, and blinding (patient-reported outcomes and objective outcomes) were 5.23% (95% confidence interval [CI], 2.55%-7.91%), 1.37% (95% CI, 0%-2.76%), 8.58% (95% CI, 5.8%-11.36%), and 8.69% (95% CI, 5.29%-12.09%), respectively. Most items had insufficient or no information to permit judgment (Table 10). Significant differences in the proportion of adequately reported RCTs were found between registries that had specific methodological fields for describing methods of randomization and allocation concealment and registries that did not (random sequence generation, 74% vs 2%, P < .001; allocation concealment, 53% vs 0%, P < .001). For other key methodological aspects, the weighted overall proportions of RCTs with adequately reported items were as follows: eligibility criteria (81%), primary outcomes (66%), secondary outcomes (46%), follow-up duration (62%), description of the interventions (53%), and sample size calculation (1%). Final results will be presented at the Congress.
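The abstract does not give the formula behind its weighted proportions. One standard approach for a sample stratified by registry is a weighted (stratified) proportion, with each registry weighted by its share of all registered trials, together with a normal-approximation (Wald) 95% interval truncated at 0. The Python sketch below assumes this method; the weights and counts in it are hypothetical, not study data. The reported interval for allocation concealment, 1.37% (0%-2.76%), is consistent with such truncation.

```python
import math


def weighted_proportion(strata, z=1.96):
    """Stratified proportion with a Wald interval truncated to [0, 1].

    strata: list of (population_weight, n_sampled, n_adequate) tuples, one per
    registry; population weights must sum to 1 (an assumed weighting scheme).
    """
    if not math.isclose(sum(w for w, _, _ in strata), 1.0):
        raise ValueError("population weights must sum to 1")
    estimate = 0.0
    variance = 0.0
    for weight, n, adequate in strata:
        p = adequate / n
        estimate += weight * p
        variance += weight**2 * p * (1 - p) / n  # no finite-population correction
    half_width = z * math.sqrt(variance)
    return estimate, max(0.0, estimate - half_width), min(1.0, estimate + half_width)


# Hypothetical data: one large registry without a dedicated randomization field,
# one small registry with such a field.
strata = [(0.9, 100, 2), (0.1, 50, 37)]
est, lower, upper = weighted_proportion(strata)
print(f"{100 * est:.2f}% (95% CI, {100 * lower:.2f}%-{100 * upper:.2f}%)")
```

Weighting by each registry’s share of trials keeps the oversampled small registries from dominating the overall estimate, which matters here because most trials came from a single registry.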

Table 10. Adequate Reporting of Sequence Generation and Allocation Concealment From the WHO ICTRP Search Portal From January 1 to December 12, 2008, by Source Registry

Conclusions

Registries with specific methodological fields obtained more relevant quality information than those with general or coded fields. Critical appraisal of RCTs should include a search for information on trial registries as a complement to journal publications. However, the usefulness of the information will vary across registries due to variable content.

1Research Institute, Sanitas University Foundation, Cochrane Collaboration Branch, Av Calle 127 #21–60 cons 221, Bogota, Colombia, e-mail: lreveiz@yahoo.com; 2Mayo Clinic, Rochester, MN, USA; 3Knowledge Synthesis and Exchange Branch, Canadian Institutes of Health Research, Ottawa, Ontario, Canada; 4Research Institute, National University of Colombia; 5Department of Dermatology Research Unit for Evidence-based Dermatology, Hospital Plató, Barcelona, Spain; 6Clinical Epidemiology Unit, Cruces Hospital, & Osteba-Basque Office for HTA, Department of Health-Basque Country, Spain; 7Department of Physiology, University of the Basque Country (UPV/EHU), Spain; 8Institut de Recerca Biomèdica de Lleida (IRBLLEIDA)-Universitat de Lleida, Catalonia, Spain; 9Grupo Oncología Médica, Instituto Catalán de Oncología, Hospital Universitario Germans Trias i Pujol, Badalona, Spain

Registration Completeness and Changes of Registered Data From ClinicalTrials.gov for Clinical Trials Published in ICMJE Journals After September 2005 Deadline for Mandatory Trial Registration

Mirjana Huic,1,2 Matko Marušić,2,3 and Ana Marušić2,3

Objective

After September 2005, journals represented in the International Committee of Medical Journal Editors (ICMJE) were to publish only clinical trials that had been registered in a timely and adequate manner in approved registries. We assessed how well ICMJE member journals followed their own registration policy.

Design

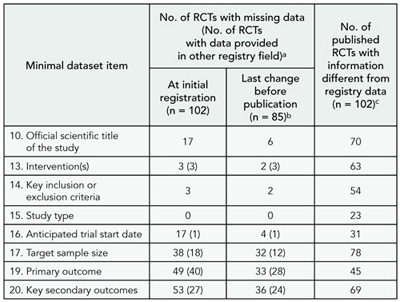

We identified all reports of clinical trials with a ClinicalTrials.gov registration number published by ICMJE journals from September 13, 2005, to April 24, 2008 (n = 438). For a random subset of 102 reports (Annals of Internal Medicine = 12/36, BMJ = 12/29, Croatian Medical Journal = 5/5, CMAJ = 1/1, JAMA = 16/100, Lancet = 14/88, New England Journal of Medicine = 42/179), we used the Archive section of ClinicalTrials.gov to analyze the completeness of the minimal registration data set and changes to the registration data elements relevant to the quality of trial reporting.
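The per-journal counts listed above can be checked against the stated totals (102 sampled of 438 identified). The short Python check below uses only those counts; it also shows that the sampling fraction differed across journals, from all reports in the two smallest journals to about 16% of JAMA and Lancet reports.

```python
# (sampled, identified) reports per journal, as listed in the Design above
journals = {
    "Annals of Internal Medicine": (12, 36),
    "BMJ": (12, 29),
    "Croatian Medical Journal": (5, 5),
    "CMAJ": (1, 1),
    "JAMA": (16, 100),
    "Lancet": (14, 88),
    "New England Journal of Medicine": (42, 179),
}

sampled = sum(s for s, _ in journals.values())
identified = sum(t for _, t in journals.values())
print(sampled, identified)  # 102 438

# Sampling fraction per journal
for name, (s, t) in journals.items():
    print(f"{name}: {s}/{t} = {100 * s / t:.0f}% sampled")
```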

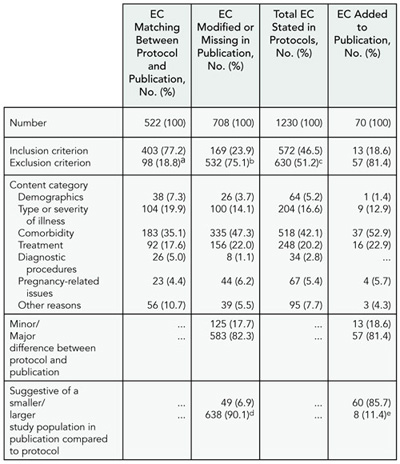

Results