2005 Abstracts

FRIDAY, SEPTEMBER 16

Authorship and Contributorship

In the Eye of the Beholder: Contribution Disclosure Practices and Inappropriate Authorship

Ana Marušić,1,2 Tamara Bates,2 Ante Anić,3 Vesna Ilakovac,4 and Matko Marušić,1,2

Objective

To determine the effects of the structure of contribution disclosure forms on the number of authors not meeting criteria of the International Committee of Medical Journal Editors (ICMJE) and to analyze authors’ contributions for the same article declared first by the corresponding author and then by individual authors.

Design

In a single-blind randomized controlled trial, 1462 authors of 332 manuscripts submitted to the Croatian Medical Journal were sent 3 different contribution disclosure forms: open-ended; categorical, with 11 possible contribution choices; and instructional, instructing how many contributions are needed to satisfy individual ICMJE criteria. The main outcome measure was the number of authors not satisfying ICMJE criteria (honorary authors). In a separate study, corresponding authors of 201 submitted articles, representing 919 authors, received contribution disclosure forms with 11 possible contribution choices to declare contributions of all authors. The same form was then sent to each individual author, including the corresponding author.
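The main outcome depends on scoring each author's declared contributions against the 3 ICMJE criteria of the time: substantial contribution to conception and design, or acquisition, analysis, or interpretation of data; drafting or critical revision; and final approval of the version to be published. A minimal Python sketch of that scoring follows. The contribution labels and the function names `missing_criteria` and `is_honorary` are hypothetical: the abstract does not list the 11 categories on the form or how they were mapped to the criteria.

```python
# Hypothetical contribution labels; the form's actual 11 categories are not given in the abstract.
CRITERIA = (
    # Criterion 1: conception/design, or acquisition, analysis, or interpretation of data
    {"conception and design", "acquisition of data", "analysis and interpretation of data"},
    # Criterion 2: drafting the article or revising it critically
    {"drafting the article", "critical revision"},
    # Criterion 3: final approval of the version to be published
    {"final approval"},
)


def missing_criteria(contributions):
    """Return the numbers (1-3) of the ICMJE criteria not met by the declared contributions."""
    declared = set(contributions)
    return [number for number, options in enumerate(CRITERIA, start=1)
            if declared.isdisjoint(options)]


def is_honorary(contributions):
    """An author counts as honorary if any of the 3 criteria is unmet."""
    return bool(missing_criteria(contributions))


# An author who designed the study and drafted the text but did not approve the final version
print(missing_criteria(["conception and design", "drafting the article"]))  # [3]
```

Such an author meets criteria 1 and 2 but not criterion 3, and so would be classified as honorary.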

Results

In the randomized trial, the group answering the instructional form had significantly fewer authors whose reported contributions did not satisfy ICMJE criteria (18.7%) than did groups answering categorical (62.8%) or open-ended (54.7%) forms (χ² = 210.8, df = 2, P < .001). All authors answering the open-ended form, regardless of their compliance with authorship criteria, reported significantly fewer contributions (median, 3; 95% confidence interval [CI], 3-3) than did authors responding to either the categorical (median, 4; 95% CI, 4-4; z score = –7.19; P < .001) or instructional forms (median, 4; 95% CI, 4-5; z score = –13.98; P < .001). Honorary authors answering instructional forms reported more contributions (median, 3; 95% CI, 3-3) than those answering either the categorical (median, 2; 95% CI, 2-3; z score = 2.76; P = .006) or open-ended forms (median, 2; 95% CI, 2-2; z score = 3.38; P < .001). The largest group of honorary authors (39.9%) lacked only the third ICMJE criterion (final approval of manuscript). In the second study, 201 (28.0%) of 718 noncorresponding authors met all 3 ICMJE criteria according to the corresponding authors’ statements, compared with 287 (40.0%) according to the individual authors’ own declarations (exact McNemar test, S1 = 48.7; P < .001). Disclosure forms filled out twice by corresponding authors for the same manuscript disagreed in 140 cases (69.3%).
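The comparison of 2 declarations for the same 718 authors is paired, which is why a McNemar test applies: only discordant authors (those meeting all criteria by one account but not the other) carry information. The sketch below computes a two-sided exact McNemar P value as a binomial test on the 2 discordant counts. The discordant counts are not reported in the abstract, so the example uses made-up numbers, the function name is hypothetical, and the sketch does not reproduce the reported statistic.

```python
from math import comb


def exact_mcnemar_p(b, c):
    """Two-sided exact McNemar P value.

    b: pairs positive by the first rating only; c: pairs positive by the second only.
    Under the null hypothesis each discordant pair is equally likely to fall in b or c,
    so min(b, c) is compared with a Binomial(b + c, 0.5) distribution.
    """
    n = b + c
    if n == 0:
        return 1.0  # no discordant pairs, so no evidence of a difference
    k = min(b, c)
    one_tail = sum(comb(n, i) for i in range(k + 1)) / 2 ** n
    return min(1.0, 2 * one_tail)


# Hypothetical discordant counts, for illustration only
print(exact_mcnemar_p(2, 12))
```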

Conclusions

The structure of contribution disclosure forms significantly influences the number of contributions reported by authors and their compliance with ICMJE authorship criteria. Discrepancies between what corresponding authors declared on 2 separate occasions about their own contributions to the same manuscript indicate that recall bias may be one of the factors confounding responsible authorship practices. Journal editors should be aware of the cognitive aspects of survey methodology when they design self-report instruments about behavior, such as contribution disclosure forms.

1Croatian Medical Journal, Zagreb, Croatia; 2Zagreb University School of Medicine, Salata 3, HR-10000 Zagreb, Croatia, e-mail: marusica@mef.hr; 3Holy Ghost General Hospital, Zagreb, Croatia; 4Josip Juraj Strossmayer University, School of Medicine, Osijek, Croatia

Declaration of Medical Writing Assistance in International, Peer-Reviewed Publications and Effect of Pharmaceutical Sponsorship

Karen Woolley,1,2 Julie Ely,2 Mark Woolley,2 Felicity Lynch,2 Jane McDonald,3 Leigh Findlay,2 and Yoonah Choi2

Objective

Medical writing assistance may improve manuscript quality and timeliness. Good Publication Practice Guidelines for pharmaceutical companies encourage authors to acknowledge medical writing assistance. The objectives of this study were to determine the proportion of articles from international, high-ranking, peer-reviewed journals that declared medical writing assistance and to explore the association between pharmaceutical sponsorship and medical writing assistance in terms of time to manuscript acceptance.

Design

The acknowledgment sections of 1000 original research articles were reviewed. The sample comprised 100 consecutive articles published up to January 2005 from each of 10 high-ranking (impact factor–based), international, peer-reviewed medical journals from different therapeutic areas. The proportion of articles declaring pharmaceutical sponsorship and medical writing assistance, and the time interval between manuscript submission and acceptance were calculated. Analysis of variance was used to explore associations between sponsorship, writing assistance, and manuscript acceptance time.

Results

Medical writing assistance was declared in only 6% of publications (n = 60). In the pharmaceutical-sponsored studies subset (n = 102 articles), assistance was declared in 10 articles (10%). Disclosure of medical writing assistance was associated with a shorter time to acceptance, although the difference was of borderline statistical significance (declared assistance: geometric mean, 83.6 days; vs no declaration: geometric mean, 132.2 days; relative difference (ratio of geometric means), 0.63; 95% confidence interval, 0.40-1.01; P = .053).
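Time-to-acceptance data tend to be right-skewed, which is presumably why geometric means are reported: averaging on the log scale and back-transforming turns a difference in mean logs into a ratio. The value of 0.63 reported above matches the ratio of the 2 geometric means (83.6/132.2). A short Python check follows; the helper `geometric_mean` is illustrative and not part of the study.

```python
import math


def geometric_mean(values):
    """exp(mean(log x)); all values must be positive."""
    logs = [math.log(v) for v in values]
    return math.exp(sum(logs) / len(logs))


# Reported geometric mean days from submission to acceptance
declared, not_declared = 83.6, 132.2

# A difference in mean log-days back-transforms to a ratio of geometric means
ratio = math.exp(math.log(declared) - math.log(not_declared))
print(f"ratio of geometric means: {ratio:.2f}")  # ratio of geometric means: 0.63
```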

Conclusions

Based on this 1000-article sample, the reported use of medical writing assistance appears low (6%). If used, medical writing assistance should be declared. For pharmaceutical-sponsored studies, the time to acceptance may be shorter when medical writers are used.

1University of Queensland, 8 Shipyard Circuit, Noosaville, Queensland 4566, Australia, e-mail: kw@proscribe.com.au; 2ProScribe Medical Communications, Queensland, Australia; 3ProScribe Medical Communications, Tokyo, Japan

Journal Guidelines and Policies

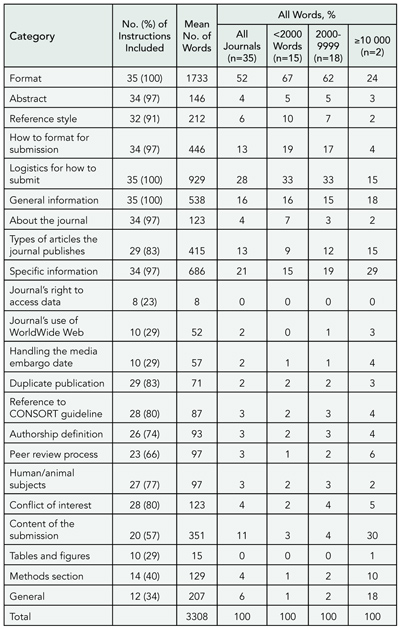

The Statistical and Methodological Content of Journals’ Instructions for Authors

Douglas G. Altman1 and David L. Schriger1,2

Objective

To characterize the methodological and statistical advice provided by the instructions for authors of major medical journals.

Design

Using citation impact factors for 2001, we identified the top 5 journals from each of 33 medical specialties and the top 15 from general and internal medicine that publish original clinical research. The final sample of 166 journals was obtained after examining 232 journals (some journals represent more than 1 specialty). We obtained the online instructions for authors for each journal between January and May 2003 and identified all material relating to 15 methodological and statistical topics. Assessments were performed by reading the instructions for authors and validated by text searches for relevant words.

Results

Fewer than half the journals provided any information on statistical methods (TABLE 1). General journals were more likely to refer to reporting guidelines but less likely to include advice about statistical issues. Few journals (13%) commented on the use of tables and figures. Those instructions that referenced methodology papers cited between 1 and 49 such papers (median, 1). There were many contradictions among instructions (eg, “Report actual P values, rather than ranges or limits” vs “Statistical probability (p) should be reported … at only one of the following levels p < 0.05, 0.01, 0.005, and 0.001” and “tables usually convey more precise numerical information; graphs should be reserved for highlighting changes over time or between treatments” vs “For presentation of data, figures are preferred to tables”). Other instructions were opaque (eg, “In general, statistical treatment of multiple experiments is required and is preferred to representative experiments; we prefer to see the SD unless sets of data from experiments or groups are pooled, in which case the SEM may be used”).

Conclusions

Journal instructions for authors provide little guidance regarding methodological and statistical issues and the advice provided may be unhelpful and at times contradictory.

Table 1. Journal Information for Authors on Statistical and Methodological Issues

Abbreviations: CI, confidence interval; ICMJE, International Committee of Medical Journal Editors; CONSORT, Consolidated Standards of Reporting Trials; QUOROM, Quality of Reporting of Meta-analyses; STARD, Standards for Reporting Diagnostic Accuracy.

1Cancer Research UK/NHS Centre for Statistics in Medicine, University of Oxford, Old Road Campus, Headington, Oxford OX3 7LF, UK, e-mail: doug.altman@cancer.org.uk; 2University of California Los Angeles Emergency Medicine Center, University of California Los Angeles School of Medicine, Los Angeles, CA, USA

Questionnaire Availability From Published Studies in 3 Prominent Medical Journals

Lisa Schilling, Kristy Lundahl, and Robert Dellavalle

Objective

To describe the availability of questionnaires used for research published in 3 high-circulation medical journals.

Design

A MEDLINE search via OVID using the terms “questionnaire” or “survey” identified 368 putative studies published between January 2000 and May 2003 in JAMA, New England Journal of Medicine, or The Lancet. Studies using nonnovel questionnaire instruments (eg, CAGE, Behavioral Risk Factor Surveillance Survey) were excluded. For inclusion, 2 investigators had to independently judge that the results of a questionnaire constituted the main outcome of the study. When the same corresponding author had more than 1 qualifying study, 1 study was randomly selected for inclusion. Eighty-seven qualifying studies remained. Five publications contained reproductions of the administered questionnaire. In June 2004, corresponding authors of the remaining 82 articles were asked via written correspondence to provide a copy of the questionnaire used in their study. Nonresponders were mailed 2 additional requests at 2-week intervals.

Results

Forty-four (54%; 95% confidence interval [CI], 43%-64%) of the requested questionnaires were provided; 38 (46%; 95% CI, 36%-57%) were not. Of those not provided, no reason was given for 26. The remaining 12 responses were: no access to the questionnaire (n = 3), questionnaire only given to collaborators (n = 2), referred to questionnaire description in the article (n = 2), change in job status (n = 1), no structured questionnaire used (n = 1), returned a questionnaire unrelated to the paper (n = 1), returned partial questionnaire (n = 1), and correct e-mail or postal address unavailable (n = 1).
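The abstract does not say how the confidence intervals were computed. As a check, the simple normal-approximation (Wald) interval for a proportion, p ± 1.96 × sqrt(p(1 − p)/n), reproduces the reported percentages for 44 and 38 of 82 requests after rounding; a Wilson interval appears to round to the same values here, so the check does not identify the method used. The helper name `wald_ci` is ours.

```python
import math


def wald_ci(successes, n, z=1.96):
    """Proportion with a normal-approximation (Wald) confidence interval."""
    p = successes / n
    half_width = z * math.sqrt(p * (1 - p) / n)
    return p, p - half_width, p + half_width


for label, k in (("provided", 44), ("not provided", 38)):
    p, lo, hi = wald_ci(k, 82)
    print(f"{label}: {p:.0%} (95% CI, {lo:.0%}-{hi:.0%})")
```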

Conclusions

Our study shows that 6% of novel questionnaires were published, and only 54% of unpublished questionnaires could be obtained via the corresponding author. Reforms, such as mandatory publication or deposition in an open access archive, are needed to guarantee access to the fundamentally indispensable component of questionnaire research, the questionnaire.

Departments of Medicine and Dermatology, University of Colorado at Denver and Health Sciences Center, 2400 E 9th Ave, B-180, Denver, CO 80262, USA, e-mail: lisa.schilling@uchsc.edu

Conflict of Interest Disclosure Policies and Practices of Peer-Reviewed Biomedical Journals

Richelle J. Cooper,1 Malkeet Gupta,1 Michael S. Wilkes,2 and Jerome R. Hoffman1

Objective

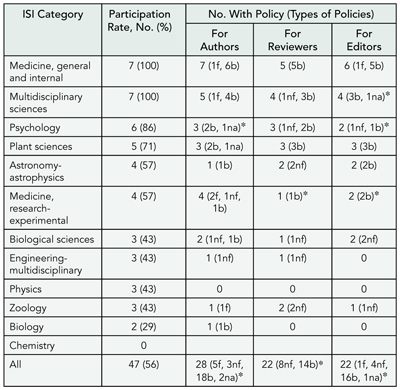

We undertook this investigation to characterize the conflict of interest (COI) policies of biomedical journals with respect to authors, peer-reviewers, and editors and to ascertain what information about COI disclosures is publicly available.

Design

We performed a cross-sectional survey of a convenience sample of 135 peer-reviewed biomedical journal editors. We included a broad range of North American and European, general and specialty medical journals that publish original clinical research, selected on the basis of impact factor and the recommendations of experts in the field. We reviewed each journal’s Web page to identify the editors, and each editor in our sample represented a single journal only. We developed and pilot tested a 3-part Web-based survey prior to data collection. The survey included questions about the presence of specific policies for authors, peer-reviewers, and editors; any specific restrictions on authors, peer-reviewers, and editors based on COI; and the public availability of these disclosures. To improve the response rate, we contacted each journal editor at least 3 times, and we provided a written version of the survey for those unable to access the Web site.

Results

The response rate for the survey was 91/135 (67%). Eighty-five (93%) journals have an author COI policy. Ten (11%) journals restrict author submissions based on COI (eg, drug company authors’ papers on their products are not accepted). While 77% report collecting COI information on all author submissions, only 57% publish all author disclosures. Of journal respondents, 42/91 (46%) and 36/91 (40%) have a specific policy on peer-reviewer and editorial COI, respectively; 25% and 31% of journals require recusal of peer-reviewers and editors, respectively, if they report a COI; and 3% publish peer-reviewer COI disclosures and 12% publish editor COI disclosures, although 11% and 24%, respectively, report that the information is available on request.

Conclusions

While most journals in our sample report having an author COI policy, the disclosures are not collected or published universally. Journals less frequently reported COI policies for peer reviewers and editors, and even less commonly publish those disclosures. Policies restricting authorship, peer review, or editing on the basis of COI were uncommon in our sample.

1UCLA Emergency Medicine Center, UCLA School of Medicine, 924 Westwood Blvd, #300, Los Angeles, CA, USA, e-mail: richelle@ucla.edu; 2UC Davis School of Medicine, CA, USA

Peer Review Process

Editorial Changes to Manuscripts Published in Major Biomedical Journals

Kirby P. Lee, Elizabeth A. Boyd, and Lisa A. Bero

Objective

To assess the added value of peer review by identifying and characterizing editorial changes made to initial submissions of manuscripts that were accepted and published.

Design

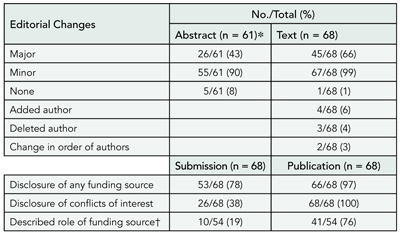

We studied a prospective cohort of original research articles (n = 1107) submitted to the Annals of Internal Medicine, BMJ, and Lancet between January 2003 and April 2003. Experimental and observational studies, systematic reviews, and qualitative, ethnographic, or nonhuman studies were included. Single case reports were excluded. The original submitted manuscript was compared with the final publication. Changes were documented separately for abstract and text and qualitatively categorized. Minor changes were classified as additions or deletions of words that improved the clarity, readability, or accuracy of reporting. Major changes were classified as additions or deletions of data, new or revised statistical analyses, or statements affecting the interpretation of results or conclusions. We also documented changes in authorship and disclosure of any funding source (including its role) or potential conflicts of interest. Proportions and frequencies were calculated using the manuscript as the unit of analysis.

Results

Of 1107 submitted manuscripts, 68 (6.1%) were accepted for publication. Changes from submission to publication are listed in TABLE 2. The editorial process varied widely, ranging from near-verbatim publication of submitted manuscripts to extensive additions and deletions of data or text and revised statistical analyses with new tables and figures. Most changes were minor, consisting of simple word modifications, statements, and clarifications. Major changes often included toning down the original conclusions, emphasizing study limitations, or revising statistical analyses. Disclosure of potential conflicts of interest, any funding source, and its role in the design, conduct, and publication process improved in the final publication.

Conclusions

The editorial process makes a wide variety of contributions to improve the clarity, accuracy, and reporting of results. Authors often do not disclose potential conflicts of interest or the role of the funding source in submitted manuscripts.

Institute for Health Policy Studies, University of California, San Francisco, San Francisco, CA 94118, USA, e-mail: leek@pharmacy.ucsf.edu

Table 2. Editorial Changes to Submitted Manuscripts

Abbreviations: CI, confidence interval; ICMJE, International Committee of Medical Journal Editors; CONSORT, Consolidated Standards of Reporting Trials; QUOROM, Quality of Reporting of Meta-analyses; STARD, Standards for Reporting Diagnostic Accuracy.

*Not all manuscripts contained an abstract.

†Only manuscripts that disclosed a funding source other than “none” (n = 54).

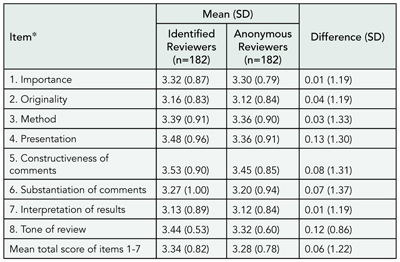

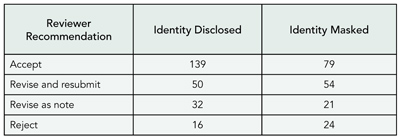

Comparison of Author and Editor Suggested Reviewers in Terms of Review Quality, Timeliness, and Recommendation for Publication

Sara Schroter,1,2 Leanne Tite,1 Andrew Hutchings,2 and Nick Black2

Objective

Many journals give authors the opportunity to suggest reviewers to review their paper. We report a study comparing author-suggested reviewers (ASRs) and editor-suggested reviewers (ESRs) of 10 biomedical journals in a range of specialties to investigate differences in review quality, timeliness, and recommendation for publication.

Design

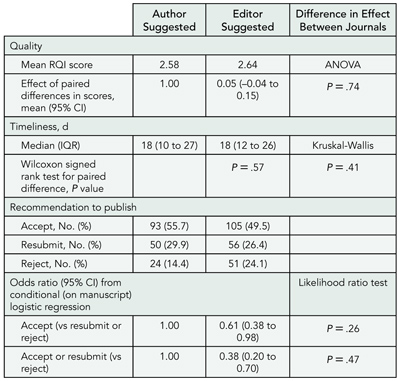

Original research papers sent for external review at 10 participating journals between April 1, 2003, and December 31, 2003, in which the author had suggested at least 1 reviewer were included. Editors were instructed to make decisions about their choice of reviewers in their usual manner. Journal administrators then requested additional reviews from the author’s list of suggestions according to a strict protocol using the journals’ electronic manuscript tracking systems. Review quality was rated independently by 2 raters, blind to reviewer identity and status, using the validated Review Quality Instrument. Timeliness was calculated as the interval between the dates when reviews were solicited and completed. Recommendation was calculated for 6 journals as the proportion of reviews recommending acceptance (including minor revision), resubmission, or rejection. Reviewers who were suggested by both the editor and the author were treated as ASRs.
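Timeliness as defined here is a simple date difference. The sketch below computes it for each review and summarizes it with the median, which is our assumption (turnaround times tend to be skewed); the dates are invented for illustration and the function name is hypothetical.

```python
from datetime import date
from statistics import median


def days_to_complete(solicited, completed):
    """Timeliness: days from the date a review was solicited to the date it was completed."""
    return (completed - solicited).days


# Hypothetical review dates, for illustration only
reviews = [
    (date(2003, 4, 1), date(2003, 4, 22)),
    (date(2003, 5, 10), date(2003, 6, 2)),
    (date(2003, 7, 15), date(2003, 7, 30)),
]
intervals = [days_to_complete(s, c) for s, c in reviews]
print(intervals, median(intervals))  # [21, 23, 15] 21
```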

Results

There were 788 reviews for 329 manuscripts. Review quality and timeliness did not differ significantly between ASRs and ESRs (TABLE 3). ESRs were less likely than ASRs to recommend acceptance, and less likely to recommend acceptance or resubmission. There was no evidence that the effect of reviewer status on review quality, timeliness, recommendation to accept, or recommendation to accept or resubmit varied across journals.

Conclusions

Author- and editor-suggested reviewers of biomedical research in a range of specialties did not differ in the quality of their reviews, but ASRs tended to make more favorable recommendations for publication.

Table 3. Impact of Reviewer Status on Review Quality, Timeliness, and Recommendation to Publish

Abbreviations: ANOVA, analysis of variance; CI, confidence interval; IQR, interquartile range; RQI, Review Quality Instrument.

1BMJ Editorial Office, BMA House, Tavistock Square, London WC1H 9JR, UK, e-mail: sschroter@bmj.com; 2Health Services Research Unit, London School of Hygiene and Tropical Medicine, London, UK

Effect of Authors’ Suggestions Concerning Reviewers on Manuscript Acceptance

Lowell A. Goldsmith,1 Elizabeth Blalock,2 Heather Bobkova,2 and Russell P. Hall3

Objective

To determine the effects of authors’ suggested/excluded reviewers on manuscript acceptance.

Design

The Journal of Investigative Dermatology is ranked first in impact factor in its clinical and basic science specialty. A total of 228 consecutive submissions of original articles, representing one third of 2003 annual submissions, were analyzed for the effect of authors’ suggestions concerning reviewers on peer review outcome. Odds ratios (ORs) were calculated with STATA version 7 and are presented with the 95% confidence intervals (CIs).

Results

Forty percent of submitters neither suggested nor excluded reviewers; 39% suggested only, 16% both suggested and excluded, and 5% excluded only. Fifty-five percent of submitters suggested between 1 and 14 reviewers (mode, 4). Nineteen percent of suggested reviewers were invited; of those, 92% agreed to review, and 90% of those who agreed completed their reviews. Twenty-one percent of submitters asked to exclude 1 or more reviewers. Of those requesting exclusion of reviewers, 54% named 1; 25% named 2; and 21% named 3 or more. The most common stated reason was “close competitors,” but usually no reason was given. Authors’ requests for exclusion were followed 93% of the time. Odds ratios for acceptance were 1.64 (95% CI, 0.97-2.79) for authors suggesting reviewers compared with those not suggesting reviewers, and 2.38 (95% CI, 1.19-4.72) for those excluding reviewers compared with those not excluding reviewers. In multivariate analysis, the OR for acceptance was 2.17 (95% CI, 1.08-4.38) for excluding reviewers, after adjusting for whether authors suggested reviewers. No significant difference (t test) was found in the time to first decision for either group.
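For a 2 × 2 table of acceptance by whether authors excluded reviewers, an odds ratio and a Woolf (log-scale) 95% CI can be computed as sketched below. The abstract does not give the cell counts or say which CI method Stata was asked to use, so the counts in the example are hypothetical, as is the function name. The reported intervals are roughly symmetric on the log scale, as a Woolf interval is: the geometric mean of the bounds, sqrt(0.97 × 2.79) ≈ 1.645, is close to the reported OR of 1.64.

```python
import math


def odds_ratio_ci(a, b, c, d, z=1.96):
    """Odds ratio with a Woolf (log-scale) confidence interval.

    a: accepted, factor present    b: rejected, factor present
    c: accepted, factor absent     d: rejected, factor absent
    """
    if min(a, b, c, d) <= 0:
        raise ValueError("the Woolf interval requires every cell to be positive")
    odds_ratio = (a * d) / (b * c)
    se_log_or = math.sqrt(1 / a + 1 / b + 1 / c + 1 / d)
    lower = math.exp(math.log(odds_ratio) - z * se_log_or)
    upper = math.exp(math.log(odds_ratio) + z * se_log_or)
    return odds_ratio, lower, upper


# Hypothetical counts, for illustration only
print(odds_ratio_ci(20, 10, 10, 20))
```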

Conclusions

Authors excluding reviewers had higher acceptance rates by both univariate and multivariate analysis. Suggesting reviewers had less influence on acceptance than excluding reviewers. The advantage that authors gain by excluding reviewers indicates a need for processes to eliminate bias during peer review.

1Department of Dermatology, University of North Carolina, Chapel Hill, NC, USA; 2Journal of Investigative Dermatology, 920B Airport Rd, Suite 216, Chapel Hill, NC 27514, USA, e-mail: elizabeth_blalock@med.unc.edu; 3Dermatology Division, Duke University, Durham, NC, USA

Assessment of Blind Peer Review on Abstract Acceptance for Scientific Meetings

Joseph S. Ross,1 Cary P. Gross,1 Yuling Hong,2 Augustus O. Grant,3 Stephen R. Daniels,4 Vladimir C. Hachinski,5 Raymond J. Gibbons,6 Timothy J. Gardner,7 and Harlan M. Krumholz1

Objective

To determine whether blind peer review, a common method for minimizing reviewer bias, affects acceptance of abstracts to scientific meetings when examined by characteristics of the authors’ institutions.

Design

We used data from the American Heart Association (AHA), which used open peer review from 2000 to 2001 and blind peer review from 2002 to 2004 for abstracts submitted to its annual Scientific Sessions. Authors’ institutions were categorized by country (United States vs non–United States) and country’s official language (English vs non-English). The US institutions were scored for prestige, combining total National Institutes of Health (NIH) awards (0-2 points) and US News Heart & Heart Surgery hospital ranking (0-2 points), and subsequently categorized as more (3-4 points) or less (0-2 points) prestigious. Data were analyzed using χ² tests and logistic regression.
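The prestige categorization adds 2 component scores and then dichotomizes the total. The sketch below encodes that rule; how NIH award totals and US News rankings were converted into 0-2 points is not described, so the function takes the points as given. The function name is hypothetical.

```python
def prestige_category(nih_points, ranking_points):
    """Combine NIH award points (0-2) and US News ranking points (0-2) into a 0-4 score.

    Scores of 3-4 are 'more' prestigious and 0-2 'less' prestigious, per the abstract.
    """
    for points in (nih_points, ranking_points):
        if points not in (0, 1, 2):
            raise ValueError("each component must be 0, 1, or 2 points")
    score = nih_points + ranking_points
    return score, "more" if score >= 3 else "less"


print(prestige_category(2, 1))  # (3, 'more')
print(prestige_category(1, 1))  # (2, 'less')
```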

Results

On average, the AHA received 13 456 abstracts per year over our study period. A slightly but significantly smaller proportion of abstracts was accepted during the blind review period than during the open review period (30% vs 28%, P < .001). Blind peer review was associated with lower acceptance from US institutions (41% vs 33%, P < .001), while acceptance from non-US institutions was slightly but significantly greater (23% vs 24%, P < .001). Among non-US institutions, blind review was associated with slightly greater acceptance from non–English-speaking countries (21% vs 23%, P < .001) and trended toward lower acceptance from English-speaking countries (31% vs 29%, P = .053). Adjusting for US acceptance rates, blind review was associated with a larger decrease in acceptance from more prestigious US institutions (51% vs 38%) than from less prestigious US institutions (37% vs 32%, P < .001), along with lower acceptance from US federal research institutions (65% vs 46%, P < .001). There was no difference in acceptance from corporate institutions (29% vs 28%, P = .79).

Conclusions

Our results suggest there is bias in the open peer review process, favoring prestigious institutions within the United States. These findings argue for universal adoption of blind peer review by scientific meetings.

1Yale University School of Medicine, IE-61 SHM, PO Box 208088, New Haven, CT 06520-8088, USA, e-mail: joseph.s.ross@yale.edu; 2American Heart Association, Dallas, TX, USA; 3Duke University, Durham, NC, USA; 4Cincinnati Children’s Hospital Medical Center, Cincinnati, OH, USA; 5University of Western Ontario, London, Ontario, Canada; 6Mayo Clinic and Mayo Foundation, Rochester, MN, USA; 7Christiana Care Health System, Newark, DE, USA

Peering at Peer Review: Harnessing the Collective Wisdom to Arrive at Funding Decisions About Grant Applications

Nancy E. Mayo,1 James Brophy,1 Mark S. Goldberg,1 Marina B. Klein,1 Sydney Miller,2 Robert Platt,1 and Judith Ritchie1

Objective

There is a persistent degree of uncertainty and dissatisfaction with the peer review process around the awarding of grants, underlining the need to validate current procedures. The purpose of our study was to compare the allocation of research funding among 3 different processes for peer review: Classic Structured Scientific In-depth 2-reviewer Critique (CLASSIC), all panel members’ independent ranking method (RANKING), and committee of review of rankings (RE-RANK).

Design

Two consecutive years of a pilot project competition at a major university medical center provided the material for a series of experiments. During the first year of the competition, 11 reviewers rated 32 applications; during the second year, 15 reviewers rated 23 applications. For each of these 2 samples, agreement between the RANKING and CLASSIC methods was compared; the impact of rater on the funding decision was assessed by determining the proportion of projects that would meet the funding cutoff considering all possible pairs of reviewers [n(n-1)/2]; Cronbach α was used to identify the number of reviewers needed for optimal consistency, and associations between pairs of raters were calculated.
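The pairwise analysis asks, for every way of choosing 2 reviewers from the panel, which projects would have made the funding cutoff. With n reviewers there are n(n − 1)/2 pairs: 55 for the first year's 11 reviewers and 105 for the second year's 15, the figure that appears in the Results. The sketch below assumes that every reviewer scores every project (as in RANKING), that a pair's score for a project is the mean of the 2 reviewers' scores, and that the top `n_funded` projects are funded; the abstract does not specify these details, and the function names are hypothetical.

```python
from itertools import combinations


def pair_count(n):
    """Number of distinct reviewer pairs, n(n - 1)/2."""
    return n * (n - 1) // 2


def funding_frequency(scores, n_funded):
    """Fraction of reviewer pairs under which each project makes the funding cutoff.

    scores[r][p] is the score reviewer r gave project p (higher is better).
    """
    n_reviewers, n_projects = len(scores), len(scores[0])
    pairs = list(combinations(range(n_reviewers), 2))
    funded = [0] * n_projects
    for a, b in pairs:
        pair_score = [(scores[a][p] + scores[b][p]) / 2 for p in range(n_projects)]
        ranking = sorted(range(n_projects), key=lambda p: pair_score[p], reverse=True)
        for p in ranking[:n_funded]:
            funded[p] += 1
    return [count / len(pairs) for count in funded]


print(pair_count(11), pair_count(15))  # 55 105
```

Subtracting a project's funding frequency from 1 gives how often it would have failed the cutoff, the quantity reported for the top-rated project in each sample.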

Results

Agreement between the CLASSIC and RANKING methods was poor in both samples (κ = 0.36). Depending on the pairings, the top-rated project in each sample would have failed the funding cutoff with a frequency of 9% and 35%, respectively. Four of the top 10 projects identified by RANKING had a greater than 50% chance of not being funded by the CLASSIC ranking. Ten reviewers provided optimal consistency for the RANKING method in the first sample, but this was not repeated in the second sample. There were only 3 statistically significant positive associations among the 105 pairings of the 15 raters, and there were many negative correlations, some quite strongly negative. Compared with the CLASSIC method, RANKING resulted in 18 of 23 projects (78%) changing their rank by more than 3 places; however, disagreement on funding status occurred for only 4 of the projects (17%). RE-RANKing during committee discussion resulted in a change of funding status for 2 of the 23 previously ranked projects (<9%).
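Cohen's κ corrects the observed agreement between 2 methods for the agreement expected by chance from each method's marginal proportions. The abstract does not state whether κ was computed on funding decisions or on ranks; the sketch below assumes binary funded/not funded decisions for the same projects, uses hypothetical data, and its function name is ours.

```python
def cohens_kappa(decisions_a, decisions_b):
    """Cohen's kappa for 2 sets of categorical decisions on the same items."""
    if len(decisions_a) != len(decisions_b) or not decisions_a:
        raise ValueError("need 2 non-empty lists of equal length")
    n = len(decisions_a)
    observed = sum(x == y for x, y in zip(decisions_a, decisions_b)) / n
    categories = set(decisions_a) | set(decisions_b)
    # Chance agreement: product of each method's marginal proportion, summed over categories
    expected = sum((decisions_a.count(k) / n) * (decisions_b.count(k) / n) for k in categories)
    if expected == 1:
        raise ValueError("kappa is undefined when both methods use a single identical category")
    return (observed - expected) / (1 - expected)


# Hypothetical funding decisions (1 = funded) for 4 projects under 2 methods
classic = [1, 1, 0, 0]
ranking = [1, 0, 0, 0]
print(cohens_kappa(classic, ranking))  # 0.5
```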

Conclusions

The lack of concordance among reviewers on the relative merits of individual research grants indicates that under the classic process, with a small number of reviewers, there is a risk that the funding outcome will depend on who is assigned as reviewers rather than on the merits of the project. Prior RANKING appears to be a way of harnessing the collective wisdom and producing some stability in funding decisions across time.

1Division of Clinical Epidemiology R4.29, McGill University Health Center, RVH Site, 687 Pine Ave W, Montreal, Quebec, H3A 1A1, Canada, e-mail: nancy.mayo@mcgill.ca; 2Concordia University, Montreal, Quebec, Canada

A Second Order of Peer Review: Peer Review for Clinical Practitioners

Brian Haynes, Chris Cotoi, Leslie Walters, Jennifer Holland, Nancy Wilczynski, Dawn Jedraszewski, James McKinlay, and Ann McKibbon; for the PLUS project

Objective

Clinical journals serve many lines of communication, including scientist-to-scientist, clinician-to-scientist, scientist-to-clinician, and clinician-to-clinician. We describe a second order of peer review to serve individual clinician readers.

Design

We developed an online clinical peer review system to identify articles of highest interest for each of a broad range of clinical disciplines. Practicing clinicians are recruited to the McMaster Online Rating of Evidence (MORE) system and register according to their clinical discipline. Research staff reviewed more than 110 clinical journals to select each article that meets critical appraisal criteria for diagnosis, treatment, cause, course, and economics of health care problems. An automated system assigns each qualifying article to 4 clinical raters for each pertinent discipline, and records their online assessments of the article’s relevance and newsworthiness. Rated articles are transferred to a database that is used to select articles for 3 evidence-based journals and to feed online alerting services: McMaster PLUS and BMJUPDATES+. Users of these services receive alerts and can search the database according to peer ratings for their own discipline. McMaster PLUS is being evaluated in a cluster randomized trial.
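The automated assignment step can be sketched as drawing 4 distinct raters from each pertinent discipline's registered pool. The abstract does not say how MORE chooses among eligible raters; random selection, the data layout, and the function name below are all assumptions.

```python
import random


def assign_raters(article_disciplines, raters_by_discipline, per_discipline=4, seed=None):
    """Pick `per_discipline` distinct raters from each pertinent discipline for 1 article."""
    rng = random.Random(seed)
    assignment = {}
    for discipline in article_disciplines:
        pool = raters_by_discipline[discipline]
        if len(pool) < per_discipline:
            raise ValueError(f"fewer than {per_discipline} raters registered for {discipline}")
        assignment[discipline] = rng.sample(pool, per_discipline)
    return assignment


# Hypothetical registry of raters by clinical discipline
registry = {
    "primary care": ["r1", "r2", "r3", "r4", "r5", "r6"],
    "cardiology": ["r7", "r8", "r9", "r10", "r11"],
}
print(assign_raters(["primary care", "cardiology"], registry, seed=42))
```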

Results

To date, MORE has 2015 clinical raters, with 34 974 ratings collected for 6573 articles. Ratings for articles of potential interest to both primary care physicians and specialists reflect the different interests of these groups (P < .05). Preliminary results from the McMaster PLUS trial (n = 203 physicians) show an increase in the use of evidence-based information resources (mean difference, 0.43 logins per month; 95% confidence interval, 0.02-0.16; P < .02).

Conclusions

A peer review system to suit the information interests of specific groups is clearly feasible. Preliminary findings show that it differentiates between clinical disciplines and stimulates the users of the services it creates to use more evidence-based resources, such as original and systematic review articles.

Health Information Research Unit, Michael G. DeGroote School of Medicine, McMaster University, 1200 Main St W, Room 2C10b, Hamilton, Ontario L8N 3Z5, Canada, e-mail: bhaynes@mcmaster.ca

Scientific Misconduct

Retractions in the Research Literature: Misconduct or Mistakes?

Benjamin G. Druss,1 Sara Bressi,2 and Steven C. Marcus2

Objective

While considerable attention has been directed to cases of scientific misconduct in the scientific literature, far less is known about the number and nature of unintentional research errors. To better understand this issue, we examined the characteristics of retracted articles indexed in MEDLINE, focusing on comparisons between misconduct and mistakes.

Design

All retractions of publications indexed in MEDLINE between 1982 and 2002 were extracted and categorized by 2 reviewers as representing misconduct (fabrication, falsification, or plagiarism), unintentional error (mistakes in sampling or data analysis, failure to reproduce findings, or omission of information), or other causes. Our analyses compared articles retracted because of unintentional error with those retracted because of scientific misconduct, in terms of the characteristics of the retracted article and the time to the subsequent notification of retraction.

Results

Of a total of 395 articles retracted during the study period, only 107 (27.1%) reflected scientific misconduct. A total of 244 retractions (61.8%) represented unintentional errors and 44 (11.1%) represented other issues or had no information on the cause of the retraction. Compared with unintentional errors, cases of misconduct were more likely to be written by a single author (10.5% vs 5.7%). Mistakes were more likely to be reported by an author of the initial manuscript (90.2% vs 35.2%), to be in a manuscript with no reported funding source (59.4% vs 40.5%), and to have a shorter time lapse between the initial publication and the retraction (mean, 2 vs 3.3 years). There were no differences in types of retractions based on the date of publication (before or after 1991) or the type of research (human subjects vs basic research).

Conclusions

The findings suggest that unintentional mistakes are a substantially more common cause of retractions in the biomedical literature than scientific misconduct. Substantial differences exist between these 2 categories in publication characteristics, authorship, and reporting.

1Rollins School of Public Health, 1518 Clifton Rd NE, Room 606, Atlanta, GA 30322, USA, e-mail: bdruss@emory.edu; 2University of Pennsylvania School of Social Work, Philadelphia, PA, USA

Citation of Literature Flawed by Scientific Misconduct

A. Victoria Neale, Justin Northrup, Judith Abrams, and Rhonda Dailey

Objective

To determine the extent to which authors cite articles identified in official reports as affected by scientific misconduct, and to characterize the nature of such citations.

Design

Using the National Institutes of Health (NIH) Guide for Grants and Contracts, Findings of Scientific Misconduct, and the US Office of Research Integrity annual reports from 1993 to 2001, we identified 102 articles named as needing retraction or correction, and we determined the type of corrigendum posted in PubMed for each. Using the Web of Science, we conducted bibliometric analyses to identify subsequent citations of these affected (problem) articles. A stratified random sample of 604 of the 5164 citing articles was drawn for a content analysis to determine how subsequent researchers used the affected articles.

Results

Of the 102 articles flawed by misconduct, 4 were not indexed in PubMed; 47 were tagged in PubMed with a retraction notice, 26 with an erratum, and 12 with a comment correction. Ten articles had only a link to the NIH Guide Findings of Scientific Misconduct, and 3 articles had no corrigendum whatsoever. Most problem articles were basic science studies (68% in vitro and 7% animal), and 25% were clinical studies. The problem articles had a median of 26 subsequent citations (range, 0-592). The problem article was embedded in a string of references in 61% of citing articles; there was specific reference to the problem article in 39%. Few citing articles (9%) used the problem article as direct support or contrast, 54% used it as indirect support or contrast, and 33% did not address invalid information in the problem article. Only 4% of citing articles referenced the corrigendum.

Conclusions

Although most articles named in misconduct investigations have an identifiable corrigendum, few citing articles reference the corrigendum in their bibliography. There is scant evidence of awareness of the misconduct in most citing articles.

Department of Family Medicine, Wayne State University, 101 E Alexandrine, Detroit, MI 48201, USA, e-mail: vneale@med.wayne.edu

For Which Cases of Suspected Misconduct Do Editors Seek Advice? An Observational Study of All Cases Submitted to COPE

Sabine Kleinert,1 Jeremy Theobald,2 Elizabeth Wager,3 and Fiona Godlee4

Objective

To describe the nature and main concerns of all cases discussed at the Committee on Publication Ethics (COPE) from its inception in April 1997 to September 2004.

Design

Observational study reporting number of all cases and breakdown of main problems discussed by COPE. Analysis of cases as published in COPE reports and, for 2004, from COPE committee meeting minutes.

Results

From 1997 to September 2004, 212 cases were submitted to COPE. The most frequent problem presented was duplicate or redundant publication or submission (58 cases), followed by authorship issues (26 cases), lack of ethics committee approval (25 cases), no or inadequate informed consent (22 cases), falsification or fabrication (19 cases), plagiarism (17 cases), unethical research or clinical malpractice (15 cases), and undeclared conflict of interest (8 cases). Six cases of reviewer misconduct and 3 cases of editorial misconduct were discussed. A total of 132 cases (63%) related to papers before publication and 72 (34%) to papers that had already been published; in 8 cases, the question or concern was not related to a particular paper. In most cases (n = 159 [75%]), the committee felt that there were sufficient grounds to pursue the case further. In 79 cases, outcomes were reported following advice by COPE and actions by editors. In 16 of these cases (20%), the authors were exonerated; 15 (19%) could not be resolved satisfactorily; and in 23 (29%), the authors’ institution was asked to investigate. Investigations took longer than 1 year in 36 cases (46%).

Conclusions

Editors are confronted with a wide spectrum of suspected research and publication misconduct before and after publication and have a duty to pursue such cases. COPE provides a forum for discussion and advice among editors and a repository of cases for educational purposes. Reports of outcomes suggest that, in many cases, investigations take a long time and a substantial number of cases cannot be adequately resolved.

1The Lancet, 32 Jamestown Rd, London NW1 7BY, UK, e-mail: sabine.kleinert@lancet.com; 2John Wiley & Sons Ltd, London, UK; 3Sideview, Princes Risborough, UK; 4BMJ, London, UK

SATURDAY, SEPTEMBER 17

Publication Bias and Funding/Sponsorship

Are Authors’ Financial Ties With Pharmaceutical Companies Associated With Positive Results or Conclusions in Meta-analyses on Antihypertensive Medications?

Veronica Yank,1 Drummond Rennie,2,3 and Lisa A. Bero3

Objective

To determine whether authors’ financial ties with pharmaceutical companies are associated with positive results or conclusions in meta-analyses on antihypertensive medications.

Design

Meta-analyses published January 1966 to June 2002 that evaluated antihypertensive medications in nonpregnant adults were included. We plan to update our search to include meta-analyses published through December 2004. Meta-analyses were identified by electronically searching PubMed and the Cochrane Database and by hand-searching the reference lists of identified meta-analyses. Duplicate meta-analyses were excluded. Non–English-language articles have not yet been evaluated for inclusion. Financial ties were defined as author affiliation and funding source, as disclosed in the article. Results and conclusions were separately categorized as positive (significantly in favor of study drug), negative (significantly against study drug), not significant/neutral, or unclear. The data extraction tool was pretested, and the quality of each meta-analysis was assessed using a validated instrument. A pilot study demonstrated good reliability among the 3 authors in data extraction and quality assessment.

Results

Seventy-one eligible meta-analyses were identified. Twenty-three of these (32%) had drug industry financial ties. We found no difference between meta-analyses with or without financial ties in the proportion of positive results. In contrast, conclusions in meta-analyses with financial ties were positive in 91%, unclear in 4%, neutral in 4%, and negative in none, whereas conclusions in meta-analyses without financial ties were positive in 72%, unclear in 2%, neutral in 17%, and negative in 8%. The mean quality scores for each group were similar (0.36 and 0.34 for meta-analyses with and without financial ties, respectively). We will perform multiple logistic regression analyses to determine whether other factors are associated with positive conclusions.

Conclusions

Preliminary data suggest that meta-analyses with and without disclosed financial ties to the pharmaceutical industry are similar in direction of results and quality. However, those with financial ties have a higher proportion of conclusions favoring the study drug.

1University of Washington, 4411 4th Ave NE, Seattle, WA 98105, USA, e-mail: vyank@u.washington.edu; 2JAMA, Chicago, IL, USA; 3Institute for Health Policy Studies, University of California, San Francisco, San Francisco, CA, USA

Sponsorship, Bias, and Methodology: Cochrane Reviews Compared With Industry-Sponsored Meta-analyses of the Same Drugs

Anders W. Jørgensen and Peter C. Gøtzsche

Objective

Trials sponsored by the pharmaceutical industry are often biased. One would therefore expect systematic reviews sponsored by the industry to be biased. We studied whether Cochrane Reviews and industry-sponsored meta-analyses of the same drugs differed in methodological quality and conclusions.

Design

We searched MEDLINE, EMBASE, and the Cochrane Library (issue 1, 2003) to identify pairs of meta-analyses—1 Cochrane Review and 1 industry-sponsored review—that compared the same 2 drugs in the same disease and were published within 2 years of each other. Data extraction and quality assessment were performed independently by 2 observers using a validated scale to judge the scientific quality of the reviews and a binary scale to grade conclusions.

Results

One hundred seventy-five of 1596 Cochrane Reviews had a meta-analysis that compared 2 drugs. We found 24 paper-based meta-analyses that matched the Cochrane Reviews; 8 were industry-sponsored, 9 had unknown support, and 7 had no support or were supported by nonindustry sources. On a scale from 0 through 7, the overall median quality score was 7 for Cochrane Reviews, 2 for industry-sponsored reviews (P < .01), and 2 for reviews with unknown support (P < .01). Compared with industry-sponsored reviews, more Cochrane Reviews had stated their search methods, had comprehensive search strategies, used more sources to identify studies, made an effort to avoid bias in the selection of studies, reported criteria for assessing the validity of the studies, used appropriate criteria, and described methods of allocation concealment, excluded patients, and excluded studies. All reviews supported by industry recommended the experimental drug without reservations vs none of the Cochrane Reviews (P < .001). Reviews with unknown support and reviews with not-for-profit support or no support also had cautious conclusions.

Conclusions

Systematic reviews of drugs should not be sponsored by industry. And if they are, they should not be trusted.

The Nordic Cochrane Centre, Rigshospitalet, Dept 7112, Blegdamsvej 9, DK-2100 Copenhagen, Denmark, e-mail: pcg@cochrane.dk

Is Everything in Health Care Cost-effective? Reported Cost-effectiveness Ratios in Published Studies

Chaim M. Bell,1,2,4 David R. Urbach,1,3,4 Joel G. Ray,1,2 Ahmed Bayoumi,1,2 Allison B. Rosen,5 Dan Greenberg,6 and Peter J. Neumann7

Objective

Cost-effectiveness analysis can inform policy makers about the efficient allocation of resources. Incremental cost-effectiveness ratios are used commonly to quantify the value of a diagnostic test or therapy. We investigated whether published studies tend to report favorable cost-effectiveness ratios (less than $20 000, $50 000, and $100 000 per quality-adjusted life year [QALY] gained) and evaluated study characteristics associated with this phenomenon.
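The incremental cost-effectiveness ratio referred to above is the difference in cost between 2 strategies divided by the difference in their health effects, here measured in QALYs. The Python sketch below uses hypothetical costs and QALY values (not drawn from any study in this analysis) to compute the ratio and check it against the 3 thresholds named in the abstract. The names `Strategy`, `icer`, and `below_thresholds` are illustrative only, and the sketch handles only the case in which the new strategy gains QALYs; dominated or cost-saving comparisons would need separate treatment.

```python
from dataclasses import dataclass

# Cut points ($/QALY) examined in the abstract
THRESHOLDS = (20_000, 50_000, 100_000)


@dataclass
class Strategy:
    cost: float   # total cost in US dollars (hypothetical values below)
    qalys: float  # quality-adjusted life years


def icer(new: Strategy, comparator: Strategy) -> float:
    """Incremental cost per QALY gained: (C_new - C_comp) / (E_new - E_comp)."""
    delta_effect = new.qalys - comparator.qalys
    if delta_effect <= 0:
        # This sketch covers only the case in which the new strategy adds QALYs
        raise ValueError("new strategy must gain QALYs for this sketch")
    return (new.cost - comparator.cost) / delta_effect


def below_thresholds(ratio: float) -> dict[int, bool]:
    """Report whether the ratio falls below each threshold."""
    return {t: ratio < t for t in THRESHOLDS}


ratio = icer(Strategy(cost=30_000, qalys=6.5), Strategy(cost=12_000, qalys=5.5))
print(ratio)                    # 18000.0
print(below_thresholds(ratio))  # {20000: True, 50000: True, 100000: True}
```

In this hypothetical example the new strategy costs $18 000 more and yields 1 additional QALY, so its ratio falls below all 3 thresholds.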

Design

We reviewed published English-language cost-effectiveness analyses indexed in MEDLINE between 1976 and 2001. We included original cost-effectiveness analyses that measured health effects in QALYs. All incremental cost-effectiveness ratios were measured in US dollars set to the year of publication (we did not adjust them to constant dollars because we tested whether ratios targeted certain thresholds, such as $50 000/QALY, in the year of publication). For all 533 articles, we used generalized estimating equations to determine study characteristics associated with incremental cost-effectiveness ratios below 3 threshold values ($20 000, $50 000, and $100 000/QALY), including journal impact factor in the year prior to publication, disease category, country of origin, funding source, and assigned quality score.

Results

About half (712/1433) of all reported incremental cost-effectiveness ratios were less than $20 000/QALY. Industry-funded studies were more likely to report ratios less than $20 000/QALY (adjusted odds ratio [OR], 2.2; 95% confidence interval [CI], 1.4-3.4), $50 000/QALY (OR, 3.5; 95% CI, 2.0-6.1), and $100 000/QALY (OR, 3.4; 95% CI, 1.6-7.0) than non–industry-funded studies. Conversely, studies of higher methodological quality (OR, 0.58; 95% CI, 0.37-0.91) and those conducted in Europe (OR, 0.59; 95% CI, 0.33-1.1) and the United States (OR, 0.44; 95% CI, 0.26-0.76) were less likely to report incremental cost-effectiveness ratios less than $20 000/QALY than those conducted elsewhere.

Conclusions

The majority of published cost-effectiveness analyses report highly favorable incremental cost-effectiveness ratios. Awareness of potentially influential factors can enable health journal editors as well as policy makers to better judge cost-effectiveness analyses.

1University of Toronto, Toronto, Ontario, Canada; 2St Michael’s Hospital, 30 Bond St, Toronto, Ontario M5B 1W8, Canada, e-mail: bellc@smh.toronto.on.ca; 3University Health Network, Toronto, Ontario, Canada; 4Institute for Clinical Evaluative Sciences, Toronto, Ontario, Canada; 5University of Michigan, Ann Arbor, MI, USA; 6Health Systems Management, Ben-Gurion University of the Negev, Beersheba, Israel; 7Center for Risk Analysis, Harvard School of Public Health, Cambridge, MA, USA

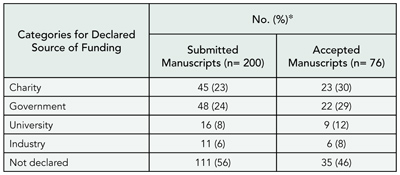

Publication Bias and Journal Factors

Characteristics of Accepted and Rejected Manuscripts at Major Biomedical Journals: Predictors of Publication

Kirby P. Lee, Elizabeth A. Boyd, Peter Bacchetti, and Lisa A. Bero

Objective

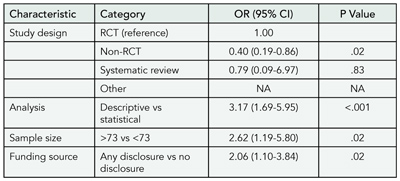

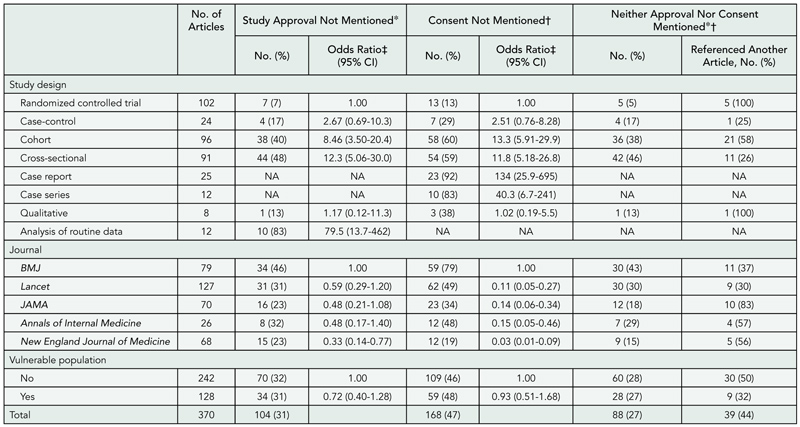

To identify characteristics of submitted manuscripts that are associated with acceptance for publication at major biomedical journals.

Design

Prospective cohort of original research articles (n = 1107) submitted for publication January 2003-April 2003 at 3 leading biomedical journals. Studies of experimental and observational design, systematic reviews, and qualitative, ethnographic, or nonhuman studies were included. Case reports of single patients were excluded. Characteristics identified for each manuscript included research focus, study design, analytic methods (statistical/quantitative or descriptive/qualitative), clearly stated hypothesis, statistical significance of primary outcome (P < .05 vs P ≥ .05), sample size (dichotomized at median, n = 73), description of participants, funding source, country of origin and institutional affiliation of corresponding author, and sex and academic degree of authors. Multivariate logistic regression was used to model predictors of publication.

Results

Of 1107 manuscripts submitted, 68 (6.1%) were published. Characteristics associated with publication are listed in TABLE 4. When added to the model shown, a statistically significant primary outcome did not appear to improve the chance of publication (significant vs not; odds ratio, 0.55; 95% confidence interval, 0.24-1.30; P = .18).

Conclusions

We found no evidence of editorial bias favoring studies with statistically significant results. Submitted manuscripts reporting randomized controlled trials or systematic reviews, analyses using descriptive or qualitative methods, sample sizes of 73 or more, or disclosure of the funding source were more likely to be published.

Institute for Health Policy Studies, University of California, San Francisco, 3-333 California St, Suite 420, Box 0613, San Francisco, CA 94118, USA, e-mail: leek@pharmacy.ucsf.edu

Table 4. Characteristics Associated With Manuscripts Published in 3 Leading Biomedical Journals

Abbreviations: CI, confidence interval; NA, not available because numbers too small for analysis; OR, odds ratio; RCT, randomized controlled trial.

Rethinking Publication Bias: Developing a Schema for Classifying Editorial Discussion

Kay Dickersin and Catherine Mansell

Objective

To identify new features of publication bias (ie, favorable factors associated with decision to publish) in editorial decision making.

Design

Cross-sectional, qualitative analysis of discussions at manuscript meetings of JAMA. One of us (K.D.) attended 12 editorial meetings in 2003 and took notes recording discussion surrounding 102 manuscripts. In addition, editors attending the meetings noted the “negative” (not favoring publication) and “positive” (favoring publication) factors associated with each manuscript considered. We extracted unique sentences and phrases used by the editors to describe the manuscripts reviewed and entered them into an Excel spreadsheet; one of us (C.M.) subsequently coded the phrases using NVivo2 qualitative analysis software.

Results

From the list of phrases, we sorted terms into categories such as author characteristics (eg, highly regarded), the manuscript (eg, well-written), and the topic, as well as peer reviewer opinion and scientific aspects of the work (eg, sample size). We determined that most discussion could be assigned to 3 broad categories: scientific features, journalistic goals, and writing. We revised our classification system iteratively, using internal discussion and peer input, until we established 27 subgroups. Phrases related to scientific merit (design, measures, population) predominated. Phrases related to journalistic goals (readership needs, timeliness) were nearly as common, followed by phrases related to the writing. Although statistical significance of study findings was rarely discussed explicitly, terms related to the concept of strength and direction of findings were relatively frequent.

Conclusions

Studies of editorial publication decision making should assess the relative impact of factors related to scientific merit, journalistic goals, and writing, in addition to statistical significance of results.

Brown University, Center for Clinical Trials and Evidence-based Healthcare, 169 Angell St, Box G-S2, Providence, RI 02912, USA, e-mail: kay_dickersin@brown.edu

Is Publication Bias Associated With Journal Impact Factor?

Yuan-I Min,1 Aynur Unalp-Arida,2 Roberta Scherer,3 and Kay Dickersin4

Objective

To evaluate the association between statistically significant results for the primary outcome and the journal impact factor of published clinical trials.

Design

An existing data set from a retrospective follow-up study (1988-1989) of 3 cohorts of initiated studies evaluating publication bias was used for this analysis. Only trials with full publication (defined as an article with ≥3 pages) after study enrollment had been completed, at the time of the follow-up, were included (n = 219). Results for primary outcomes and publication history of the trials were based on investigator interviews. Journal impact factor of published trials was assessed using the 1988 Science Citation Index (impact factors of 0 were assigned to journals not in the Science Citation Index). We used the journal impact factor of the first full publication after study enrollment was completed for all analyses. If more than 1 article was published in the same year, the highest journal impact factor was used. The association between journal impact factor and statistical significance of the primary results was examined using the odds ratio.

Results

Journal impact factors for the trials in our cohorts (published 1967-1989) ranged from 0 to 21.148. The numbers of journals in each journal impact factor quartile were 45 (first quartile), 35 (second quartile), 26 (third quartile), and 13 (fourth quartile). The median journal impact factors for trials with statistically significant primary results (n = 133) and those with nonsignificant primary results (n = 86) were 2.73 and 1.91, respectively (P = .02). Compared with the lowest journal impact factor quartile, the odds ratios of having a significant primary result, for each journal impact factor quartile from low to high (≤0.672, >0.672 and ≤2.241, >2.241 and ≤4.482, >4.482), were 1.00, 1.24 (95% confidence interval [CI], 0.57-2.70), 1.39 (95% CI, 0.66-2.93), and 2.54 (95% CI, 1.14-5.63), respectively (P = .02 for trend).
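The quartile odds ratios above compare the odds of a significant primary result in each impact factor quartile with the odds in the lowest quartile. A minimal Python sketch of one such comparison from a 2 × 2 table follows, assuming a Woolf (log-based) 95% confidence interval; the abstract does not state which interval method the authors used, the function name `odds_ratio_woolf` is illustrative, and the cell counts in the example are hypothetical, not the study's data.

```python
import math


def odds_ratio_woolf(a: int, b: int, c: int, d: int, z: float = 1.96):
    """Odds ratio and Woolf 95% CI for a 2 x 2 table.

    a: significant results in the higher quartile
    b: nonsignificant results in the higher quartile
    c: significant results in the reference (lowest) quartile
    d: nonsignificant results in the reference (lowest) quartile
    """
    if min(a, b, c, d) == 0:
        raise ValueError("a zero cell needs a continuity correction first")
    odds_ratio = (a * d) / (b * c)
    # Standard error of log(OR) under the Woolf approximation
    se_log_or = math.sqrt(1 / a + 1 / b + 1 / c + 1 / d)
    lower = math.exp(math.log(odds_ratio) - z * se_log_or)
    upper = math.exp(math.log(odds_ratio) + z * se_log_or)
    return odds_ratio, lower, upper


# Hypothetical counts for illustration only (not the study's data)
or_, lo, hi = odds_ratio_woolf(a=40, b=15, c=25, d=24)
print(f"OR {or_:.2f} (95% CI, {lo:.2f}-{hi:.2f})")
```

The reference quartile always has an odds ratio of 1.00 by construction, which is why the first value reported above is exactly 1.00.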

Conclusions

Trials with statistically significant results were more likely to be published in a high-impact journal. Our results suggest publication bias at the journal level. Data from other cohorts of trials are needed to generalize our results.

1MedStar Research Institute, Department of Epidemiology and Statistics, 6495 New Hampshire Ave, Suite 201, Hyattsville, MD, USA, e-mail: nancy.min@medstar.net; 2Johns Hopkins University, Center for Clinical Trials, Baltimore, MD, USA; 3University of Maryland, Department of Epidemiology and Preventive Medicine, Baltimore, MD, USA; 4Brown University, Department of Community Health, Providence, RI, USA

Effect of Indexing, Open Access, and Journal “Phenomena” on Submissions, Citations, and Impact Factors

Impact of SciELO and MEDLINE Indexing on the Submission of Articles to a Non–English-Language Journal

Danilo Blank, Claudia Buchweitz, and Renato S. Procianoy

Objective

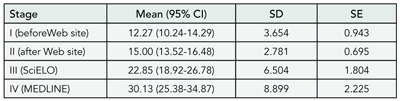

To evaluate the impact of Scientific Electronic Library Online (SciELO) and MEDLINE indexing on the number of articles submitted to Jornal de Pediatria, a Brazilian bimonthly pediatrics journal with a Portuguese print version and a bilingual (Portuguese/English) free-access, full-text, online version.

Design

Analysis of total article submissions, submissions of articles from countries other than Brazil, and acceptance data from 2000 through 2004. Since there were no changes in the editorial board or in the methods of manuscript submission during this period, 3 events were considered as having a potential impact on submission rates: launch of the bilingual Web site, indexing in SciELO, and MEDLINE indexing. Thus, data analysis was divided into 4 stages: stage I, pre-Web site (January 2000-March 2001 [15 months]); stage II, Web site (April 2001-July 2002 [16 months]); stage III, SciELO (August 2002-August 2003 [13 months]); and stage IV, MEDLINE (September 2003-December 2004 [16 months]). Simple regression was used for trend analysis, 1-way ANOVA was used on rank-transformed data with the Duncan post hoc test to compare the number of submissions in each period, and the Fisher exact test with Finner-Bonferroni P value adjustment was used to compare submissions from countries other than Brazil in the 4 periods.

Results

There was a significant trend toward linear increase in the number of submissions over the study period (P = .009). The numbers of manuscripts submitted in stages I through IV were 184, 240, 297, and 482, respectively. The numbers of submissions were similar in stages I and II (P = .15) but significantly higher in stage III (P < .001 vs stage I and P = .006 vs stage II) and stage IV (P < .001 vs stages I and II and P < .05 vs stage III). The variation in mean monthly submissions became more pronounced in stages III and IV (TABLE 5). The rate of article acceptance decreased during the study period. The number of original articles published has been stable since the 2001 March/April issue (n = 10), when the journal reached a printed page limit, leading to stricter judgment criteria and a relative decrease in acceptance rate. The numbers of manuscript submissions from countries other than Brazil in stages I through IV were 1, 2, 0, and 17, respectively, with P < .001 for the comparison of stage IV with previous stages.
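Because the 4 stages differ in length, comparing raw totals overstates or understates change; dividing each stage total by its number of months gives a rough monthly rate. The Python sketch below does only that arithmetic, using the stage totals from the Results and the stage lengths from the Design. It is not the authors' analysis, and the values in Table 5 may differ if they were computed from individual monthly counts rather than from stage totals.

```python
# (stage, manuscripts submitted, months in stage), taken from the Design and Results
STAGES = [
    ("I (pre-Web site)", 184, 15),
    ("II (Web site)", 240, 16),
    ("III (SciELO)", 297, 13),
    ("IV (MEDLINE)", 482, 16),
]


def mean_monthly(submitted: int, months: int) -> float:
    """Average number of submissions per month over a stage."""
    return submitted / months


rates = {stage: mean_monthly(n, months) for stage, n, months in STAGES}
for stage, rate in rates.items():
    print(f"Stage {stage}: {rate:.1f} submissions per month")
```

On these totals, the monthly rate rises from about 12 in stage I to about 30 in stage IV.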

Conclusions

These results suggest that SciELO and MEDLINE indexing contributed to increased manuscript submission to Jornal de Pediatria. SciELO indexing was associated with an increase in Brazilian submissions, whereas MEDLINE indexing was associated with an increase in both Brazilian and international submissions.

Jornal de Pediatria, Brazilian Society of Pediatrics, Rua Gen Jacinto Osorio 150/201, Porto Alegre, RS, CEP 90040-290, Brazil, e-mail: blank@ufrgs.br

Table 5. Jornal de Pediatria: Mean Monthly Submissions per Analysis Stage, 2000 through 2004

Abbreviations: CI, confidence interval; SciELO, Scientific Electronic Library Online.

Effect of Open Access on Citation Rates for a Small Biomedical Journal

Dev Kumar R. Sahu, Nithya J. Gogtay, and Sandeep B. Bavdekar

Objective

Articles published in print journals with limited circulation are cited less frequently than those printed in journals with larger circulations. Open access has been shown to improve citation rates in the fields of physics, mathematics, and astronomy. The impact of open access on smaller biomedical journals has not been studied. We assessed the influence of open access on citation rates for the Journal of Postgraduate Medicine, a small, multidisciplinary journal that adopted open access without charging article-submission or article-access fees.

Design

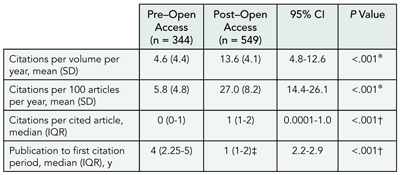

The full text of articles published since 1990 was made available online in 2001. Citations for these articles, as retrieved using Web of Science, SCOPUS, and Google Scholar, were divided into 2 groups: the pre–open-access period (1990-2000) and the post–open-access period (2001-2004). Citations during these 2 periods for the articles published from 1990 through 1999 were compared using the unpaired t test or Mann-Whitney test.

Results

Of the 553 articles published from 1990 through 1999, 327 articles received 893 citations between 1990 and 2004 (TABLE 6). The 4-year, post–open-access period accounted for 549 (61.5%) of these citations, and 164 articles (50.1%) received their first citations only after open access was provided in 2001. For every volume studied (1990 through 1999), the maximum number of citations per year was received after 2001. None of the articles published during 1990 through 1999 received any citation in the year of publication. In contrast, articles published in 2002, 2003, and 2004 received 3, 7, and 22 citations, respectively, in the year of publication itself.

Conclusions

Open access was associated with an increase in the number of citations received by the articles. It also decreased the lag time between publication and the first citation. For smaller biomedical journals, open access could be one of the means for improving visibility and thus citation rates.

Journal of Postgraduate Medicine, 12, Manisha Plaza, M. N. Rd, Kurla (W), Mumbai 400070, India, e-mail: dksahu@vsnl.com

Table 6. Citations to Articles Published Before and After Open Access to the Small Biomedical Journal Was Provided in 2001

Abbreviations: CI, confidence interval; IQR, interquartile range.

*By unpaired t test. †By Mann-Whitney test. ‡Time from open access to first citation for articles first cited after 2001.

More Than a Decade in the Life of the Impact Factor

Mabel Chew,1 Martin Van Der Weyden,1 and Elmer V. Villanueva2

Objective

To analyze trends in the impact factors of 7 general medical journals (Ann Intern Med, BMJ, CMAJ, JAMA, Lancet, Med J Aust, and N Engl J Med) over 11 years and to ascertain the views of these journals’ past and present editors-in-chief regarding major influences on their journals’ impact factors.

Design

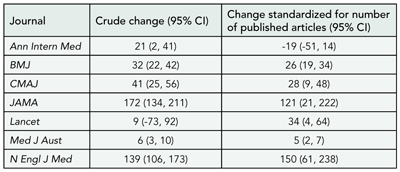

Retrospective analysis of impact factor data from Institute for Scientific Information (ISI) Journal Citation Reports, Science Edition, 1994 to 2004, and interviews with the 10 editors-in-chief of these journals (except Med J Aust) who had served between 1999 and 2004, conducted from November 2004 to February 2005.

Results

Impact factors generally rose over the 11-year period. However, the relative changes in impact factors (averaged over 11 years) ranged from 6% to 172% per year. The impact factor is calculated yearly by dividing the number of citations that year to any article published by that journal in the previous 2 years (the numerator) by the number of eligible articles published by that journal in the previous 2 years (the denominator). In general, the numerators for most journals tended to rise over this period, while the denominators tended to drop. When standardized for the denominator, the average relative changes fell naturally into 2 groups: those that remained constant (ie, increases in crude impact factors were due largely to rises in numerators) and those that did not (ie, increases in crude impact factors were due largely to falls in denominators) (TABLE 7). Nine of 10 editors-in-chief were contactable, and all agreed to be interviewed. Possible reasons given for rises in citation counts included active recruitment of “high-impact” articles by courting researchers, the inclusion of more journals in the ISI database, and increases in citations in articles. Boosting the journal’s media profile was also thought to attract first-class authors and, therefore, “citable” articles. Many believed that going online had not made a difference to citations. Most had no deliberate policy to publish fewer articles (thus altering the impact factor denominator); publishing fewer articles was sometimes the unintended result of redesign, of publication of longer research articles (ie, fewer could be “fit” in each issue), or of editors being “choosier.” However, 2 editors did have such a policy, as they realized impact factors were important to authors. Concerns about the accuracy of ISI counting for the impact factor denominator prompted a few editors to routinely check their impact factor data with ISI.
All had mixed feelings about using impact factors to measure journal quality, particularly in academic culture, and mentioned the tension between aiming to improve impact factors and “keeping their constituents [clinicians] happy.”
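The impact factor definition given in the Results is a simple ratio, so a change in it can be split into a part driven by the numerator and a part driven by the denominator. The Python sketch below illustrates this with hypothetical counts; the function names are illustrative, and the log-ratio decomposition is one convenient way to separate the 2 effects, not necessarily the standardization the authors applied.

```python
import math


def impact_factor(citations: int, citable_items: int) -> float:
    """Citations in year Y to any items the journal published in Y-1 and Y-2,
    divided by the number of eligible (citable) items published in Y-1 and Y-2."""
    if citable_items <= 0:
        raise ValueError("denominator must be positive")
    return citations / citable_items


def decompose_change(num0: int, den0: int, num1: int, den1: int):
    """Split log(IF1 / IF0) into a numerator part and a denominator part.

    Because IF = num / den, log(IF1 / IF0) = log(num1 / num0) - log(den1 / den0),
    so the two parts add up exactly to the total.
    """
    total = math.log(impact_factor(num1, den1) / impact_factor(num0, den0))
    from_numerator = math.log(num1 / num0)
    from_denominator = -math.log(den1 / den0)
    return total, from_numerator, from_denominator


# Hypothetical journal: citations rise by 10% while citable items fall by 20%
if0 = impact_factor(10_000, 1_000)  # 10.0
if1 = impact_factor(11_000, 800)    # 13.75
total, num_part, den_part = decompose_change(10_000, 1_000, 11_000, 800)
print(f"IF {if0:.2f} -> {if1:.2f}; log change {total:.3f} "
      f"= numerator {num_part:.3f} + denominator {den_part:.3f}")
```

In this hypothetical case, most of the rise comes from the shrinking denominator rather than from additional citations.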

Conclusions

Impact factors of the journals studied rose in the 11-year period due to rising numerators and/or falling denominators, to varying extents. This phenomenon was perceived by editors-in-chief to occur for various reasons, sometimes including editorial policy. However, all considered the impact factor a mixed blessing—attractive to researchers but not the best measure of clinical impact.

1Medical Journal of Australia, Locked Bag 3030, Strawberry Hills, NSW 2012, Australia, e-mail: mabel@ampco.com.au; 2National Breast Cancer Centre, Camperdown, NSW 2050, Australia

Table 7. Average Annual Relative Changes (%) in Impact Factors for 7 Journals

Abbreviation: CI, confidence interval.

Quality and Influence of Citations and Dissemination of Scientific Information to the Public

Use and Persistence of Internet Citations in Scientific Publications: An Automated 5-Year Case Study of Dermatology Journals

Kathryn R. Johnson,1 Jonathan D. Wren,2 Lauren F. Heilig,1 Eric J. Hester,1 David M. Crockett,1 Lisa M. Schilling,1 Jennifer M. Myers,3 Shayla Orton Francis,4 and Robert P. Dellavalle1,5

Objective

To examine Internet address and uniform resource locator (URL) citation characteristics in medical subspecialty literature using dermatology journals as a case study.

Design

An automated computer program systematically extracted and analyzed Internet addresses cited in articles published between January 1999 and September 2004 in the 3 dermatology journals rated highest for scientific impact: Journal of Investigative Dermatology, Archives of Dermatology, and Journal of the American Academy of Dermatology. Corresponding authors of articles with inaccessible URLs were contacted regarding the content and importance of the inaccessible information.
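The abstract does not describe how the automated program worked. As a rough illustration only, the Python sketch below extracts URLs from article text and derives 3 of the features examined in the Results (top-level domain, directory depth, and presence of a tilde). The regular expression, the function names, and the definition of directory depth as the number of nonempty path segments are all assumptions; checking whether each URL is still accessible, which would require network requests, is omitted.

```python
import re
from urllib.parse import urlparse

# Assumed pattern: http(s) addresses ending at whitespace, quotes, brackets, or angle brackets
URL_PATTERN = re.compile(r"""https?://[^\s<>"')\]]+""", re.IGNORECASE)


def extract_urls(text: str) -> list[str]:
    """Find URL citations in free text, dropping trailing sentence punctuation."""
    return [u.rstrip(".,;:") for u in URL_PATTERN.findall(text)]


def url_features(url: str) -> dict:
    """Derive simple features that could be tested against URL accessibility."""
    parsed = urlparse(url)
    host = parsed.hostname or ""
    segments = [s for s in parsed.path.split("/") if s]
    return {
        "top_level_domain": host.rsplit(".", 1)[-1] if "." in host else host,
        "directory_depth": len(segments),  # assumption: count of path segments
        "has_tilde": "~" in url,
    }


text = "See http://www.example.edu/~smith/data/table1.html. Also https://archive.org"
for url in extract_urls(text):
    print(url, url_features(url))
```

The example addresses are placeholders; in a real study the extracted list would also be requested over the network to classify each URL as accessible or not.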

Results

Overall, 7337 journal articles contained 1113 URL citations, of which 18% were inaccessible. The percentage of articles containing at least 1 Internet citation increased from 2.3% in 1999 to 13.5% in 2004. Internet citation inactivity also increased significantly with time since publication, from 11% of those published in 2004 to 35% in 1999 (P < .001). The URL inaccessibility was highest in the Journal of Investigative Dermatology (22%) and lowest in Archives of Dermatology (15%) (P = .03). Archives of Dermatology was the only journal of the 3 examined with a stated Internet referencing policy in the instructions for authors. For all years, URL accessibility was significantly associated with top-level domain and directory depth but not associated with the presence of an accession date or a tilde in the URL. Of the 204 inaccessible URLs, at least some content was recoverable for 59% via the Internet Archive (http://www.archive.org). Thirty-nine of 100 randomly selected citations included accession dates. Results from surveying authors regarding inaccessible URL content and importance will be reported.

Conclusions

The increasing use and loss of Internet citations in dermatology journals likely reflect URL use and loss across all medical subspecialty literature. Policy changes are needed to stem the loss of cited Internet information in scientific publications.

Table 8. Reviewers’ Verdicts on Manuscripts According to Citations to Their Own Work: Analysis of 1641 Reviewer Reports on 637 Manuscripts Submitted to the International Journal of Epidemiology

1Department of Dermatology, University of Colorado at Denver and Health Sciences Center (UCDHSC), 1055 Clermont St, Box 165, Aurora, CO 80045, USA, e-mail: robert.dellavalle@uchsc.edu; 2University of Oklahoma, Norman, OK, USA; 3Louisiana State University School of Medicine, New Orleans, LA, USA; 4UCDHSC School of Medicine, Aurora, CO, USA; 5Dermatology Service, Department of Veterans Affairs Medical Center, Denver, CO, USA

Are Reviewers Influenced by Citations of Their Own Work? Evidence From the International Journal of Epidemiology

Matthias Egger,1,2 Lesley Wood,1 Erik von Elm,2 Anthony Wood,1 Yoav Ben Shlomo,1 and Margaret May1

Objective

To examine whether reviewers’ assessments of manuscripts are influenced by citations to their own work.

Design

The International Journal of Epidemiology receives about 700 manuscripts each year, of which about 30% are sent out to reviewers. Reviewers are asked to make a decision using 4 categories (accept as is, minor revision, major revision, and reject). Using a standardized proforma, we abstracted data to examine whether reviewers' decisions were influenced by the number of citations to their own work or by other manuscript characteristics. Data were analyzed with logistic regression, accounting for the clustered nature of the data. We included manuscripts refereed from 2003 to February 2005. The study is ongoing; the prespecified sample size is 800 manuscripts.

Results

We analyzed 1641 reviewer reports on 637 manuscripts. The median number of reviewers per manuscript was 2.6 (interquartile range [IQR], 2-3), and the median number of citations in manuscripts was 28 (IQR, 22-39). The work of 606 reviewers (37%) was cited. Among manuscripts citing at least 1 paper by a given reviewer, the median number of citations to that reviewer's papers was 1.8 (IQR, 1-2; range, 1-9). TABLE 8 shows the reviewers’ verdicts on manuscripts according to citations to their own work. The odds of rejecting a paper were lower when the manuscript cited the reviewer’s work: compared with manuscripts not citing the reviewer, odds ratios were 0.87 (95% confidence interval, 0.65-1.18) for manuscripts citing 1 of the reviewer's papers and 0.74 (95% confidence interval, 0.49-1.11) for manuscripts citing 2 or more (P = .11 for trend).
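The study estimated odds ratios with logistic regression that accounted for clustering of reviewer reports within manuscripts. For readers less familiar with the measure, the sketch below shows only the simpler unadjusted calculation: an odds ratio with a Woolf (log-based) 95% confidence interval from a 2×2 table. It ignores clustering and covariates, so it is not the authors' analysis, and the counts in the usage line are hypothetical rather than data from TABLE 8.

```python
import math


def odds_ratio_ci(a, b, c, d, z=1.96):
    """Unadjusted odds ratio and Woolf 95% CI for the 2x2 table [[a, b], [c, d]].

    Rows: exposed (eg, manuscript cites the reviewer) / unexposed.
    Columns: outcome (eg, reject) / no outcome. All cells must be > 0.
    """
    odds_ratio = (a * d) / (b * c)
    # Standard error of log(OR) by Woolf's method.
    se_log = math.sqrt(1 / a + 1 / b + 1 / c + 1 / d)
    lower = math.exp(math.log(odds_ratio) - z * se_log)
    upper = math.exp(math.log(odds_ratio) + z * se_log)
    return odds_ratio, lower, upper


# Hypothetical counts, for illustration only.
print(odds_ratio_ci(30, 70, 40, 60))
```

An interval that includes 1, as both intervals reported above do, is compatible with no difference in the odds of rejection.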

Conclusions

There may be truth in the old professor’s adage that you should cite the work of likely reviewers in your papers. Alternative explanations include chance and confounding by other factors. The final analysis, which will be based on a larger number of manuscripts and reviewer reports, will provide more robust evidence.

1Editorial Office, International Journal of Epidemiology, Department of Social Medicine, University of Bristol, UK; 2Department of Social and Preventive Medicine, University of Berne, Finkenhubelweg 11, CH-3012, Berne, Switzerland, e-mail: egger@ispm.unibe.ch

How the News Media Report on Research Presented at Scientific Meetings: More Caution Needed

Steven Woloshin and Lisa M. Schwartz

Objective

Scientific meeting presentations garner news media attention even though the underlying research is often preliminary and has undergone limited peer review. We examined whether media stories report basic study facts and caveats, specifically regarding the preliminary nature of the research.

Design

Three physicians with clinical epidemiology training analyzed front-page newspaper stories (n = 34), other newspaper stories (n = 140), and television/radio stories (n = 13) identified in a Lexis-Nexis/ProQuest search for research reports from 5 scientific meetings in 2003 (American Heart Association, 12th World AIDS Conference, American Society of Clinical Oncology, Society for Neuroscience, and the Radiological Society of North America).

Results

Basic facts were often missing: 34% of the 187 stories did not mention study size, 18% did not mention study design (when design was mentioned, 41% of descriptions were ambiguous enough that expert readers could not be certain about it), and 40% did not quantify the main result (when it was quantified, 35% of stories presented relative change statistics without a base rate, a format known to exaggerate the perceived magnitude of findings). Important study caveats were also often missing. Among the 124 stories covering studies other than randomized trials, 85% failed to mention, either explicitly or by implication, any relevant study limitation (eg, imprecision of small studies, no comparison group in case series, confounding in observational studies). Among the 61 stories reporting on animal studies, case series, or studies with fewer than 30 people, 67% did not highlight their limited relevance to human health. While 12 stories mentioned a corresponding “in press” medical journal article, only 3 of the remaining 175 noted that the findings were unpublished, might not have undergone peer review, or might change as the study matured.
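To illustrate why a relative change reported without a base rate can exaggerate perceived magnitude, the sketch below compares the same 50% relative risk reduction at 2 hypothetical baseline risks. The function name and the risk values are illustrative assumptions, not figures from the stories analyzed.

```python
def risk_summary(control_risk, treated_risk):
    """Relative risk reduction, absolute risk reduction, and number needed to treat."""
    arr = control_risk - treated_risk  # absolute risk reduction
    rrr = arr / control_risk  # relative risk reduction
    nnt = 1 / arr if arr > 0 else float("inf")  # number needed to treat
    return rrr, arr, nnt


# Hypothetical baseline risks: both scenarios halve the risk.
for control, treated in [(0.02, 0.01), (0.40, 0.20)]:
    rrr, arr, nnt = risk_summary(control, treated)
    print(
        f"{control:.0%} -> {treated:.0%}: RRR {rrr:.0%}, "
        f"ARR {arr * 100:.1f} percentage points, NNT {nnt:.0f}"
    )
```

Both scenarios would be reported as "cuts risk in half", yet the absolute benefit differs 20-fold, which is the information a base rate supplies.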

Conclusions

News stories about scientific meeting research presentations often omit basic study facts and caveats. Consequently, the public may be misled about the validity and relevance of the science presented.

VA Outcomes Group (111B), Department of Veterans Affairs Medical Center, White River Junction, VT 05009, USA, e-mail: steven.woloshin@dartmouth.edu

Reading Between the Ads: Assessing the Quality of Health Articles in Top Magazines

Brad Hussey,1 Diane Miller,2 and Alejandro R. Jadad3

Objective

This research examines the quality of health-related reporting published in the top 100 US consumer magazines, using a validated index of the quality of written consumer health information.

Design

The authors purchased and reviewed the August 2003 issues of magazines likely to include health-related information from the Audit Bureau of Circulations top 100 magazine rankings, which are based on average paid circulation. Articles were included if they made overt claims about the effect of interventions of any kind on health outcomes; disagreements on article inclusion were settled by consensus. To ensure consistency, the first eligible article in each section of each publication was reviewed. All 3 authors evaluated each article individually using the 7 questions of the DISCERN evaluation tool that address factual information, answering each question in terms of whether the article met or did not meet specific criteria. Consensus was reached for all answers.

Results

Thirty-two magazines, with a combined total of more than 100 million subscribers, met the inclusion criteria, and 57 articles were deemed eligible for review. Only 2% of the articles dated the source of information quoted, 7% provided references for each claim made, and 14% gave additional contact information for sources. Most articles failed to acknowledge uncertainty about the interventions (74%), the risks associated with the interventions (74%), or other possible treatment choices (56%).

Conclusions

Health articles in top consumer magazines fail to meet basic quality criteria and could mislead the public. Publishers of consumer magazines are missing an opportunity to improve the public's currently low level of functional health literacy at little or no cost. Peer-reviewed biomedical publications have an opportunity to support the lay press and help define standards for it, thereby protecting the public.

1Dundas, Ontario, Canada; 2Ontario Hospital Association, Toronto, Ontario, Canada; 3Centre for Global eHealth Innovation, University Health Network and University of Toronto, R. Fraser Elliott Building, 4th Floor, 190 Elizabeth St, Toronto, Ontario M5G 2C4, Canada, e-mail: ajadad@uhnres.utoronto.ca

SUNDAY, SEPTEMBER 18

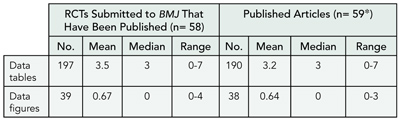

Reporting of Studies: Abstracts and Publication After Meeting Presentations

Trials Reported in Abstracts: The Need for a Mini-CONSORT

Sally Hopewell and Mike Clarke

Objective

To assess the need for a better reporting standard (such as a mini-CONSORT) for trials reported in abstracts.

Design

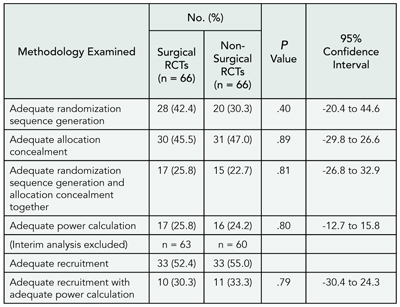

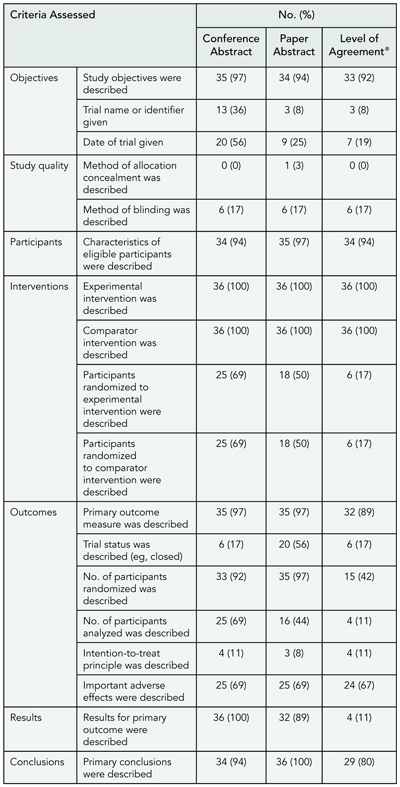

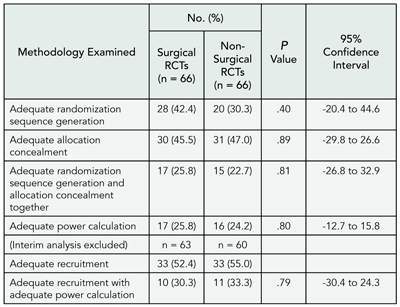

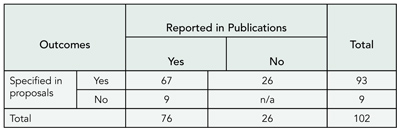

A total of 209 randomized trials were identified from the proceedings of the 1992 American Society of Clinical Oncology conference. Full publications were identified for 125 trials by searching the Cochrane Central Register of Controlled Trials and PubMed (median time to publication, 27 months; interquartile range, 15-43 months). A sample of 40 trials within a specific area of cancer was selected; 4 trials were later excluded. A checklist based on CONSORT was used to compare the information reported in the 36 conference abstracts with that reported in the abstracts of the corresponding full publications. Steps were taken to blind assessors to the source of each abstract.

Results

Some aspects of trials were well reported in both the conference abstract and the full publication abstract: 92% (n = 33) of study objectives, 94% (n = 34) of participant eligibility criteria, 100% (n = 36) of trial interventions, and 89% (n = 32) of primary outcomes were the same in both abstracts (TABLE 9). Other areas were more discrepant: 92% (n = 33) of conference abstracts and 97% (n = 35) of full publication abstracts reported the number of participants randomized, but these numbers were the same only 42% (n = 15) of the time. Likewise, 69% (n = 25) of conference abstracts and 44% (n = 16) of full publication abstracts reported the number of participants analyzed, and these numbers were the same only 11% (n = 4) of the time. This is partly because the conference abstracts were often preliminary reports. Lack of information was a major problem in assessing trial quality: none of the conference abstracts and only 3% (n = 1) of full publication abstracts reported the method of allocation concealment, and only 11% (n = 4) of conference abstracts and 8% (n = 3) of full publication abstracts reported intention-to-treat analyses.
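Because the agreement percentages in this abstract all share a denominator of 36 trials, they can be checked directly against the reported counts. The short Python check below reproduces the rounded agreement percentages; the dictionary labels are ours, and the counts are those given in the Results.

```python
N_TRIALS = 36  # conference abstracts compared with full-publication abstracts

# Counts reported above for items that were the same in both abstracts.
same_in_both = {
    "objectives": 33,
    "participant eligibility": 34,
    "interventions": 36,
    "primary outcomes": 32,
    "number randomized": 15,
    "number analyzed": 4,
}


def percent(count, total=N_TRIALS):
    """Percentage rounded to the nearest whole number."""
    return round(100 * count / total)


for item, count in same_in_both.items():
    print(f"{item}: {count}/{N_TRIALS} = {percent(count)}%")
```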

Conclusions

Previous research has shown that trials presented as conference abstracts are poorly reported. However, this study suggests that conference abstracts may contain as much useful information as the abstract of a full publication, or more. The quality of reporting of trials in abstracts, both in the proceedings of scientific meetings and in journals, needs to be improved because the abstract may be the only information available to someone appraising a trial.

Table 9. Reporting Criteria Assessed for Trials Reported in Abstracts

*Percentage of agreement between what was reported in the conference abstract and in the abstract of the full publication.

The UK Cochrane Centre, Summertown Pavilion, Middle Way, Oxford, OX2 7LG, UK, e-mail: shopewell@cochrane.co.uk

Are Relative Risks and Odds Ratios in Abstracts Believable?

Peter C. Gøtzsche

Objective

An abstract should give the reader a quick and reliable overview of the study. I studied the prevalence of significant P values in abstracts of randomized controlled trials and observational studies that reported relative risks or odds ratios and checked whether reported P values in the interval .04 to .06 were correct.

Design

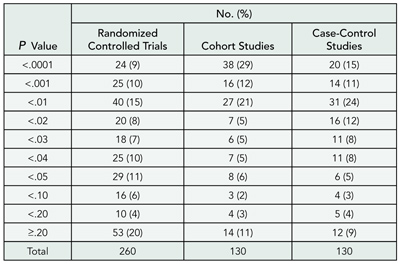

I searched MEDLINE and included all abstracts of articles published in 2003 that contained the words “relative risk” or “odds ratio” and appeared to report results from a randomized controlled trial (N = 260). For comparison, random samples of 130 abstracts from cohort studies and 130 abstracts from case-control studies were selected. I noted the P value for the first relative risk or odds ratio that was mentioned, or calculated it if it was not reported.
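The abstract does not state how P values were calculated when they were not reported. One common approach, assumed here purely for illustration, back-calculates an approximate P value from a ratio measure and its 95% confidence interval on the log scale, where the interval is assumed to be symmetric. The function name `p_from_ratio_ci` is hypothetical.

```python
import math


def p_from_ratio_ci(estimate, lower, upper, z_crit=1.96):
    """Approximate two-sided P value from a ratio (RR or OR) and its 95% CI.

    Assumes the CI was computed symmetrically on the log scale
    (log estimate +/- z_crit * SE), which is typical but not guaranteed.
    """
    se = (math.log(upper) - math.log(lower)) / (2 * z_crit)
    z = math.log(estimate) / se
    # Two-sided normal tail probability: 2 * (1 - Phi(|z|)) = erfc(|z| / sqrt(2)).
    return math.erfc(abs(z) / math.sqrt(2))


# A ratio whose CI lower bound just touches 1 should give P close to .05.
print(round(p_from_ratio_ci(math.exp(0.98), 1.0, math.exp(1.96)), 3))
```

The approximation degrades when the reported interval is rounded heavily or was not computed on the log scale, so results near .05 deserve a check against the raw counts where they are available.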

Results

The first result in the abstract was reported to be statistically significant in 70% of randomized controlled trials, 84% of cohort studies, and 84% of case-control studies (TABLE 10). Many of these significant results were derived from subgroup or secondary analyses. P values were more extreme in observational studies than in trials (P < .001), and more extreme in cohort studies than in case-control studies (P = .04, Mann-Whitney test). The distribution of P values around P = .05 was skewed for randomized controlled trials: P values ranged from .04 to .05 for 29 trials but from .05 to .06 for only 5 trials. I could verify the calculations for 23 and 4 of these trials, respectively. The 4 nonsignificant results were all correct, whereas 17 of the 23 significant results (74%) appeared to be wrong (11 of the 17 were easy to verify because the authors used the Fisher exact test, and 6 were highly doubtful). Recalculation was rarely possible for observational studies because the presented results had been adjusted for confounders.
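Results from the Fisher exact test are easy to verify because the test needs only the 4 cell counts of a 2×2 table. The sketch below is a generic, standard-library implementation of the two-sided test, which sums the probabilities of all tables with the same margins that are no more likely than the observed one; it is not the author's code, and the example table is hypothetical. For cross-checking, SciPy provides `scipy.stats.fisher_exact`.

```python
from math import comb


def fisher_exact_two_sided(a, b, c, d):
    """Two-sided Fisher exact P value for the 2x2 table [[a, b], [c, d]].

    Conditions on the row and column totals and sums the hypergeometric
    probabilities of all tables at least as unlikely as the observed one.
    """
    row1 = a + b  # total of the first row
    col1 = a + c  # total of the first column
    n = a + b + c + d  # grand total
    denominator = comb(n, col1)

    def table_prob(x):
        # Probability that the top-left cell equals x, given fixed margins.
        return comb(row1, x) * comb(n - row1, col1 - x) / denominator

    p_observed = table_prob(a)
    # Feasible values of the top-left cell under the fixed margins.
    x_min = max(0, col1 - (n - row1))
    x_max = min(row1, col1)
    # A small relative tolerance treats near-equal probabilities as ties.
    p_value = sum(
        table_prob(x)
        for x in range(x_min, x_max + 1)
        if table_prob(x) <= p_observed * (1 + 1e-7)
    )
    return min(1.0, p_value)


# Hypothetical table: events / non-events in 2 arms of 4 participants each.
print(round(fisher_exact_two_sided(3, 1, 1, 3), 4))
```

Because the test is exact, a reported P value that disagrees with this calculation for the published counts points to an error rather than to a difference in approximation.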

Conclusions

Significant results in abstracts are exceedingly common but are often misleading because of erroneous calculations and, probably, repeated trawling of the data.

Table 10. Reporting of P Values in Abstracts of Studies

The Nordic Cochrane Centre, Rigshospitalet, Department 7112, Blegdamsvej 9, DK-2100 Copenhagen Ø, Denmark, e-mail: pcg@cochrane.dk

Do Clinical Trials Get Published After Presentation at Biomedical Meetings? A Systematic Review of Follow-up Studies

Erik von Elm1 and Roberta Scherer2

Objective

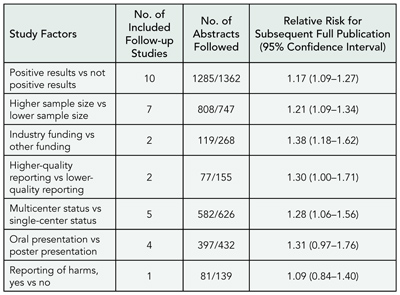

To determine the rate at which abstracts describing results of randomized or controlled clinical trials are subsequently published in full and to investigate study factors associated with full publication.

Design