Abstract

Use of an Artificial Intelligence–Based Tool for Detecting Image Duplication Prior to Manuscript Acceptance

Daniel S. Evanko1

Objective

Image reuse is a common threat to the integrity of image data reported in scientific articles. The community expends considerable resources trying to detect, communicate, and respond to potential image reuse. These efforts are labor-intensive and often take place after publication, when resolution is difficult and time-consuming. The study objective was to evaluate the use of an artificial intelligence–based tool to identify potential image reuse before acceptance, so that problems can be addressed at that stage.

Design

This cross-sectional study was designed to systematically identify and act on instances of image reuse in manuscripts submitted to 9 journals published by the American Association for Cancer Research. A machine-assisted process was implemented to detect potential duplication among the images within a manuscript. Prior to issuing a provisional acceptance decision, an internal editor selected original research manuscripts containing images susceptible to duplication and detection and uploaded them to a commercial tool (Proofig). The tool’s assessment was followed by editor evaluation and refinement of the results, after which potentially problematic image duplications were communicated to the authors using a standard report generated by the tool. Author responses and the outcomes of these queries were recorded in a tracking sheet using a standardized classification system.

Results

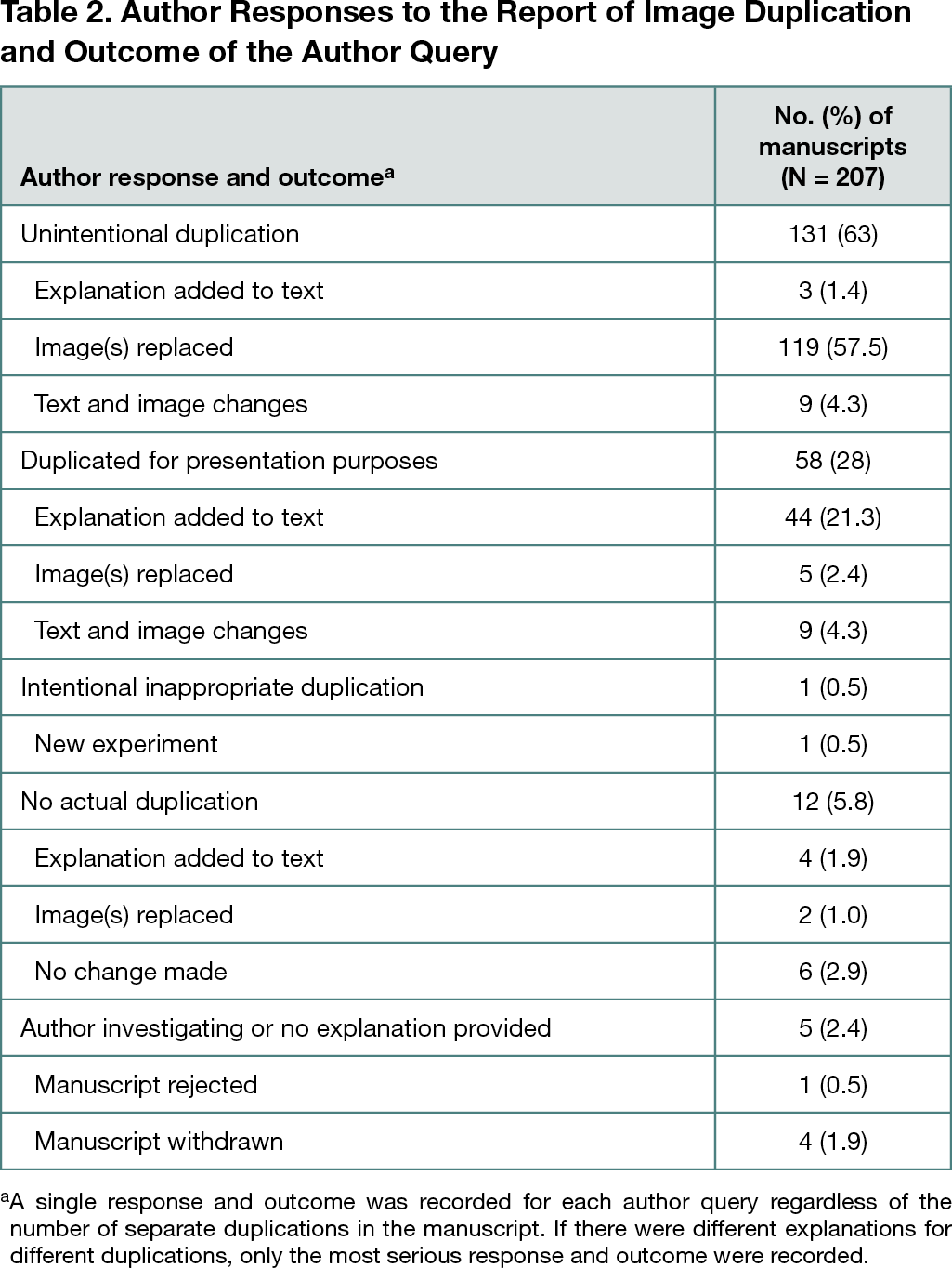

From January 2021 through May 2022, a total of 207 image duplication queries were sent to authors for their response. This represented 9.3% of the 2220 original research manuscripts that reached this editorial stage and 15% of the 1367 manuscripts selected for analysis. The number of duplicates per manuscript was distributed as follows: 104 (50%) with 1 duplicate, 46 (22%) with 2 duplicates, 19 (9%) with 3 duplicates, 28 (14%) with 4 to 10 duplicates, and 11 (5%) with 11 or more duplicates. Responses from authors indicated that 63% (n = 131) of duplications were unintentional, for example, from general image mishandling, and 28% (n = 58) were intentional duplications for presentation purposes (see Table 2 for details). In 2% (n = 5) of cases the author said they were investigating or provided no explanation; these manuscripts were withdrawn or rejected. In all other cases, changes were made to address the duplication(s). In only 12 cases did the potential duplication turn out not to be a duplication. To compare the time required to identify seemingly real duplications for communication to authors, 5 editors analyzed 27 manuscripts first purely manually and then using the tool followed by manual evaluation and refinement. The mean time per manuscript was 4.4 minutes for tool-assisted analysis (11 duplications identified in 8 manuscripts) vs 8 minutes for manual analysis (5 duplications identified in 3 manuscripts).
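The headline rates in this section can be rechecked with a few lines of arithmetic. The following is a minimal sketch, not part of the study's workflow: the counts are taken from the text above, while the variable names and the `pct` helper are illustrative assumptions.

```python
def pct(part: int, whole: int) -> float:
    """Return part/whole as a percentage rounded to one decimal place."""
    return round(100 * part / whole, 1)

# Counts reported in the Results text
queries_sent = 207        # image duplication queries sent to authors
reached_stage = 2220      # original research manuscripts reaching this stage
selected = 1367           # manuscripts selected for analysis with the tool
not_duplicates = 12       # queries that turned out not to be duplications

query_rate_all = pct(queries_sent, reached_stage)   # reported as 9.3%
query_rate_selected = pct(queries_sent, selected)   # reported as 15%

# Confirmed duplications: queries minus those found not to be duplications
confirmed = queries_sent - not_duplicates
confirmed_rate = pct(confirmed, selected)           # reported as 14%

# Timing comparison: mean minutes per manuscript
manual_minutes = 8.0
assisted_minutes = 4.4
speedup = round(manual_minutes / assisted_minutes, 2)

print(query_rate_all, query_rate_selected, confirmed, confirmed_rate, speedup)
```

Under these assumptions, the 195 confirmed duplications follow from 207 queries minus the 12 that were not duplications, and the manual review took a little under twice as long per manuscript as the tool-assisted review.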

Conclusions

The use of this artificial intelligence–based tool effectively identified real duplications between and within figures in 14% (195/1367) of manuscripts intended for acceptance that contained images susceptible to duplication and detection. This allowed these problems to be addressed prior to publication with minimal manual effort.

1American Association for Cancer Research, Philadelphia, PA, USA, daniel.evanko@aacr.org

Conflict of Interest Disclosures

Daniel S. Evanko is a member of the Peer Review Congress Advisory Board but was not involved in the review or decision for this abstract.