The Gap Between Reviewers’ Recommendations and Editorial Decisions in a Medical Education Journal

Abstract

José J. Naveja,1,2 Daniel Morales-Castillo,1 Teresa Fortoul,1 Melchor Sánchez-Mendiola,1 Carlos Gutiérrez-Cirlos1

Objective

To assess the items of a reviewer questionnaire and identify critical points that might be associated with final editorial decisions on manuscripts. Peer reviewers’ input usually takes the form of a series of free-format suggestions about the manuscript under consideration.1 Journals typically also ask reviewers a few questions about the overall quality of the work.2 The validity evidence and psychometric properties of this type of instrument in the editorial process are not commonly evaluated; the goal of this study was to assess them in the Mexican journal Investigación en Educación Médica.

Design

In this cohort and instrument analysis, a 15-item questionnaire with dichotomous responses was used during the journal’s peer review process. The items referred to the manuscript subsections: abstract (1 item), introduction (3 items), methods (2 items), results (1 item), discussion and/or conclusions (5 items), and general evaluation (3 items). Questionnaires for manuscripts that completed peer review from January 2020 to December 2021 were analyzed. Reliability was measured with Cronbach α, and an item response theory (IRT) model was fitted to identify the items that best discriminated publishable manuscripts.
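For k dichotomous items, Cronbach α compares the sum of the item variances with the variance of the total score: α = k/(k − 1) · (1 − Σσ²ᵢ / σ²ₓ). The Python sketch below illustrates that formula only; it is not the authors’ analysis code. It assumes complete responses stored as one 0/1 row per reviewer report (the function name, data layout, and demo values are hypothetical).

```python
from statistics import variance


def cronbach_alpha(responses: list[list[int]]) -> float:
    """Cronbach's alpha for a reports-by-items matrix of 0/1 answers.

    Each row is one reviewer report and each column one questionnaire item
    (1 = item marked true, 0 = marked false). This sketch assumes complete
    data: rows with missing answers must be dropped or imputed beforehand.
    """
    k = len(responses[0])  # number of items
    if k < 2:
        raise ValueError("alpha needs at least two items")
    # Sample variance (n - 1 denominator) of each item across reports
    item_vars = [variance([row[j] for row in responses]) for j in range(k)]
    # Sample variance of the total (sum) score across reports
    total_var = variance([sum(row) for row in responses])
    if total_var == 0:
        raise ValueError("total scores do not vary; alpha is undefined")
    return (k / (k - 1)) * (1 - sum(item_vars) / total_var)


if __name__ == "__main__":
    # Four hypothetical reports answering three hypothetical items
    demo = [
        [1, 1, 1],
        [1, 1, 0],
        [1, 0, 0],
        [0, 0, 0],
    ]
    print(f"alpha = {cronbach_alpha(demo):.2f}")
```

With these made-up answers the item variances sum to half the total-score variance, so the script prints alpha = 0.75. Using the same variance denominator for items and totals matters; the choice of n − 1 versus n then cancels in the ratio.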

Results

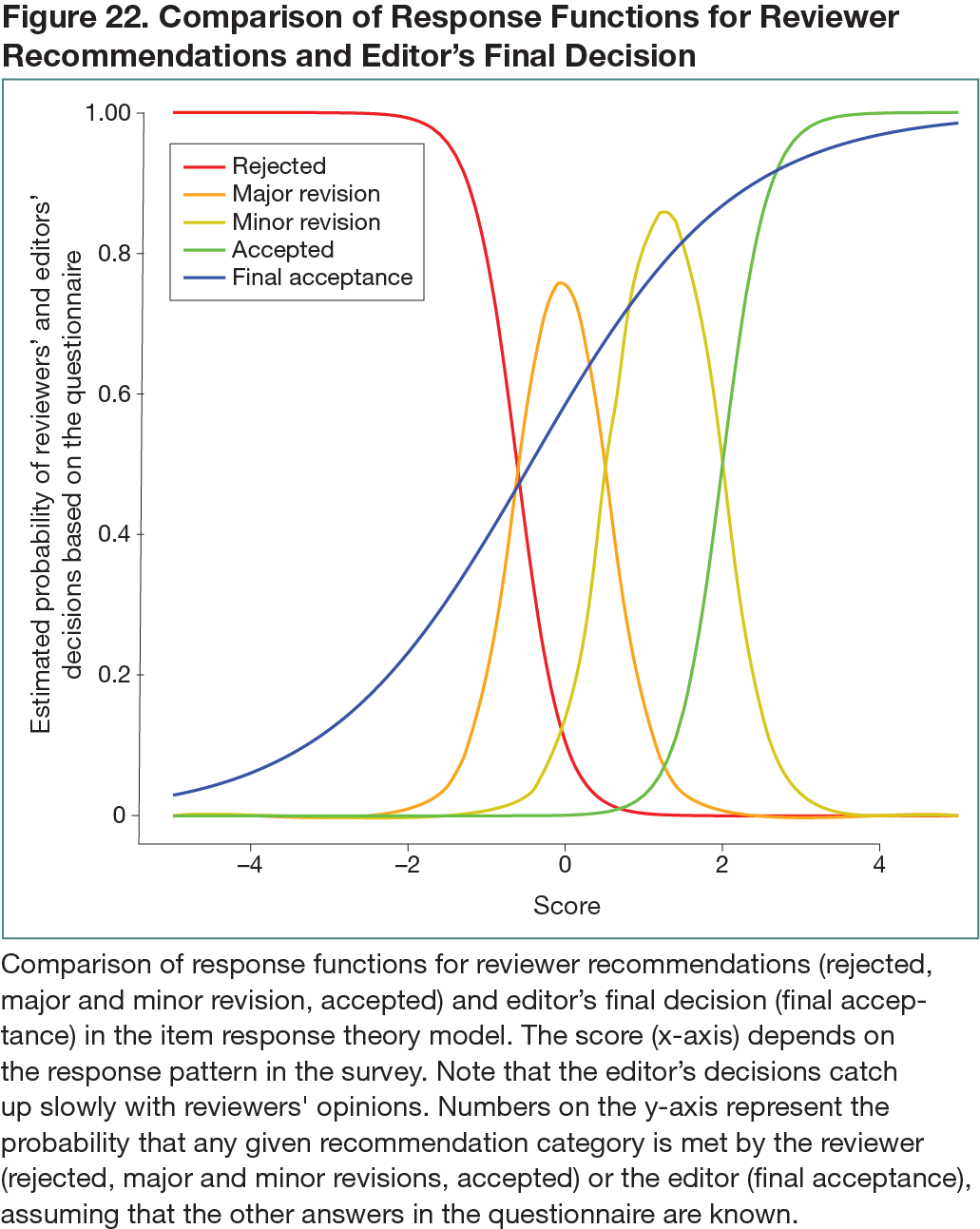

A total of 169 reviewer reports were collected from 85 manuscripts that underwent peer review and received a final editorial decision (mean, 1.99 reviews per manuscript). Missing data were found in 2.7% of the responses. Cronbach α was 0.86 for the questionnaire including the reviewer’s final recommendation and 0.88 when the editor’s decision was included. A 2-parameter IRT model fitted the data well (root mean square error of approximation, 0.05; Tucker-Lewis index, 0.97), suggesting that the responses to the items were associated with a latent variable (ie, the reviewers’ overall impression of the manuscript). The model integrated all responses into a combined score, and when this score was low, some items were more likely than others to be marked false (Figure). The estimated item discrimination parameters varied, indicating that some items were more informative than others; the highest discrimination was observed for items related to the validity of inferences and conclusions. Instrument scores correlated highly with reviewers’ recommendations (r = 0.88), but this agreement translated poorly into editors’ final decisions: the discrimination parameter for the editors’ decisions was relatively small (α = 0.77).
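In a 2-parameter logistic (2PL) IRT model, each item j has a discrimination aⱼ and a difficulty bⱼ, and the probability that a reviewer marks it true given the latent impression θ is P = 1 / (1 + exp(−aⱼ(θ − bⱼ))). The Python sketch below only evaluates this model with made-up parameter values (not the study’s estimates) to show why an item with larger discrimination is more informative; fitting the model to real questionnaire data would normally be done with dedicated IRT software.

```python
import math


def p_true(theta: float, a: float, b: float) -> float:
    """2PL probability that an item is marked true (1) at latent level theta.

    a: discrimination (slope); b: difficulty (location on the theta scale).
    """
    return 1.0 / (1.0 + math.exp(-a * (theta - b)))


def item_information(theta: float, a: float, b: float) -> float:
    """Fisher information of a 2PL item at theta: a^2 * P * (1 - P)."""
    p = p_true(theta, a, b)
    return a * a * p * (1.0 - p)


# Hypothetical (a, b) parameters, NOT the estimates reported in the abstract
items = {
    "high-discrimination item": (2.5, 0.0),
    "low-discrimination item": (0.5, 0.0),
}

for name, (a, b) in items.items():
    low, high = p_true(-1.0, a, b), p_true(1.0, a, b)
    print(
        f"{name}: P(true) at theta=-1 is {low:.2f}, at theta=+1 is {high:.2f}, "
        f"peak information {item_information(b, a, b):.2f}"
    )
```

The steeper item separates manuscripts with low and high overall impressions much more sharply, and its information peak (reached at θ = b) is correspondingly higher; a low difficulty b shifts the curve so the item is marked false mainly when the overall impression is low.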

Conclusions

This study adds internal validity evidence for an instrument that gives editors an overview of each manuscript. The model transformed the questionnaire responses into a score that better captured the reviewers’ impressions. Discrepancies between reviewers and editors cannot be avoided,3 but the reviewers’ questionnaire is a useful starting point for reaching an editorial decision after peer review. Journals that include a brief questionnaire for assessing manuscripts can benefit from analyzing these responses; however, a general questionnaire cannot replace the specific comments written by reviewers when making the final editorial decision.

References

1. Sun M. Peer review comes under peer review. Science. 1989;244(4907):910-912. doi:10.1126/science.2727683

2. Parrish E. Peer review process. Perspect Psychiatr Care. 2021;57(1):7-8. doi:10.1111/ppc.12724

3. Snell L, Spencer J. Reviewers’ perceptions of the peer review process for a medical education journal. Med Educ. 2005;39(1):90-97. doi:10.1111/j.1365-2929.2004.02026.x

1Faculty of Medicine, National Autonomous University of México, Mexico City, Mexico, cirlos@hotmail.com; 2Johannes Gutenberg University, Mainz, Germany

Conflict of Interest Disclosures

None reported.

Additional Information

Melchor Sánchez-Mendiola is a co–corresponding author.