Abstract

Assessment of the Pros and Cons of Posting Preprints Online Before Submission to a Double-Anonymous Review Process in Computer Sciences

Charvi Rastogi,1 Ivan Stelmakh,1 Xinwei Shen,2 Marina Meila,3 Federico Echenique,4 Shuchi Chawla,5 Nihar B. Shah1

Objective

Whether authors should post preprints online before submitting to double-anonymous peer review is widely debated, both as a matter of policy and as a personal choice for authors.1,2 Authors in a disadvantaged group who post preprints online can gain visibility but may lose the benefits of double-anonymous review.1 This work informs the debate by quantifying (1) how frequently reviewers deliberately search for their assigned paper online and (2) the correlation between the visibility of preprints posted online and the prestige of the associated authors’ affiliations.

Design

Surveys were conducted at 2 top-tier computer science conferences that review full papers, are terminal publication venues, and are considered on par with journals: the 2021 Association for Computing Machinery’s Conference on Economics and Computation (EC) and the 2021 International Conference on Machine Learning (ICML). To address the first objective, an anonymized survey was conducted after the initial review period (by invitation and with validation to protect against spurious responses), with the following question sent to each reviewer: “During the review process, did you search for any of your assigned papers on the internet?” It was clarified that accidental discovery of a paper on the internet (eg, through searching for related works) did not count as a positive case for this question. To address the second objective, a list was compiled of papers that were submitted to ICML or EC and were found to be available online before review. Relevant reviewers were surveyed during the review process on whether they had seen some of these papers online outside of a reviewing context. The visibility of a paper was set to 1 if the reviewer responded yes; otherwise, it was set to 0. To quantify prestige, each paper was assigned a prestige metric based on the ranking of the authors’ affiliations in widely used world rankings such as QS (Quacquarelli Symonds) rankings and Computer Science rankings. Finally, a Kendall τ-b correlation coefficient was computed between papers’ visibility and prestige metrics.
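The final step above, a Kendall τ-b correlation between a binary visibility value and a prestige metric, can be sketched as follows. This is a minimal pure-Python illustration and not the authors’ analysis code: the function name `kendall_tau_b`, the binary coding of prestige, and all data values are assumptions made for the example.

```python
from math import sqrt


def kendall_tau_b(x, y):
    """Kendall tau-b between two equal-length sequences, with tie correction."""
    if len(x) != len(y):
        raise ValueError("x and y must have the same length")
    n = len(x)
    concordant = discordant = ties_x = ties_y = 0
    for i in range(n):
        for j in range(i + 1, n):
            dx = x[i] - x[j]
            dy = y[i] - y[j]
            if dx == 0:
                ties_x += 1  # pair tied in x (possibly also tied in y)
            if dy == 0:
                ties_y += 1  # pair tied in y (possibly also tied in x)
            if dx * dy > 0:
                concordant += 1
            elif dx * dy < 0:
                discordant += 1
    total_pairs = n * (n - 1) // 2
    denom = sqrt((total_pairs - ties_x) * (total_pairs - ties_y))
    if denom == 0:
        return float("nan")  # undefined when either variable is constant
    return (concordant - discordant) / denom


# Hypothetical data, NOT from the study: one entry per surveyed paper.
visibility = [1, 1, 0, 1, 0, 0]  # 1 = reviewer reported having seen it online
prestige = [1, 1, 1, 0, 0, 0]    # assumed coding: 1 = high-ranked affiliation

tau = kendall_tau_b(visibility, prestige)
print(round(tau, 3))  # 0.333
```

The τ-b variant is the natural choice here because both variables contain many tied values (visibility is binary), and τ-b corrects the denominator for pairs tied in either variable.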

Results

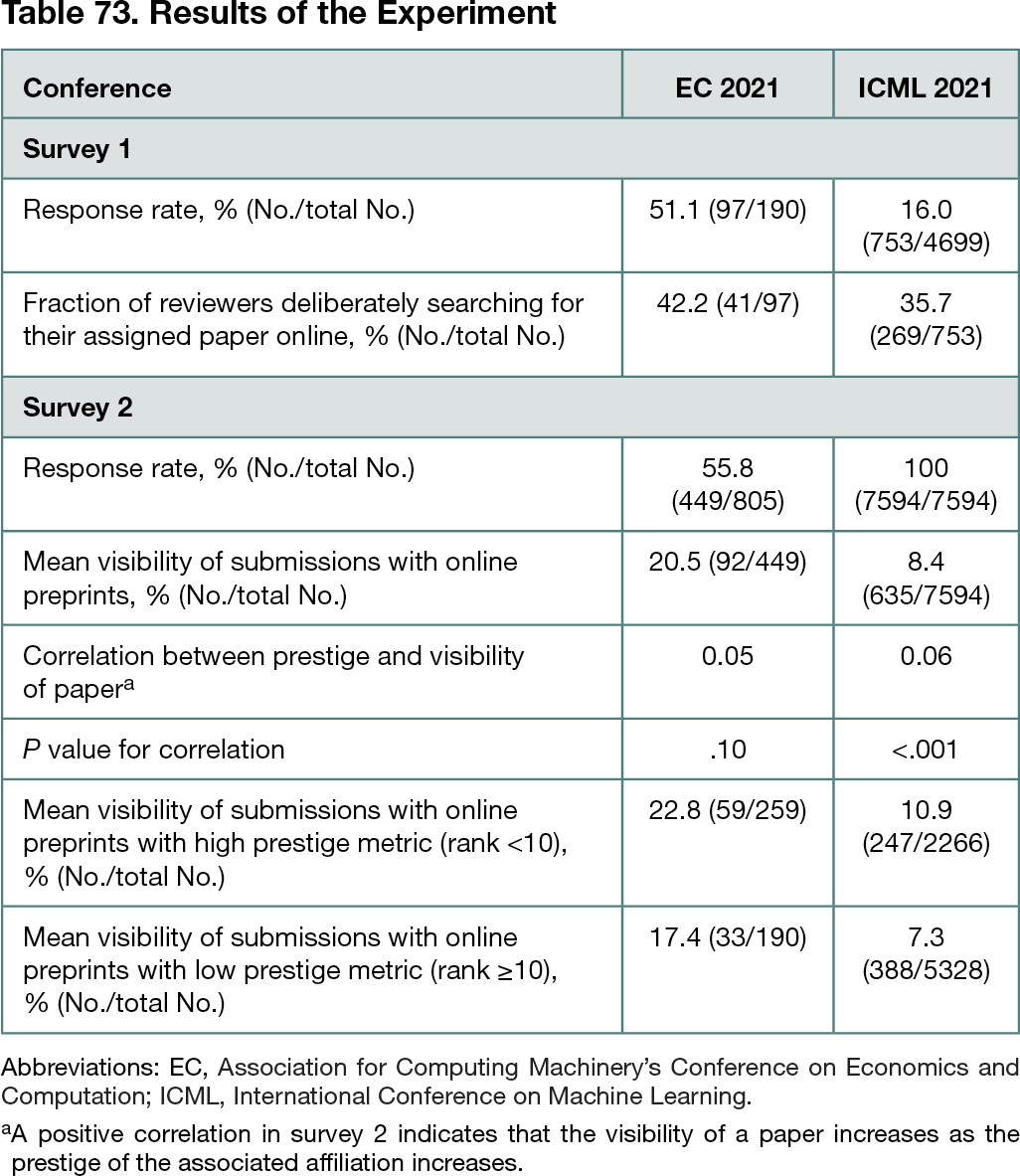

For the first objective, more than 35% of the survey respondents in ICML and EC self-reported searching online for their assigned paper. For the second objective, a weakly positive correlation was observed between the visibility of preprints posted online and their prestige metric; it was statistically significant in ICML but not in EC. To interpret the correlation, the mean visibility of papers with high prestige was compared with that of the remaining papers (Table 73). Because the results for the second objective were based only on papers that had been posted online as preprints, a further analysis was conducted to account for this fact: the correlation between papers’ prestige metric and final decision was computed for papers that were posted online and for papers that were not.
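The comparison of mean visibility between high-prestige papers and the remaining papers can be illustrated with a short sketch. The helper name `mean_visibility_by_group`, the boolean high-prestige flag, and the data are hypothetical and are not taken from the study.

```python
def mean_visibility_by_group(visibility, high_prestige):
    """Return (mean visibility of high-prestige papers, mean of the rest)."""
    high = [v for v, h in zip(visibility, high_prestige) if h]
    rest = [v for v, h in zip(visibility, high_prestige) if not h]
    if not high or not rest:
        raise ValueError("both groups must contain at least one paper")
    return sum(high) / len(high), sum(rest) / len(rest)


# Hypothetical data, NOT from the study.
visibility = [1, 1, 0, 1, 0, 0]  # 1 = reviewer reported having seen it online
high_prestige = [True, True, True, False, False, False]  # assumed grouping

mean_high, mean_rest = mean_visibility_by_group(visibility, high_prestige)
print(f"high prestige: {mean_high:.2f}, remaining: {mean_rest:.2f}")
```

Because visibility is coded 0 or 1, each group mean is simply the fraction of papers in that group that a reviewer reported having seen online, which makes the size of a weak correlation easier to interpret.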

Conclusions

Based on this work,3 peer review organizers and authors posting preprints online should account for the finding that a substantial fraction of reviewers searched for their assigned papers online in EC 2021 and ICML 2021.

References

1. Tomkins A, Zhang M, Heavlin WD. Reviewer bias in single- versus double-blind peer review. Proc Natl Acad Sci USA. 2017;114(48):12708-12713. doi:10.1073/pnas.1707323114

2. Blank RM. The effects of double-blind versus single-blind reviewing: experimental evidence from the American Economic Review. Am Econ Rev. 1991;81(5):1041-1067.

3. Rastogi C, Stelmakh I, Shen X, et al. To ArXiv or not to ArXiv: a study quantifying pros and cons of posting preprints online. arXiv. Preprint posted online March 31, 2022. Accessed July 13, 2022. https://arxiv.org/abs/2203.17259

1Carnegie Mellon University, Pittsburgh, PA, USA, crastogi@cs.cmu.edu; 2Hong Kong University of Science and Technology, Hong Kong; 3University of Washington, Seattle, WA, USA; 4California Institute of Technology, Pasadena, CA, USA; 5The University of Texas at Austin, Austin, TX, USA

Conflict of Interest Disclosures

Ivan Stelmakh reports a collaboration with Google Research through a summer research internship. Charvi Rastogi reports a collaboration with IBM Research New York through a summer research internship. These interests did not play any role in the submitted research.

Funding/Support

This work was supported in part by the US National Science Foundation (NSF) through NSF CAREER award 1942124, which supports research on the fundamentals of learning from people with applications to peer review. Marina Meila was supported by NSF MMS Award 2019901. Federico Echenique was supported by NSF awards SES 1558757 and CNS 1518941.

Role of the Funder/Sponsor

The funder did not play a role in any of the following: design and conduct of the study; collection, management, analysis, and interpretation of the data; preparation, review, or approval of the abstract; and decision to submit the abstract for presentation.

Acknowledgment

The authors gratefully acknowledge Tong Zhang, program co-chair of the International Conference on Machine Learning 2021 jointly with Marina Meila, for his contribution in designing the workflow, designing the reviewer questions, facilitating access to relevant summaries of anonymized data, and supporting the polling of the reviewers, as well as for many other helpful discussions and interactions; and Hanyu Zhang, workflow co-chair jointly with Xinwei Shen, for his contributions to the workflow and many helpful interactions.

Additional Information

Nihar B. Shah is a co–corresponding author.