2013 Abstracts

Sunday, September 8

Authorship

Too Much of a Good Thing? A Study of Prolific Authors

Elizabeth Wager,1 Sanjay Singhvi,2 Sabine Kleinert3

Objective

Authorship of unfeasibly large numbers of publications may indicate guest authorship, plagiarism, or fabrication (eg, the discredited anesthetist Fujii published 30 trials in 1 year). However, it is difficult to accurately assess an individual’s true publication history in databases such as MEDLINE using searches for author name alone. We therefore used a bespoke, semiautomated tool, which considers additional author characteristics, to identify authorship patterns for a descriptive study of prolific authors.

Design

Publications from a 5-year period (2008-2012) across 4 topics were selected from MEDLINE to provide a varied sample. The bespoke tool was used to disambiguate individual authors by analyzing characteristics such as affiliation, past publication history, and coauthorships, as well as author name. Focusing on 4 discrete topics also reduced the chance of double-counting publications from authors with similar names. Type of publication and authorship position were assessed for the most prolific authors in each topic.
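The abstract does not describe how the bespoke tool works internally. The sketch below only illustrates the general idea of combining name, affiliation, and coauthor evidence to decide whether two author records refer to the same person. `AuthorRecord`, `same_person_score`, and all weights are hypothetical and are not the System Analytic method.

```python
from __future__ import annotations
from dataclasses import dataclass, field

@dataclass
class AuthorRecord:
    """One author occurrence on one publication (hypothetical structure)."""
    name: str                                   # e.g. "Smith J"
    affiliation: str = ""
    coauthors: set[str] = field(default_factory=set)

def same_person_score(a: AuthorRecord, b: AuthorRecord) -> float:
    """Score (0-1) that two records belong to the same individual.

    Hypothetical weights: a matching name is required but is weak evidence
    on its own; a matching affiliation and shared coauthors add evidence.
    """
    if a.name.casefold() != b.name.casefold():
        return 0.0
    score = 0.4                                  # name match alone
    if a.affiliation and a.affiliation.casefold() == b.affiliation.casefold():
        score += 0.3                             # same affiliation
    shared = a.coauthors & b.coauthors
    score += min(0.3, 0.1 * len(shared))         # up to 0.3 for shared coauthors
    return round(score, 2)

r1 = AuthorRecord("Smith J", "Univ A", {"Lee K", "Park S"})
r2 = AuthorRecord("Smith J", "Univ A", {"Lee K", "Park S", "Chen Y"})
r3 = AuthorRecord("Smith J", "Univ B")
print(same_person_score(r1, r2))  # 0.9 -> probably the same person
print(same_person_score(r1, r3))  # 0.4 -> name match only
```

A name-only search would treat r1, r2, and r3 as one author; weighting the extra characteristics is what lets such a tool separate them.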

Results

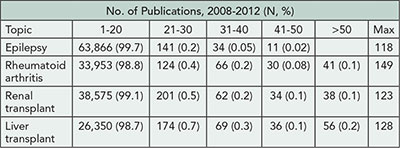

The number of publications per topic is shown in Table 1. Distinct publication patterns could be identified (eg, individuals who were often first author [max 56%] or last author [max 89%]). The maximum number of publications per year was 43 (for any type) and 15 (for trials). Of the 10 most prolific authors for each topic, 24/40 were listed as authors on at least 1 publication per 10 working days in a single year.
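To make the "1 publication per 10 working days" benchmark concrete, the sketch below converts it to an annual count. The figure of 250 working days per year is an assumption (the abstract does not define a working year), and the function name is hypothetical.

```python
WORKING_DAYS_PER_YEAR = 250   # assumption: ~50 weeks x 5 days; not stated in the abstract

def at_least_one_per_n_days(pubs_in_year: int, n_days: int = 10) -> bool:
    """True if an author averaged >= 1 publication per n_days working days in a year."""
    threshold = WORKING_DAYS_PER_YEAR / n_days    # 25.0 publications/year for n_days=10
    return pubs_in_year >= threshold

print(at_least_one_per_n_days(43))  # True  (maximum for any publication type)
print(at_least_one_per_n_days(15))  # False (maximum for trials)
```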

Table 1. Total Number of MEDLINE Publications per Individual for 2008-2012 for Selected Topics

Conclusions

Analytical software may be useful to identify prolific authors from public databases with greater accuracy than simple name searches. Although such findings always need careful interpretation, these techniques might be useful to journal editors and research institutions in cases of suspected misconduct or to screen for potential problems (eg, prolific last authors might be guest authors). When measuring productivity, institutions and funders should be alert not only to unproductive researchers but also to unfeasibly prolific ones.

1Sideview, Princes Risborough, UK, liz@sideview.demon.co.uk; 2System Analytic, London, UK; 3Lancet, London, UK

Conflict of Interest Disclosures

Sanjay Singhvi is a director of System Analytic Ltd, which provides expert identification/mapping services, the tools from which were used for this study. Elizabeth Wager has acted as a consultant to System Analytic—this work represents less than 1% of her total income. Sabine Kleinert reports no conflicts of interest.

Funding/Support

No external funding was obtained for this project. System Analytic provided the tools, analysis, and staff time. Elizabeth Wager is self-employed and received no payment for this work.

Deciding Authorship: Survey Findings From Clinical Investigators, Journal Editors, Publication Planners, and Medical Writers

Ana Marušić,1 Darko Hren,2 Ananya Bhattacharya,3 Matthew Cahill,4 Juli Clark,5 Maureen Garrity,6 Thomas Gesell,7 Susan Glasser,8 John Gonzalez,9 Samantha Gothelf,10 Carolyn Hustad,4 Mary-Margaret Lannon,11 Neil Lineberry,12 Bernadette Mansi,13 LaVerne Mooney,14 Teresa Pena15

Objective

Low awareness, variable interpretation, and inconsistent application of guidelines can lead to a lack of transparency when recognizing contributors in industry-sponsored clinical trial publications. We sought to identify how different groups who participate in the publication process determine authorship.

Design

Interviews with clinical investigators, journal editors, publication planners, and medical writers identified difficult-to-resolve authorship scenarios when applying ICMJE guidelines, such as authorship for significant patient recruitment or medical writing contribution. Seven scenarios were converted into a case-based online survey asking respondents to choose the appropriate recognition and to give the rationale for, and their confidence in, each decision. Respondents also indicated their awareness and use of authorship guidelines. A sample of at least 96 participants per group enabled estimates with a 10% margin of error for a population of 100,000. The online survey remained open until every group exceeded this sample size by at least 10%.
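The figure of 96 per group is consistent with the standard sample-size formula for a proportion, n0 = z²p(1 − p)/e², followed by a finite population correction. The sketch below assumes 95% confidence (z = 1.96) and the conservative p = 0.5; the abstract does not state either, and `sample_size` is a hypothetical name.

```python
import math

def sample_size(margin: float, population: int, z: float = 1.96, p: float = 0.5) -> int:
    """Sample size for estimating a proportion, with finite population correction.

    Assumptions: 95% confidence (z = 1.96) and p = 0.5 (maximum variance).
    """
    n0 = z**2 * p * (1 - p) / margin**2          # size for an infinite population
    n = n0 / (1 + (n0 - 1) / population)         # finite population correction
    return math.ceil(n)

n_min = sample_size(margin=0.10, population=100_000)
print(n_min)                      # 96
print(math.ceil(n_min * 1.10))    # 106: target once the 10% overshoot is added
```

The smallest group in the results (108 journal editors) clears the 106 target.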

Results

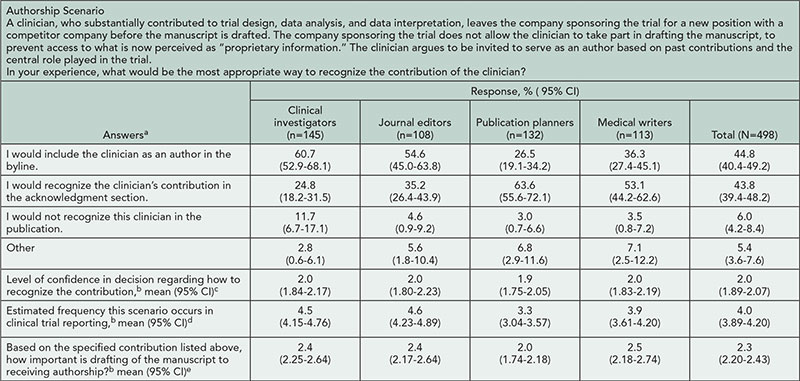

We analyzed 498 responses from a global audience of 145 clinical investigators, 132 publication planners, 113 medical writers, and 108 journal editors. Overall, the types of recognition chosen for each scenario varied both within and across respondent groups (see Table 2 for example case results). Despite acknowledged awareness and use of authorship criteria, respondents often adjudicated cases inconsistently with ICMJE guidelines. Clinical investigators provided the most variable responses and had the lowest level of ICMJE awareness (49% [95% CI=42.9-59.4] vs 92% [95% CI=88.5-94.2] for other groups) and use (28% [95% CI=20.5-34.6] vs 61% [95% CI=55.7-65.5] for other groups). Respondents were confident in their answers (mean score, 2.0 [95% CI=1.5-2.5] on a scale from 1, extremely confident, to 6, not at all confident), regardless of their adjudication. Based on roundtable discussions with 15 editors and qualitative analysis of respondents’ answers, the Medical Publishing Insights and Practices Initiative (MPIP) developed supplemental guidance aimed at helping authors set common rules for authorship early in a trial and document all trial contributions to increase transparency.

Table 2. Opinions of Respondents About an Example Authorship Scenario

aStatistically significant difference among 4 groups in frequencies of answers (χ²(9)=64.28, P<.001).

b1 = extremely confident/frequent/important, 6 = not at all confident/frequent/important, respectively.

cNo statistically significant difference among the groups (one-way ANOVA, F(3,494)=0.39, P=.760).

dStatistically significant difference among the groups (one-way ANOVA, F(3,494)=15.05, P<.001); Tukey post hoc test: all pairwise comparisons are statistically significant (P≤.046) except for clinical investigators vs journal editors (P=.956).

eStatistically significant difference among the groups (one-way ANOVA, F(3,494)=4.54, P=.004); Tukey post hoc test: publication planners vs the other 3 groups (P≤.038).
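The degrees of freedom in these footnotes follow from the study size: 498 respondents in 4 groups give F(3,494) for a one-way ANOVA, and 9 df for the chi-square test corresponds to a 4 × 4 table. The number of answer categories (4) is inferred from the 9 df and is an assumption; the function names are hypothetical.

```python
def anova_df(n_total: int, k_groups: int) -> tuple[int, int]:
    """Between-group and within-group degrees of freedom for a one-way ANOVA."""
    return k_groups - 1, n_total - k_groups

def chi2_df(n_rows: int, n_cols: int) -> int:
    """Degrees of freedom for a chi-square test of independence on an r x c table."""
    return (n_rows - 1) * (n_cols - 1)

print(anova_df(n_total=498, k_groups=4))  # (3, 494) -> F(3,494)
print(chi2_df(n_rows=4, n_cols=4))        # 9 -> chi-square with 9 df
```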

Conclusions

Groups that participate in the publishing process had differing opinions on adjudication of challenging real-world authorship scenarios. Our proposed supplemental guidance is designed to provide a framework to improve transparency when recognizing contributors to all clinical trial publications.

1Department of Research in Biomedicine and Health, University of Split School of Medicine, Split, Croatia, ana.marusic@mefst.hr; 2University of Split Faculty of Philosophy, Split, Croatia; 3Bristol-Myers Squibb, Princeton, NJ, USA; 4Merck, North Wales, PA, USA; 5Amgen, Thousand Oaks, CA, USA; 6Astellas, Northbrook, IL, USA; 7Envision Pharma and International Society for Medical Publication Professionals, Briarcliff Manor, NY, USA; 8Janssen R&D, LLC, Raritan, NJ, USA; 9AstraZeneca, Alderley Park, UK; 10Bristol-Myers Squibb, Princeton, NJ, USA; 11Takeda, Deerfield, IL, USA; 12Leerink Swann Consulting, Boston, MA, USA; 13GlaxoSmithKline, King of Prussia, PA, USA; 14Pfizer, New York, NY, USA; 15AstraZeneca, Wilmington, DE, USA

Conflict of Interest Disclosures

Ana Marušić and Darko Hren were supported by a grant from Medical Publishing Insights and Practices (MPIP) Initiative. All others are members of MPIP. Neil Lineberry is an external consultant employed by Leerink Swann Consulting and was paid by the MPIP Initiative for his work. Thomas Gesell serves on the MPIP Initiative Steering Committee as a representative of the International Society for Medical Publication Professionals, neither of which provides funding support to the MPIP Initiative. The other members of the MPIP Initiative Steering Committee are employees of the companies sponsoring the MPIP Initiative, as shown by their individual affiliations.

Funding/Support

Ana Marušić and Darko Hren received funding for the research project from the MPIP Initiative.

Multiauthorship Articles and Subsequent Citations: Does More Yield More?

Joseph Wislar,1 Marie McVeigh,2 Annette Flanagin,1 Mary Lange,2 Howard Bauchner1

Objective

To assess if articles published with large numbers of authors are more likely to be cited in subsequent works than articles with fewer authors.

Design

Research and review articles published in 2010 in the top 3 general medical journals (JAMA, Lancet, New England Journal of Medicine) and the top 3 general science journals (Nature, Proceedings of the National Academy of Sciences, Science), ranked by Impact Factor, were extracted. The number of authors and other article characteristics (eg, article type and topic for the medical journal articles) were recorded. Citations per article were recorded through March 1, 2013.

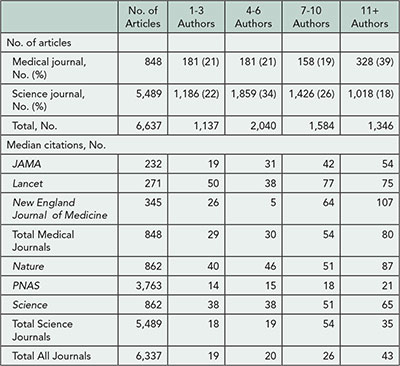

Results

A total of 6,337 research and review articles were published in the 6 journals in 2010 (848 in medical journals and 5,489 in science journals); the number of authors per article ranged from 1 to 659. Articles were divided into 4 quartiles by number of authors: 1-3, 4-6, 7-10, and 11 or more (Table 3). There was a median of 8 (IQR: 4-14) authors per medical journal article and 6 (IQR: 4-9) per science journal article. A larger proportion of medical journal articles than science journal articles had 11 or more authors (39% vs 18%). Medical journal articles were cited a median of 50 (IQR: 25-100) times and science journal articles a median of 22 (IQR: 12-42) times during the follow-up period. The median number of citations was highest for articles in the highest quartile of number of authors: 80 vs 29 (P<.001) for medical journals and 35 vs 18 (P<.001) for science journals. Article types among medical journals included 290 observational studies, 259 randomized trials, 176 reviews, 89 case reports, and 34 meta-analyses. The top topics among the medical journal articles were infectious disease (14.7%), cardiovascular disease (12.5%), and oncology (11.3%). Preliminary analyses of article type and topic in medical journal articles did not reveal a consistent pattern for article type but did show a linear increase in numbers of authors for articles in cardiovascular disease, critical care medicine, obstetrics/gynecology, and oncology and inconsistent patterns for other topics.
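The grouping described above can be sketched as binning each article by author count and taking the median citations per bin. The bin edges come from the abstract; the function names and the example data are hypothetical.

```python
from statistics import median

# Author-count bins used in the abstract: 1-3, 4-6, 7-10, 11+
BINS = [(1, 3, "1-3"), (4, 6, "4-6"), (7, 10, "7-10"), (11, None, "11+")]

def author_bin(n_authors: int) -> str:
    """Return the label of the bin containing n_authors."""
    for lo, hi, label in BINS:
        if n_authors >= lo and (hi is None or n_authors <= hi):
            return label
    raise ValueError(f"invalid author count: {n_authors}")

def median_citations_by_bin(articles):
    """articles: iterable of (n_authors, citations) pairs."""
    groups = {}
    for n_authors, cites in articles:
        groups.setdefault(author_bin(n_authors), []).append(cites)
    return {label: median(c) for label, c in groups.items()}

# Hypothetical articles, not data from the study.
example = [(2, 10), (3, 20), (5, 25), (8, 40), (12, 70), (30, 90)]
print(median_citations_by_bin(example))
# {'1-3': 15.0, '4-6': 25, '7-10': 40, '11+': 80.0}
```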

Table 3. Number of Authors by Quartile and Median Number of Citations, by Journal

Conclusion

Articles with 11 or more authors are cited more often than articles with fewer authors; this does not appear to depend on article type, but it may vary by article topic.

1JAMA, joseph.wislar@jamanetwork.org, Chicago, IL, USA; 2Thomson Reuters, Philadelphia, PA, USA

Conflict of Interest Disclosures

Joseph Wislar, Annette Flanagin, and Howard Bauchner are employed as editors with JAMA, one of the journals included in this study. Annette Flanagin, coordinator of the Peer Review Congress, had no role in the review of or decision to accept this abstract for presentation.

Funding/Support

There was no external funding for this study.

Citations

Coercive Citation and the Impact Factor in the Business Category of the Web of Science

Tobias Opthof,1,2 Loet Leydesdorff,3 Ruben Coronel1

Objective

Coercive citation is not unusual in journals within the business category of the Web of Science. Coercive citation is defined as pressure by the editor of a journal on an author to include references to the editor’s journal either before the start of the review process or after acceptance of the manuscript. The quantitative effects of this practice on Impact Factors and on the degree of journal self-citation have thus far not been assessed.

Design

We have quantified bibliographic parameters of the top 50 journals in the category Business of the Web of Science. Of these, 26 had previously been shown to exert citation coercion by Wilhite and Fong. We have compared these 26 journals with the other 24 journals.

Results

In 2010, the mean Impact Factors of coercive and noncoercive journals were 2.665 ± 0.265 (mean ± SEM) vs 2.227 ± 0.134 (P=.141) including journal self-citations and 2.110 ± 0.279 vs 1.635 ± 0.131 (P=.123) excluding journal self-citations. Compared with noncoercive journals, coercive journals had a higher percentage of journal self-citations to all years (9.5% vs 6.7%, P<.25), a higher percentage of journal self-citations to the years relevant for the Impact Factor (based on the 2 preceding years; 19.5% vs 13.5%, P<.05), and a higher percentage of journal self-citations to the earlier years (8.0% vs 5.2%, P<.01). Within the group of coercive journals, the degree of coercive citation was positively and significantly correlated with the percentage of journal self-citations to the preceding 2 years relevant for the Impact Factor (r=.513, n=26, P<.005), but not to other years.
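The distinction between self-citations to the 2 Impact Factor years and to other years matters because the conventional 2-year Impact Factor counts only citations in year Y to items published in years Y-1 and Y-2, divided by the citable items from those 2 years. The sketch below uses hypothetical journal numbers to show how self-citations to those 2 years feed directly into the numerator.

```python
def impact_factor(citations: int, self_citations: int, citable_items: int,
                  exclude_self: bool = False) -> float:
    """2-year Impact Factor for year Y.

    citations:      citations received in year Y to items published in Y-1 and Y-2
    self_citations: the subset of those citations made by the journal itself
    citable_items:  citable items the journal published in Y-1 and Y-2
    """
    numerator = citations - self_citations if exclude_self else citations
    return numerator / citable_items

# Hypothetical journal: 600 citations in 2010 to its 2008-2009 items, 120 of them self-citations.
print(impact_factor(600, 120, 200))                     # 3.0
print(impact_factor(600, 120, 200, exclude_self=True))  # 2.4
print(f"{120 / 600:.1%} self-citations to IF years")    # 20.0%
```

Self-citations to earlier years would not change either value, which is why the abstract reports the two categories separately.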

Conclusion

In business journals, coercive citation is effective for the coercing editors, in the sense that their journals show a higher percentage of journal self-citations to the years relevant for the conventional Impact Factor.

1Department of Experimental and Clinical Cardiology, Academic Medical Center, Amsterdam, the Netherlands, t.opthof@inter.nl.net; 2Department of Medical Physiology, University Medical Center Utrecht, the Netherlands; 3Amsterdam School of Communication Research (ASCoR), University of Amsterdam, the Netherlands

Conflict of Interest Disclosures

None reported.

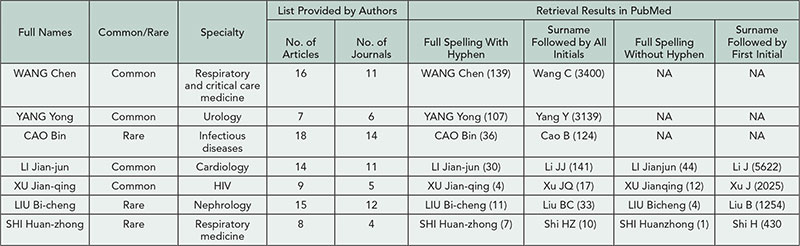

Do Views Online Drive Citations?

Sushrut Jangi,1,2 Jeffrey A. Eddowes,1 Jennifer M. Zeis,1 Jessica R. Ippolito,1 Edward W. Campion1

Objective

Biomedical journals have a 2-fold aim: to contribute literature that is highly cited and to present articles that are read widely. The first aim is measured by Impact Factor. The second can be measured with metrics such as “Most Viewed” online lists. We examined whether these aims are related: are the most-viewed articles subsequently the most cited?

Design

Citations for all 226 original research articles published in 2010 in the New England Journal of Medicine were analyzed through the end of 2012. Online usage data were analyzed for 2 time periods: (1) the first year after publication and (2) from publication to the end of 2012. Linear regression was used to relate number of views to citations for each of these 2 periods.

Results

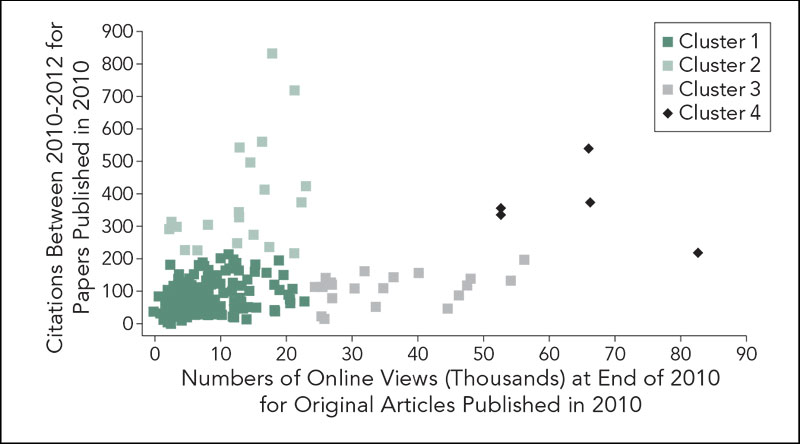

When the number of online views through the end of 2010 was compared with citations from 2010 through 2012, the correlation between online views and citations was near 0 (linear regression slope of .27, R2=.0015). A robust linear regression could not be fit because these research articles segregated into 4 clusters with distinct characteristics (Figure 1). When the number of online views over the first 2 years after publication was compared with citations over the same period, the association between views and citations was stronger (linear regression slope of 3, R2=.3). Clusters were defined as follows: cluster 1, articles within 1 SD of the mean for both citations and views; cluster 2, articles beyond 1 SD for citations but with typical views; cluster 3, articles beyond 1 SD for online views but with typical citations; and cluster 4, articles beyond 1 SD for both views and citations. Of the 226 original research articles published in 2010, 178 fell into the core cluster 1, 20 into cluster 2, 21 into cluster 3, and 5 into cluster 4. Each cluster had distinct traits: cluster 2 papers were primarily randomized controlled trials in oncology or other specialized fields that were rarely reported by the media; cluster 3 papers concerned epidemiology, public health, and general medical practice and were moderately reported by the media; and cluster 4 papers were the most highly reported by the media.
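The cluster definitions amount to a simple thresholding rule on views and citations. A minimal sketch follows, assuming that "beyond 1 SD" means more than 1 sample SD above the mean (the abstract does not say whether the mean or some other center was used). `assign_clusters` and the example data are hypothetical.

```python
from statistics import mean, stdev

def assign_clusters(views, citations):
    """Assign each article to cluster 1-4 with a 1-SD-above-the-mean rule.

    Assumption: "beyond 1 SD" is read as more than 1 sample SD above the mean.
    Cluster 1: typical on both; 2: high citations only; 3: high views only; 4: high on both.
    """
    view_cut = mean(views) + stdev(views)
    cite_cut = mean(citations) + stdev(citations)
    clusters = []
    for v, c in zip(views, citations):
        high_v, high_c = v > view_cut, c > cite_cut
        if high_v and high_c:
            clusters.append(4)
        elif high_c:
            clusters.append(2)
        elif high_v:
            clusters.append(3)
        else:
            clusters.append(1)
    return clusters

# Hypothetical articles, not NEJM data.
views = [10, 10, 10, 10, 10, 10, 100, 10, 100]
cites = [5, 5, 5, 5, 5, 5, 5, 50, 50]
print(assign_clusters(views, cites))  # [1, 1, 1, 1, 1, 1, 3, 2, 4]
```

Because most articles sit in cluster 1 while a few lie far out on only one axis, a single straight line through all points explains very little variance, consistent with the near-zero R2 reported.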

Figure 1. Clustering of Original Research Articles

Conclusions

Original research papers that are highly viewed within the first year after publication do not robustly predict citations over the following 2 years. Instead, papers that are highly cited become increasingly viewed over time. Furthermore, after the first year of publication, most original research falls into 4 distinct clusters of views and citations, reflecting the varying aims of biomedical publication.

1New England Journal of Medicine, Boston, MA, USA, sjangi@nejm.org; 2Beth Israel Deaconess Medical Center, Boston, MA, USA

Conflict of Interest Disclosures

None reported.

Uncited or Poorly Cited Articles in the Cardiovascular Literature

Ruizhi Shi,1 Isuru Ranasinghe,1 Aakriti Gupta,1 Behnood Bikdeli,1 Ruijun Chen,1 Natdanai Tee Punnanithinont,1 Julianna F. Lampropulos,1 Joseph S. Ross,1,2 Harlan M. Krumholz1,2

Objective

In an efficient system, virtually all studies worthy of publication would be cited in subsequent papers. We sought to determine the percentage of uncited or poorly cited articles in the cardiovascular literature, the factors associated with citations, and the trend in citations.

Design

We identified cardiovascular journals indexed in Scopus with 20 or more publications. We determined 5-year citations of each original article published in 2006 and used multivariable logistic regression to assess the association of article and journal characteristics with the likelihood of citation. To evaluate trends, we obtained similar cohorts for 2004, 2006, and 2008 with 4-year citations of each original article and compared the percentages of uncited articles across these years.

Results

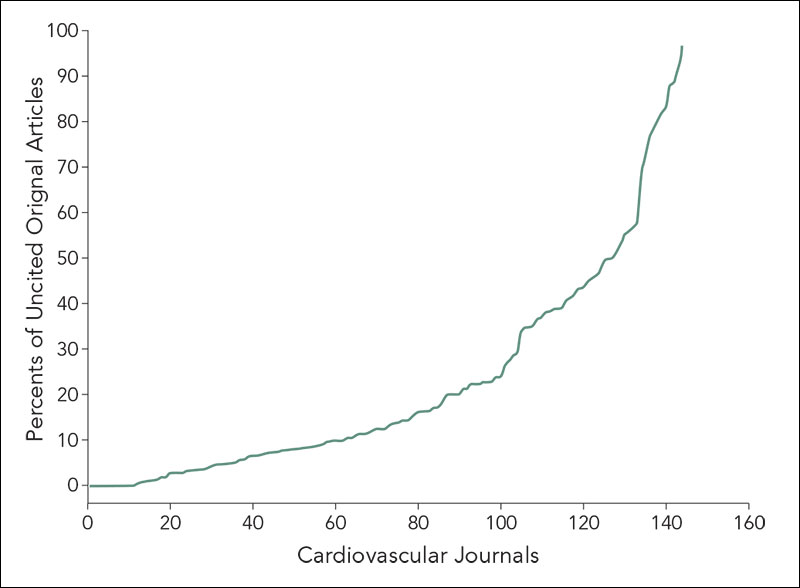

Among 144 cardiovascular journals, we identified a total of 18,411 original articles published in 2006. In the following 5 years, the median number of citations was 6 (IQR: 2-16). Of all articles, 2,756 (15.0%) were uncited and 6,122 (33.3%) had 1 to 5 citations. English language (OR 4.3, 95% CI 3.6-5.0), journal Impact Factor >4 (OR 5.6, 95% CI 4.6-6.9, compared with journal Impact Factor <1.0), each additional author (OR 1.1, 95% CI 1.1-1.1), and 3 or more author key words (OR 1.4, 95% CI 1.2-1.6) increased the likelihood of being cited. Across the 144 journals, the percentage of uncited papers had an interquartile range of 7% to 40% (Figure 2). From 2004 to 2008, the overall volume of published articles increased (2004, n=13,880; 2006, n=18,411; 2008, n=19,184), as did the volume of articles uncited 4 years after publication (2004, n=2,393; 2006, n=3,134; 2008, n=3,337); however, the percentages of uncited papers were not statistically different (17.2% vs 17.0% vs 17.4%, P for trend=.4).
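The percentages above can be recomputed from the reported counts, which also shows where "nearly half" in the conclusion comes from: uncited plus 1 to 5 citations is 48.2% of the 2006 cohort. The helper name `pct` is hypothetical.

```python
def pct(part: int, whole: int) -> float:
    """Percentage rounded to 1 decimal place."""
    return round(100 * part / whole, 1)

total_2006 = 18_411
uncited_5y, one_to_five = 2_756, 6_122
print(pct(uncited_5y, total_2006))                # 15.0
print(pct(one_to_five, total_2006))               # 33.3
print(pct(uncited_5y + one_to_five, total_2006))  # 48.2 -> "nearly half"

# 4-year uncited counts and cohort sizes used for the trend analysis
cohorts = {2004: (2_393, 13_880), 2006: (3_134, 18_411), 2008: (3_337, 19_184)}
for year, (uncited_4y, total) in cohorts.items():
    print(year, pct(uncited_4y, total))           # 17.2, 17.0, 17.4
```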

Figure 2. Distribution of Percents of Uncited Original Articles

Conclusion

Nearly half of the cardiovascular literature remains uncited or poorly cited after 5 years, and these articles are particularly concentrated in certain journals, suggesting substantial waste in some combination of the funding, pursuit, publication, or dissemination of cardiovascular science.

1Yale University Center for Outcomes Research and Evaluation, New Haven, CT, USA, harlan.krumholz@yale.edu; 2Yale University School of Medicine, New Haven, CT, USA

Conflict of Interest Disclosures

Joseph Ross reports that he is a member of a scientific advisory board for FAIR Health, Inc.

Funding/Support

Harlan Krumholz and Joseph Ross receive support from the Centers for Medicare & Medicaid Services to develop and maintain performance measures that are used for public reporting. Harlan Krumholz is supported by a National Heart, Lung, and Blood Institute Cardiovascular Outcomes Center Award (1U01HL105270-03). Joseph Ross is supported by the National Institute on Aging (K08 AG032886) and by the American Federation for Aging Research through the Paul B. Beeson Career Development Award Program.

Peer Review

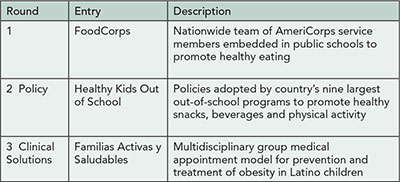

Engaging Patients and Stakeholders in Scientific Peer Review of Research Applications: Lessons From PCORI’s First Cycle of Funding

Rachael Fleurence,1 Joe Selby,1 Laura P. Forsythe,1 Anne Beal,1 Martin Duenas,1 Lori Frank,1 John P. A. Ioannidis,2 Michael S. Lauer3

Objective

The mission of the Patient-Centered Outcomes Research Institute (PCORI) is to fund research that helps people make informed health care decisions. Engagement of patients and stakeholders is essential in all aspects of our work, including research application review. PCORI’s first round of peer review was evaluated to measure the impact of different reviewer perspectives on merit review scores.

Design

In 2012 PCORI initiated a 2-phase review. In phase 1, 3 scientists reviewed each application online using 8 criteria (Table 4); in phase 2, the top one-third of applications were reviewed by 2 additional scientists, 1 patient, and 1 stakeholder (eg, clinicians, providers, manufacturers). Scientists provided overall scores based on the 8 criteria; patients/stakeholders focused on criteria 2, 4, and 7. Proposals were scored before an in-person discussion, and final scores were assigned after discussion. We conducted (1) correlation and Bland-Altman analyses to examine agreement between scientific and patient/stakeholder reviewers, (2) random forest analyses to identify unique contributors to final scores (a lower depth indicates a stronger predictor), and (3) postreview focus groups.
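A Bland-Altman analysis summarizes agreement between 2 raters as the mean paired difference (systematic bias) and 95% limits of agreement (bias ± 1.96 SD of the differences). The sketch below uses hypothetical scores; the abstract does not give PCORI's scoring scale, and `bland_altman` is a hypothetical name.

```python
from statistics import mean, stdev

def bland_altman(a, b):
    """Bias and 95% limits of agreement between paired ratings a and b.

    Bias is the mean of the differences (a - b); the limits are
    bias +/- 1.96 x SD of the differences.
    """
    diffs = [x - y for x, y in zip(a, b)]
    bias = mean(diffs)
    sd = stdev(diffs)
    return bias, bias - 1.96 * sd, bias + 1.96 * sd

# Hypothetical prediscussion scores for the same 6 applications.
scientist = [3, 4, 2, 5, 3, 6]
patient_stakeholder = [4, 3, 2, 6, 2, 6]
bias, lower, upper = bland_altman(scientist, patient_stakeholder)
print(f"bias={bias:.2f}, limits of agreement {lower:.2f} to {upper:.2f}")
```

A bias near 0 with wide limits would match the pattern reported for the prediscussion scores: no systematic bias, but limited agreement on individual applications.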

Results

In phase 2, there was limited agreement on overall scores between scientists and patients/stakeholders prediscussion (r=.18, P=.02), but no observable systematic bias; agreement was stronger postdiscussion (r=.90, P<.001). Random forest analyses indicated that the strongest predictors of scientists’ final scores were patient/stakeholder final scores and scientists’ prediscussion scores (depth=1.033 and 1.861, respectively). The strongest predictors of patient/stakeholder final scores were scientists’ final scores, scientists’ prediscussion scores, and patient/stakeholder prediscussion scores (depth=1.084, 2.423, and 3.080, respectively). Twenty-five contracts were awarded after the 2-phase review; only 13 of these had scored among the top 25 in phase 1. Postreview focus group themes included a collegial learning experience, a steep learning curve around PCORI’s new criteria, scientists’ appreciation of the perspectives offered by patients/stakeholders, scientists’ concern about nonscientists’ level of technical expertise, and patients’/stakeholders’ experiences of being considered less authoritative than scientists.

Table 4. PCORI Merit Review Criteria for the Inaugural Funding Cycle

Conclusions

The projects funded after the 2-phase merit review differed from those that would have been selected by 1 phase of scientific review alone. Patient/stakeholder reviewers may have been more likely to incorporate insights from scientists than vice versa. Further research is being conducted on the available data to deepen our understanding of the process.

1Patient-Centered Outcomes Research Institute (PCORI), Washington, DC, USA, rfleurence@pcori.org; 2Stanford University School of Medicine, Stanford, CA, USA; 3National Heart, Lung, and Blood Institute, Bethesda, MD, USA

Conflict of Interest Disclosures

None reported.

Editorial Triage: Potential Impact

Deborah Levine,1 Alexander Bankier,1 Mark Schweitzer,2 Albert de Roos,3 David C. Madoff,4 David Kallmes,5 Douglas S. Katz,6 Elkan Halpern,7 Herbert Y. Kressel8

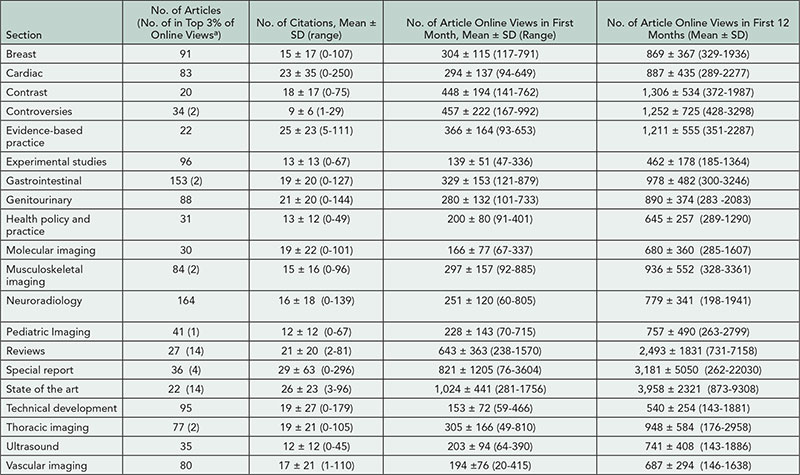

Objective

Increasing manuscript submissions threaten to overwhelm a biomedical journal’s ability to process manuscripts and overburden reviewers with manuscripts that have little chance of acceptance. Our purpose was to evaluate editorial triage.

Design

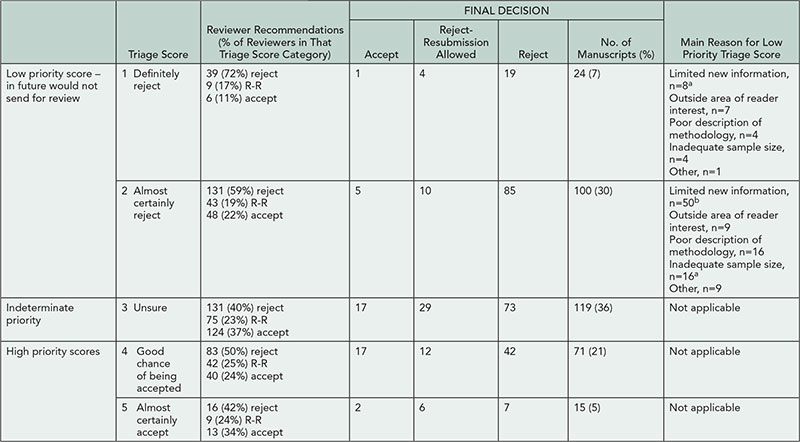

In a prospective study of original research manuscripts submitted to a single biomedical journal during an 8-week period beginning in July 2012, 329 articles were processed with our normal procedures as well as with a parallel “background triage mode.” The editor in chief or a deputy editor (EIC/DE) rated on a 5-point scale the likelihood of an article being accepted for publication (scores of 1, “definitely reject,” and 2, “almost certainly reject,” were considered “low priority” for publication). Editors noted reasons for low priority ratings. Manuscripts were sent for peer review in the typical fashion, with reviewers chosen by noneditor office staff. There were typically 4 to 8 weeks between initial triage and final decisions (based on standard peer review). The EIC who made the final decision was unaware of triage scores given by DEs; however, for some articles the EIC made both the triage and the final decision. Spearman correlation was used to correlate final decisions with triage scores and with reviewer mean scores.
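Triage scores on a 5-point scale produce many ties, so a Spearman correlation is typically computed as the Pearson correlation of average ranks. The stdlib sketch below illustrates that approach; the function names and the numeric coding of final decisions (1=reject ... 3=accept) are hypothetical, since the abstract does not say how decisions were coded. On Python 3.12 or later, `statistics.correlation(x, y, method="ranked")` should give an equivalent result.

```python
def average_ranks(values):
    """Rank values 1..n; tied values share the average of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with values[order[i]].
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg_rank = (i + j) / 2 + 1          # convert 0-based positions to 1-based ranks
        for k in range(i, j + 1):
            ranks[order[k]] = avg_rank
        i = j + 1
    return ranks

def spearman(x, y):
    """Spearman's rho: Pearson correlation of the average ranks of x and y."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    rx, ry = average_ranks(x), average_ranks(y)
    mx, my = sum(rx) / len(rx), sum(ry) / len(ry)
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    var_x = sum((a - mx) ** 2 for a in rx)
    var_y = sum((b - my) ** 2 for b in ry)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation undefined for constant input")
    return cov / (var_x * var_y) ** 0.5

# Hypothetical data: triage score (1-5) and final decision coded 1=reject ... 3=accept.
triage = [1, 2, 2, 3, 4, 5, 5]
final = [1, 1, 2, 1, 3, 2, 3]
print(round(spearman(triage, final), 2))
```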

Results

Triage scores, reviewer scores, and final outcomes are detailed in Table 5. Of 124 manuscripts scored as low priority, 6 (4.8%, CI 1.8%-10.2%) were ultimately accepted for publication (P<.0001, correlation .26). “Limited new information” was the primary reason for a low priority score for 57/124 (46%) manuscripts, and this reason had been given for 5 of the low priority manuscripts that were ultimately accepted. Individual EIC/DE triage scores were weakly to moderately correlated with final decision (r ranging from −.1 to .45; overall EIC/DE group correlation, .24). Reviewer scores were moderately correlated with final decision (r=.62).
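The abstract does not say how the CI for 6/124 was computed. An exact (Clopper-Pearson) interval is one common choice and is assumed in the stdlib sketch below; if the authors used it, the result should land close to the reported 1.8%-10.2%. All function names are hypothetical.

```python
from math import comb

def binom_cdf(k: int, n: int, p: float) -> float:
    """P(X <= k) for X ~ Binomial(n, p)."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k + 1))

def _bisect(g, target: float, increasing: bool, tol: float = 1e-10) -> float:
    """Find p in [0, 1] with g(p) == target for a monotone function g."""
    lo, hi = 0.0, 1.0
    while hi - lo > tol:
        mid = (lo + hi) / 2
        # Move toward the side where g crosses the target.
        if (g(mid) < target) == increasing:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2

def clopper_pearson(x: int, n: int, alpha: float = 0.05) -> tuple[float, float]:
    """Exact (Clopper-Pearson) confidence interval for a binomial proportion x/n."""
    # Lower bound: P(X >= x | p) = alpha/2, which increases with p.
    lower = 0.0 if x == 0 else _bisect(lambda p: 1 - binom_cdf(x - 1, n, p), alpha / 2, True)
    # Upper bound: P(X <= x | p) = alpha/2, which decreases with p.
    upper = 1.0 if x == n else _bisect(lambda p: binom_cdf(x, n, p), alpha / 2, False)
    return lower, upper

lo, hi = clopper_pearson(6, 124)
print(f"6/124 = {6/124:.1%}, 95% CI {lo:.1%}-{hi:.1%}")
```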

Table 5. Triage Scores and Final Decisions

R-R indicates reject with resubmission allowed.

a1 ultimately accepted.

b4 ultimately accepted.

Conclusions

Editorial peer review triage identified 38% (124/329) of submitted manuscripts as low priority, with lack of new information representing the most common reason for such scoring. Of submitted papers, 1.8% (6/329) would have been “erroneously” triaged, that is, manuscripts potentially worthy of acceptance but triaged as low priority. In our journal, editorial triage represents an efficient method of diminishing reviewer burden without a substantial loss of quality papers.

1Beth Israel Deaconess Medical Center, Department of Radiology, Boston, MA, USA, dlevine@rsna.org; 2The Ottawa Hospital, Department of Radiology, Ottawa, ON, Canada; 3Leiden University Medical Center, Department of Radiology, Leiden, South-Holland, the Netherlands; 4New York-Presbyterian Hospital/Weill Cornell Medical Center, Division of Interventional Radiology, New York, NY, USA; 5Mayo Clinic, Department of Radiology, Rochester, MN, USA; 6Winthrop University Hospital, Department of Radiology, Mineola, NY, USA; 7Massachusetts General Hospital, Institute for Technology Assessment, Boston, MA, USA; 8Radiological Society of North America, Radiology Editorial Office, Boston, MA, USA

Conflict of Interest Disclosures

None of the authors report any disclosures that pertain to the content of this abstract. Other disclosures that do not pertain to this research include royalties from Medrad/Bayer-Schering for endorectal coil (Herbert Kressel); consultant for Spiration, Olympus (Alexander Bankier); consultant for Hologic, expert testimony for Ameritox (Elkan Halpern).

Authors’ Assessment of the Impact and Value of Statistical Review in a General Medical Journal

Catharine Stack,1,2 John Cornell,3 Steven Goodman,4 Michael Griswold,5 Eliseo Guallar,6 Christine Laine,1,2 Russell Localio,7 Alicia Ludwig,2 Anne Meibohm,1,2 Cynthia Mulrow,1,2 Mary Beth Schaeffer,1,2 Darren Taichman,1,2 Arlene Weissman2

Objective

Statistical methods for clinical research are complex, and statistical review procedures vary across journals. We sought authors’ views about the impact of statistical review on the quality of their articles at one general medical journal.

Design

Corresponding authors of all articles published in Annals of Internal Medicine in 2012 that underwent statistical review received an online survey. Surveys were anonymous, and authors were informed that individual responses would not be linked to their papers. Per standard procedures, all provisional acceptances and revisions received statistical review. We asked authors about the amount of effort needed to respond to the statistical review, the difficulty in securing the necessary statistical resources, and the degree to which statistical review affected the quality of specific sections and of the published article overall. Authors of rejected articles were not surveyed because rejected papers rarely receive full statistical review.

Results

The online survey was completed by 74 of 94 (79%) corresponding authors. Response rates varied by study design (90% randomized trials, 83% cohort studies, 74% systematic reviews) and number of revisions (90% 3 revisions, 80% 2 revisions, 65% 1 revision). Published studies included reports of original research (73%), systematic reviews/meta-analyses (23%), and decision analyses (4%). Of the papers, 21%, 49%, and 29% required 1, 2, and 3 or more revisions, respectively. Of the authors, 61% reported a moderate or large increase in the overall quality of the paper as a result of the statistical review process; 61% and 59% noted improvements to the statistical methods and results sections, respectively; and 19% reported improvements to the conclusions section. Sixty-four percent of authors indicated that considerable effort was required to respond to the statistical editor’s comments. A similar proportion (65%) reported that the effort required was worth the improved quality. Thirty-two percent of authors reported having some difficulty in securing the statistical support needed to respond to the statistical editor’s comments, and 5% reported having a lot of difficulty.

Conclusions

The majority of authors whose papers received statistical review reported that the statistical review process improved their articles. Most authors reported that the effort required to respond to the statistical reviewer’s comments was considerable but worthwhile.

1Annals of Internal Medicine, Philadelphia, PA, USA, cstack@acponline.org; 2American College of Physicians, Philadelphia, PA, USA; 3University of Texas Health Science Center, San Antonio, TX, USA; 4Stanford University, Stanford, CA, USA; 5University of Mississippi, Jackson, MS, USA; 6Johns Hopkins University, Baltimore, MD, USA; 7University of Pennsylvania, Philadelphia, PA, USA

Conflict of Interest Disclosures

None reported.

Funding/Support

No external funding was provided for this study. Contributions of staff time and resources came from Annals of Internal Medicine.

Mentored Peer Review of Standardized Manuscripts as an Educational Tool

Victoria S. S. Wong,1 Roy E. Strowd III,2 Rebeca Aragón-García,3 Mitchell S. V. Elkind3

Objective

To determine whether mentored peer review of standardized scientific manuscripts with introduced errors is a feasible and effective educational tool for teaching neurology residents the fundamental principles of research methodology and peer review.

Design

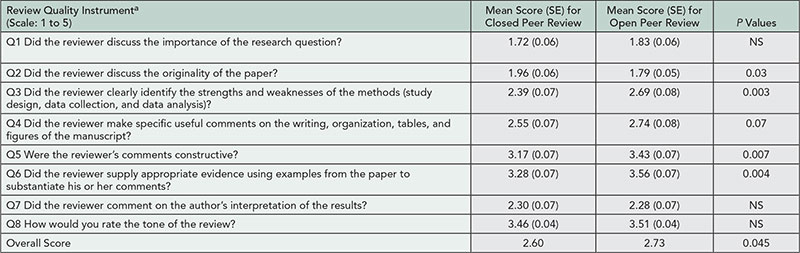

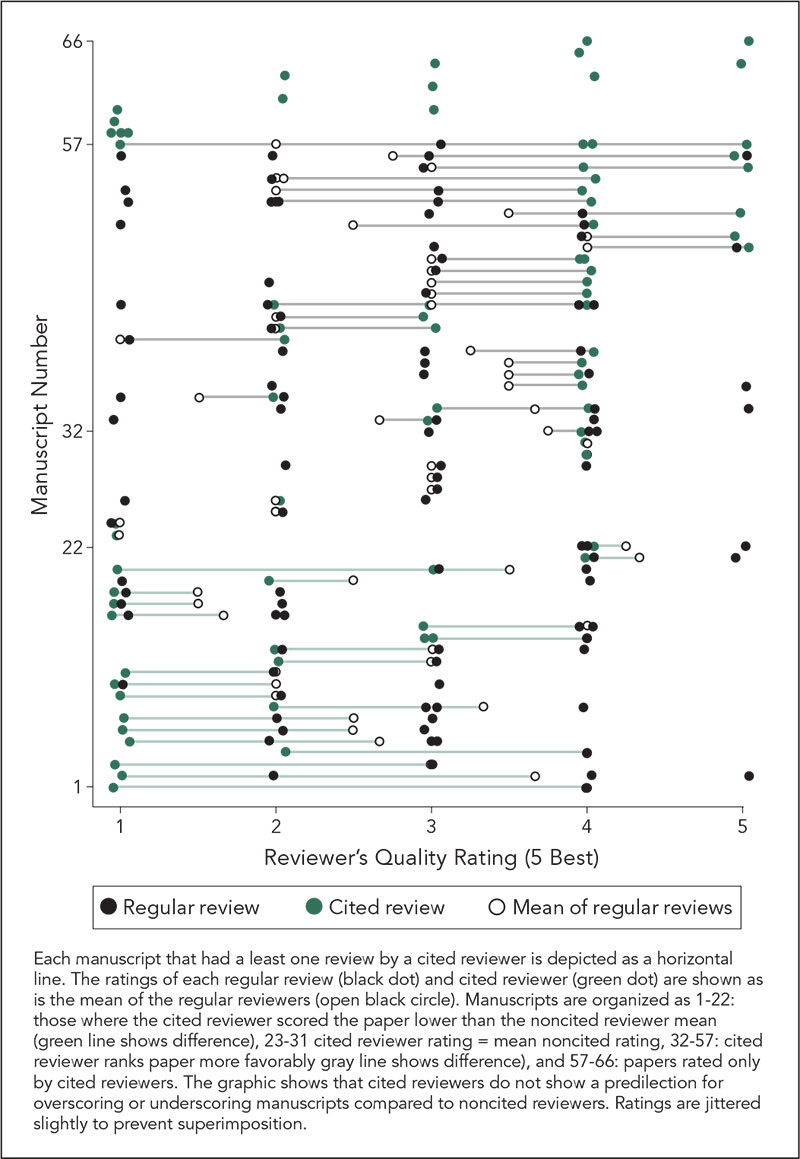

A partially blinded, randomized, controlled multicenter pilot study was designed. Neurology residents (PGY-3 and PGY-4) were recruited from 9 sites. Standardized manuscripts with introduced errors were created and distributed at 2-month intervals: a baseline manuscript, 3 formative manuscripts, and a final (postintervention) manuscript. All residents were asked to peer review these manuscripts. Residents were randomized at enrollment to receive or not receive faculty mentoring on appropriate peer review technique and manuscript assessment after each review. Pretests and posttests were administered to determine improvement in knowledge of research methodology before and after peer reviews. The Review Quality Instrument (RQI), a validated, objective measure to assess quality of peer reviews, was used to evaluate baseline and final reviews blinded to assigned group.

Results

Seventy-eight neurology residents were enrolled (mean age, 30.8 ± 2.6 years; 39 [50%] male; mean duration of 3.4 years [range, 2-10] since medical school graduation). Mean pretest score was 13.2 ± 2.7 correct of 20 questions. Sixty-four residents (82% of enrolled; 30 nonmentored, 34 mentored) returned a review of the first manuscript, 49 (77% of active participants) returned the second, 35 (55%) returned the third, 28 (47%) returned the fourth, and 45 (71%; 24 nonmentored, 21 mentored) returned a review of the final manuscript. Ten residents were withdrawn due to lack of participation, and 5 asked to be withdrawn. Preliminary RQI evaluation of the first-manuscript reviews by a single reviewer revealed an average score of 26.4 ± 6.7 out of 40 points. RQI evaluation of the first and final manuscript reviews by 2 independent reviewers is pending.

Conclusion

This multicenter pilot study will determine whether peer review of standardized manuscripts with faculty mentoring is a feasible and potentially effective method for teaching neurology residents about peer review and research methodology.

1Oregon Health & Science University, Department of Neurology, Portland, OR, USA, vwongmd@gmail.com; 2Wake Forest School of Medicine, Department of Neurology, Winston-Salem, NC, USA; 3Columbia University, Department of Neurology, New York, NY, USA

Conflict of Interest Disclosures

Mitchell Elkind receives compensation from the American Academy of Neurology for serving as the associate editor for the Resident and Fellow Section.

Funding/Support

This project is funded by an Education Research Grant from the American Academy of Neurology Institute (AANI, the American Academy of Neurology’s education affiliate). The AANI is aware of this abstract submission, but otherwise has no role in the design and conduct of the study; collection, management, analysis, and interpretation of the data; or preparation, review, or approval of the abstract.

The Reporting of Randomized TrIals: Changes After Peer RevIew (CAPRI)

Sally Hopewell,1,2 Gary Collins,1 Ly-Mee Yu,1 Jonathan Cook,1,3 Isabelle Boutron,2 Larissa Shamseer,4 Milensu Shanyinde,1 Rose Wharton,1 Douglas G. Altman1

Objective

Despite wide use of peer review, little is known about its impact on the quality of reporting of published research. Numerous reviews showing poor reporting in published research suggest that peer reviewers frequently fail to detect important deficiencies. The aims of our study are to examine (1) the nature and extent of changes made to manuscripts after peer review in relation to the reporting of methodological aspects of randomized trials and (2) the type of methodological changes requested by peer reviewers and the extent to which authors adhere to these requests.

Design

This is a retrospective, before-and-after study. We included all primary reports of randomized trials published in BMC Medical Series journals in 2012. We chose these journals because they publish all submitted versions of a manuscript along with the corresponding peer review comments and author responses. Using the prepublication history for each published trial report, we examined (1) any differences (ie, additions, changes, or deletions) in reporting between the original and final submitted versions of the manuscript and (2) whether specific CONSORT items were reported. From the prepublication history for each report, we also assessed peer reviewers’ comments on the reporting of methodological issues. Our main outcome is the percentage improvement in reporting following peer review, measured as the number of CONSORT checklist items reported.

Results

We identified 86 primary reports of randomized trials. The median interval between the original and final submitted versions of a manuscript was 146 days (range, 29-333), with 36 days (range, 7-127) from final submission to online publication. The number of submitted versions of a manuscript varied (median, 3; range, 2-7), with a median of 2 peer reviewers and 2 review rounds per manuscript. Changes between the original and final submitted versions were common: overall, the median proportion of words deleted from the original manuscript was 11% (range, 1%-59%), and the median proportion of words added was 20% (range, 4%-68%).

Conclusions

There was substantial variation in the timescale and extent of revisions between the original and final submitted versions. Further data extraction and an in-depth analysis of the nature and extent of these changes are under way.

1Centre for Statistics in Medicine, University of Oxford, Oxford, UK, sally.hopewell@csm.ox.ac.uk; 2Centre d’Epidémiologie Clinique, Université Paris Descartes, Paris, France; 3Health Services Research Unit, University of Aberdeen, Aberdeen, UK; 4Ottawa Hospital Research Institute, Ottawa, ON, Canada

Conflict of Interest Disclosures

None reported.

Ethical Issues and Misconduct

Identical or Nearly So: Duplicate Publication as a Separate Publication Type in PubMed

Mario Malički,1 Ana Utrobičić,2 Ana Marušić1

Objective

“Duplicate Publication” was introduced into Medical Subject Headings (MeSH) of the National Library of Medicine (NLM) in 1991 as a separate publication type and is defined as “work consisting of an article or book of identical or nearly identical material published simultaneously or successively to material previously published elsewhere, without acknowledgment of the prior publication.” Our aim was to assess how journals corrected duplicate publications indexed by NLM and how these corrections were visible in PubMed.

Design

The data set included 1,011 articles listed as “duplicate publication [pt]” in PubMed on January 16, 2013. We checked PubMed to identify whether duplicate articles were linked with a Correction/Comment notice. We also checked the journals’ websites for published notices and identified the reasons provided for the duplications in those notices. The time from the duplicate article publication to the notice of duplication/retraction in PubMed and/or journals was also recorded.

Results

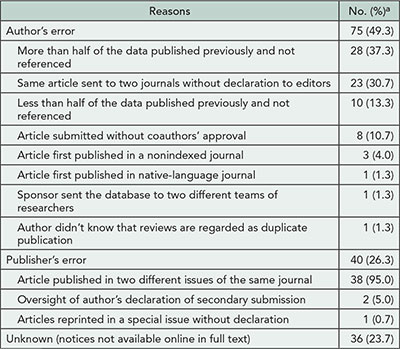

A total of 624 duplicate publications (61.7%) identified in PubMed lacked any notice in the respective journals. There were 152 notices of 342 duplicate publications (ie, articles published twice or more) found in journals and marked as Comments/Corrections in PubMed. The reasons for duplication are presented in Table 6. Median time from duplicate publication to notice of duplication was 8 months (95% CI, 6-10). Of articles with notices, 130 of 152 were available online, but only 34 (26.1%) had links to the published notices of duplication. Of indexed duplicate publications, 24 (2.4%) articles were retracted: 10 due to publishers’ errors and 11 due to authors’ errors (3 notices could not be accessed). Of these retractions, 14 were marked as “retracted publication [pt]” in PubMed, and 10 more were found only on journal websites.

Table 6. Reasons for Duplication of Articles With Published Notices of Duplicate Publication (n=152)

Percentages for statements within individual categories are calculated relative to the total for that category.

Conclusions

More than half of duplicate publications identified in PubMed have not been corrected by journals. All stakeholders in research publishing should take seriously the integrity of the published record and take a proactive role in alerting the publishing community to redundant publications.

1University of Split School of Medicine, Department of Research in Biomedicine and Health, Split, Croatia, ana.marusic@mefst.hr; 2Central Medical Library, University of Split School of Medicine, Split, Croatia

Conflict of Interest Disclosures

None reported.

Fate of Articles That Warranted Retraction Due to Ethical Concerns: A Descriptive Cross-sectional Study

Nadia Elia,1 Elizabeth Wager,2 Martin R. Tramèr3

Objective

Guidelines on how to retract articles exist. Our objective was to verify whether articles that warranted retraction due to ethical concerns have been retracted, and whether this had been done according to published guidelines.

Design

Descriptive cross-sectional study, as of January 2013, of 88 articles by Joachim Boldt, published in 18 journals, which warranted retraction because the State Medical Association of Rheinland-Pfalz (Germany) was unable to confirm approval by an ethics committee. According to the recommendations of the Committee on Publication Ethics, we regarded a retraction as adequate when a retraction notice was published, linked to the retracted article, identified the title and authors of the retracted article in its heading, explained the reason and who took responsibility for the retraction, and was freely accessible. Additionally, we expected the full text of retracted articles to be freely accessible and marked using a transparent watermark that preserved the original content. Two authors extracted the data independently and contacted editors in chief for clarification in cases of inadequate retraction.
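The adequacy criteria above amount to a per-article checklist. The sketch below is illustrative only: it assumes each criterion was coded as a yes/no judgment and that an article counts as adequately retracted only when every item, including the two full-text expectations, is met. The field names are hypothetical labels, not the authors’ actual coding scheme.

```python
from dataclasses import dataclass, fields


@dataclass
class RetractionChecklist:
    """Hypothetical yes/no coding of one article against the criteria above."""
    notice_published: bool
    notice_linked_to_article: bool
    heading_names_title_and_authors: bool
    reason_explained: bool
    responsible_party_named: bool
    notice_freely_accessible: bool
    full_text_freely_accessible: bool
    full_text_transparently_watermarked: bool  # original content still readable


def unmet_criteria(article: RetractionChecklist) -> list[str]:
    """Names of the checklist items this article fails, in declaration order."""
    return [f.name for f in fields(article) if not getattr(article, f.name)]


def is_adequate(article: RetractionChecklist) -> bool:
    """Adequate only if every criterion is met (an assumption of this sketch)."""
    return not unmet_criteria(article)


# An article whose notice is complete but whose full text has an opaque watermark.
opaque = RetractionChecklist(True, True, True, True, True, True, True, False)
print(is_adequate(opaque), unmet_criteria(opaque))
# False ['full_text_transparently_watermarked']
```

Returning the list of unmet items, rather than a single yes/no, mirrors how the results report which criteria failed (eg, incomplete notices vs opaque watermarks).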

Results

Five articles (5.7%), from 1 journal, fulfilled all criteria for adequate retraction. Nine articles (10.2%) were not retracted (no retraction notice published, full-text article not marked). A total of 79 (90%) retraction notices were published, 76 (86%) were freely accessible, but only 15 (17%) were complete. Seventy-three (83%) full-text articles were marked as retracted, of which 14 (15.9%) had an opaque watermark hiding parts of the original content, and 11 (12.5%) had all original content deleted. Fifty-nine (67%) retracted articles were freely accessible. One editor in chief cited personal problems to explain incomplete retractions; 8 blamed their publishers. One publisher regretted that no mechanism existed for a journal’s previous publisher to undertake retractions that were required after the journal had changed publishers. Two publishers mentioned legal threats from Boldt’s coauthors that prevented them from retracting 6 articles.

Conclusions

Guidelines for retraction of articles are incompletely followed. The role of publishers in the retraction process needs to be clarified and standards are needed on how to mark the text of retracted articles. Legal safeguards are required to allow the retraction of articles against the wishes of authors.

1Division of Anaesthesiology, Geneva University Hospitals, Geneva, Switzerland, nadia.elia@hcuge.ch; 2Sideview, Princes Risborough, UK; 3Division of Anaesthesiology, Geneva University Hospitals, and Medical Faculty, University of Geneva, Geneva, Switzerland

Conflict of Interest Disclosures

Nadia Elia is associate editor, European Journal of Anaesthesiology. Elizabeth Wager is a coauthor of COPE retraction guidelines. Martin Tramèr is editor in chief, European Journal of Anaesthesiology. No other disclosures were reported.

Value of Plagiarism Detection for Manuscripts Submitted to a Medical Specialty Journal

Heidi L. Vermette,1 Rebecca S. Benner,1 James R. Scott1,2

Objective

To assess the value of a plagiarism detection system for manuscripts submitted to Obstetrics & Gynecology.

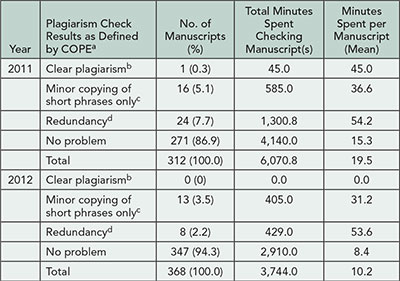

Design

All revised manuscripts identified as candidates for publication between January 24, 2011, and December 26, 2012, were evaluated using the CrossCheck plagiarism detection software. Outcomes were (1) number and percentage of manuscripts with clear plagiarism, minor copying of short phrases only, redundancy, or no problem as defined by the Committee on Publication Ethics (COPE); (2) time needed to check each manuscript; and (3) actions taken for manuscripts with violations.

Results

Clear plagiarism was detected in 1 (0.3%) of 312 manuscripts in 2011 and 0 of 368 manuscripts in 2012 (Table 7). Forty (12.8%) manuscripts in 2011 and 21 (5.7%) manuscripts in 2012 contained minor copying of short phrases only or redundancy. Our staff spent a mean time of 19.5 minutes per manuscript checking for plagiarism in 2011, and this decreased to 10.2 minutes per manuscript in 2012 (P<.001). The plagiarized manuscript, a case report, was rejected. A detailed description of the plagiarized content was included in the letter to the author. The authors of manuscripts with minor problems and redundancy were asked to rewrite or properly attribute the reused or redundant passages, and all of these manuscripts were eventually published.

Table 7. Detection Rate and Time Spent on Plagiarism Checking

aDefinitions from COPE available at http://publicationethics.org/files/u2/02A_plagiarism_submitted.pdf.

bPer COPE: “unattributed use of large portions of text and/or data, presented as if they were by the plagiarist.”

cPer COPE: for example, “in discussion of research paper from non-native language speaker; No misattribution of data.”

dPer COPE: “copying from author’s own work.”

Conclusions

CrossCheck is a practical tool for detecting duplication of exact phrases, but it is limited to searching the English-language literature accessible via various repositories and the Internet and does not replace careful peer review and editor assessment. Checking manuscripts for plagiarism represents a substantial time investment for staff. Two years of data from our journal indicate that the number of problems detected was low, but systematic assessment of manuscripts uncovered problems that otherwise would have appeared in print.

1Obstetrics & Gynecology, American College of Obstetricians and Gynecologists, Washington, DC, USA, rbenner@greenjournal.org; 2University of Utah School of Medicine, Department of Obstetrics and Gynecology, Salt Lake City, UT, USA

Conflict of Interest Disclosures

None reported.

Implementation of Plagiarism Screening for the PLOS Journals

Elizabeth Flavall,1 Virginia Barbour,2 Rachel Bernstein,1 Katie Hickling,2 Michael Morris1

Objective

PLOS publishes a range of journals, from highly selective journals to 1 that assesses submissions based only on objective criteria. Overall, the journals receive more than 5,000 submissions per month. We aimed to assess the quantity of submitted manuscripts with potential plagiarism issues, along with the staffing requirements and optimal procedures for implementing plagiarism screening using CrossCheck/iThenticate software across the PLOS journals.

Design

Consecutively submitted research articles were screened until the following numbers were reached: PLOS Medicine (n=203), PLOS Pathogens (n=250), and PLOS ONE (n=241). Articles were screened at initial submission, before any revision requests. Initial screening was performed by junior staff members, and potential issues were elevated in-house for further screening. Manuscripts were classified as having a major problem, a minor problem, or no problem. Extent, originality, and context were assessed using guidance from the Committee on Publication Ethics (COPE) Discussion Document “How should editors respond to plagiarism?” Manuscripts with major problems were flagged but otherwise continued through the review process as normal. Any flagged manuscripts that were not rejected in the first round of review were elevated to journal editors and managers for follow-up.

Results

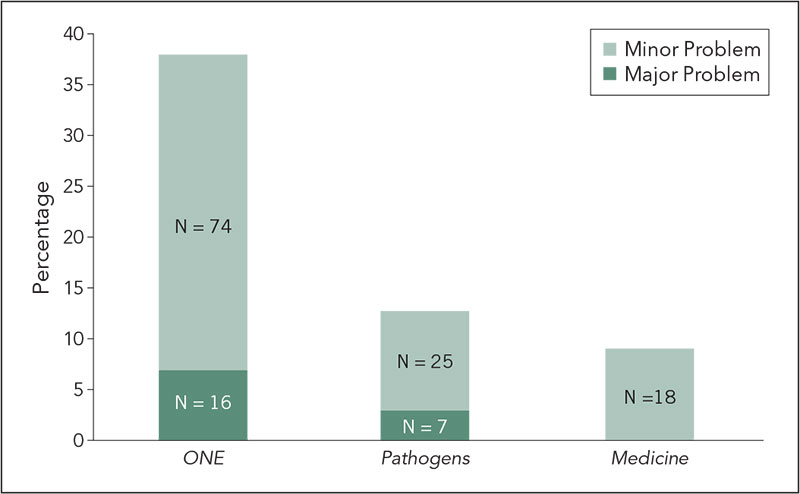

The percentages of manuscripts classified as having any problem (major or minor) are shown in Figure 3.

Of the manuscripts classified as having a major problem, almost all were rejected in the first round of review for reasons unrelated to the screening results. Only 1 manuscript with major problems, submitted to PLOS Pathogens, was elevated to the editors for follow-up because it was not rejected following peer review. Minor problems consisted primarily of small instances of self-plagiarism and attributed copying; follow-up was deemed unnecessary for the purposes of this study. Screening took on average 7 minutes per manuscript (range, 2-60 minutes), with more time spent on articles with problems. The total screening time per journal over the 3-month pilot was PLOS Medicine, 16 hours; PLOS Pathogens, 29 hours; and PLOS ONE, 42 hours.

Figure 3. Percent of Manuscripts Classified as Having a Problem When Screened for Plagiarism

Conclusion

Based on the results of this study, we have determined that plagiarism screening is feasible at PLOS and have made recommendations for the optimal screening procedures on the different journals.

1PLOS, San Francisco, CA, USA, eflavall@plos.org; 2PLOS, Cambridge, UK

Conflict of Interest Disclosures

Virginia Barbour spoke on behalf of the Committee on Publication Ethics, of which she is Chair, at the 5th International Plagiarism Conference in 2012, which was organized by Plagiarism Today, and which is part of the company that makes iThenticate software. The authors report no other conflicts of interest.

Funding/Support

The authors are employees of PLOS; this work was conducted during their salaried time.

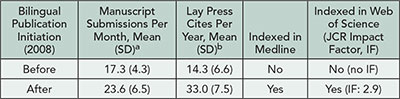

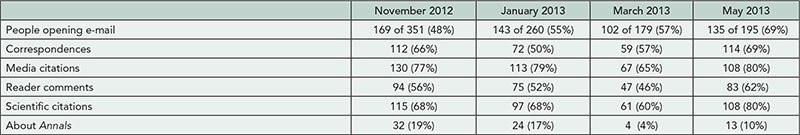

Publication Ethics: 16 Years of COPE

Irene Hames,1 Charon A. Pierson,2 Natalie E. Ridgeway,3 Virginia Barbour4

Objective

The Committee on Publication Ethics (COPE) holds a quarterly forum in which journal editors from its 8,500-strong membership can bring publication-ethics cases for discussion and advice. Since it was established in 1997, COPE has amassed a collection of almost 500 cases. We set out to develop a more comprehensive classification scheme and to classify all the cases, providing a finer level of detail for analysis. We wanted to determine trends and establish whether the analysis could be used to guide the development of new ethical guidelines.

Design

A new taxonomy was developed, comprising 18 main classification categories and 100 key words. Cases were assigned up to 2 classification categories to denote the main topics and up to 10 key words to cover all the issues. Classification and key word coding denote that a topic was raised and discussed, not that a particular form of publication misconduct had occurred.

Results

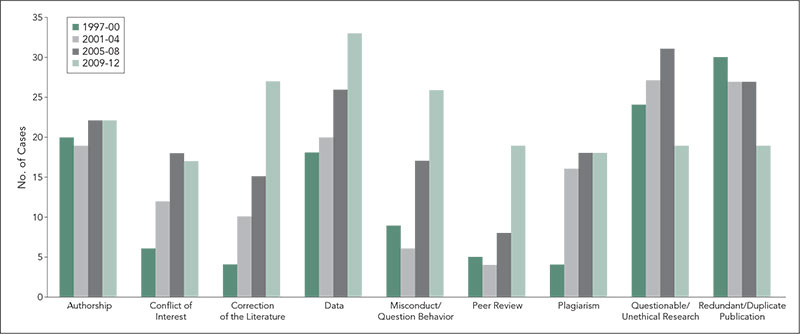

Between 1997 and the end of 2012, 485 cases were brought to COPE. These received 730 classification categories. The number of cases presented annually varied (from 16 to 42) but showed no clear pattern, and there was no increase in cases when COPE’s membership increased from 350 to about 3,500 in 2007-2008. The number of classifications per case has, however, increased gradually, from a mean of 1.3 in 1997-2000 to 1.7 in 2009-2012. Authorship and plagiarism have been and remain major topics (Figure 4). Categories of cases that have increased most noticeably are correction of the literature, data, misconduct/questionable behavior, conflicts of interest, and peer review. Cases involving questionable/unethical research and redundant/duplicate publication remain prominent but have been decreasing in recent years.

Figure 4. Classification of COPE Cases, 1997-2012 (for Categories With More Than 7 Classifications in a 4-Year Period)

Conclusions

The increasing number of case classification categories over time suggests an increasing complexity in the cases being brought to COPE. Cases in a number of categories are increasing. A key word analysis is being undertaken to provide a greater level of detail and to determine whether new guidelines could effectively be developed in any of those areas. The study is also being used to identify cases for inclusion in educational packages of publication-ethics cases for use by beginners through to experienced groups.

1Irene Hames Consulting, York, UK, irene.hames@gmail.com; 2Journal of the American Association of Nurse Practitioners; Charon A. Pierson Consulting, El Paso, TX, USA; 3Committee on Publication Ethics (COPE), UK; 4PLOS Medicine, Cambridge, UK

Conflict of Interest Disclosures

Irene Hames reports being a COPE Council member, director, and trustee since November 2010 (unpaid); and owner of Irene Hames Consulting (an editorial consultancy advising and informing the publishing, higher education, and research sectors); Charon Pierson reports being a COPE Council member since March 2011 (unpaid); and owner of Charon A. Pierson Consulting (paid consultant for geriatric education grants and online course development in gerontology at various universities). Natalie Ridgeway reports being the COPE Operations Manager since March 2010 (paid employee). Virginia Barbour reports being the chair of COPE since March 2012 and was previously COPE Secretary and Council Member (all unpaid).

Funding/Support

There is no funding support for this project; it is being carried out on a voluntary basis; all expenses necessary to carry out the project are being covered by COPE, as are the costs involved in attending the Congress for presentation of the study.

Monday, September 9

Abstracts from day 2 of the Seventh International Congress on Peer Review and Biomedical Publication will be posted at 8:00AM CST on Monday September 9.

Bias

Underreporting of Conflicts of Interest Among Trialists: A Cross-sectional Study

Kristine Rasmussen,1 Jeppe Schroll,1,2 Peter C. Gøtzsche,1,2 Andreas Lundh1

Objective

To determine the prevalence of conflicts of interest (COI) among non–industry-employed Danish physicians who are authors of clinical trials and to determine the number of undisclosed conflicts of interest in trial publications.

Design

We searched EMBASE for papers with at least 1 Danish author. Two assessors included the 100 most recent papers of drug trials published in international journals that adhere to the ICMJE’s manuscript guidelines. For each paper, 2 assessors independently extracted data on trial characteristics and author COI. We calculated the prevalence of disclosed COI among non–industry-employed Danish physician authors and described the type of COI. We compared the COI reported in the papers to those reported on the publicly available Danish Health and Medicines Authority’s disclosure list to identify undisclosed COI.

Results

Preliminary analysis of the first 50 included papers found 27 papers with industry sponsorship, 14 with mixed sponsorship, and 9 with nonindustry sponsorship. Of a total of 563 authors, 171 (30%) were non–industry-employed Danish physicians. Forty-four (26%) of these authors disclosed 1 or more COI in the journal. Among the 171 authors, 19 (11%) had undisclosed COI related to the trial sponsor or manufacturer of the drug being studied, and 45 (26%) had undisclosed COI related to competing companies manufacturing drugs for the same indication as the trial drug. Full analysis of all 100 trials and further exploration of data will be presented at the conference.

Conclusions

Our preliminary results suggest that there is substantial underreporting of COI in clinical trials. Publicly available disclosure lists may assist journal editors in ensuring that all relevant COI are disclosed.

1The Nordic Cochrane Centre, Department of Rigshospitalet, Copenhagen, Denmark, al@cochrane.dk; 2Faculty of Health and Medical Sciences, University of Copenhagen, Denmark

Conflict of Interest Disclosures

None reported.

Funding/Support

This study received no external funding. The Nordic Cochrane Centre provided in-house resources.

Outcome Reporting Bias in Trials (ORBIT II): An Assessment of Harm Outcomes

Jamie Kirkham,1 Pooja Saini,1 Yoon Loke,2 Douglas G. Altman,3 Carrol Gamble,1 Paula Williamson1

Objective

The prevalence and impact of outcome reporting bias (ORB), whereby outcomes are selected for publication on the basis of the result, have previously been quantified for benefit outcomes in randomized controlled trials (RCTs) in a cohort of systematic reviews. Important harm outcomes may also be subject to ORB when trialists prefer to focus on the positive benefits of an intervention. The objectives of this study were to (1) estimate the prevalence of selective outcome reporting of harm outcomes in a cohort of both Cochrane reviews and non-Cochrane reviews and (2) understand the mechanisms that may lead to incomplete reporting of harms data.

Design

A classification system for detecting ORB for harm outcomes in RCTs and nonrandomized studies was developed and applied to both a cohort of Cochrane systematic reviews and non-Cochrane reviews that considered the synthesis of specific harms data as their main objective. An e-mail survey of trialists from the included trials in the cohort of reviews was also undertaken to examine how harms data are collected and reported in clinical studies.

Results

A total of 234 reviews were identified for the non-Cochrane review cohort and 244 new reviews for the Cochrane review cohort. In 77% (180/234) of the non-Cochrane reviews, there was suspicion of ORB in at least 1 trial. Forty-nine percent (89/180) could not be fully assessed for ORB due to shortcomings in the review reporting standards. In the Cochrane review cohort, many reviews also could not be assessed because harm outcomes were poorly specified. Study findings from the reviews in which a full assessment for ORB could be carried out for both cohorts will be presented. Responses from the trialist survey and an example of how ORB can influence the benefit-harm ratio will also be presented.

Conclusions

The trade-off between benefits and harms is very important. Informed decisions that consider both the benefits and the harms of an intervention in an unbiased way are essential for reliable benefit-harm predictions.

1Department of Biostatistics, University of Liverpool, Liverpool, UK, jjk@liv.ac.uk; 2School of Medicine, University of East Anglia, Norwich, UK; 3Centre for Statistics in Medicine, University of Oxford, Oxford, UK

Conflict of Interest Disclosures

Yoon Loke is a co-convenor of the Cochrane Adverse Effects Methods Group. No other disclosures were reported.

Funding/Support

The Outcome Reporting Bias In Trials (ORBIT II) project is funded by the Medical Research Council (MRC research grant MR/J004855/1). The funders had no role in the study design, data collection, and analysis.

Systematic Review of Evidence for Selective Reporting of Analyses

Kerry Dwan,1 Paula R. Williamson,1 Carrol Gamble,1 Julian P. T. Higgins,2 Jonathan A. C. Sterne,2 Douglas G. Altman,3 Mike Clarke,4 Jamie J. Kirkham1

Objective

Selective reporting of information, or discrepancies between protocol and publication, may occur for many aspects of a trial. Examples include the selective reporting of outcomes and the selective reporting of analyses (eg, subgroup analyses or per-protocol rather than intention-to-treat analyses). Selective reporting bias occurs when the inclusion of analyses in the report is based on the results of those analyses. Discrepancies occur when there are changes between protocol and publication. The objectives of this study were to (1) review and summarize the evidence from studies that have assessed discrepancies or the selective reporting of analyses in randomized controlled trials and (2) compare current reporting guidelines to identify where improvement is needed.

Design

Systematic review of studies that have assessed discrepancies or the selective reporting of analyses in randomized controlled trials. The Cochrane methodology register, Medline, and PsycInfo were searched in May 2013. Cohorts containing randomized controlled trials (RCTs) were eligible. This review provides a descriptive summary of the included empirical studies. In collaboration with experts in this area, we compared current guidelines, such as Consolidated Standards of Reporting Trials (CONSORT) and International Conference on Harmonisation (ICH), to identify the specific points that address the appropriate reporting of a clinical trial with respect to outcomes, outcome measures, subgroups, and analyses and to assess whether improvements are needed.

Results

Eighteen studies have been included in this review. Ten compare details within published reports, 4 compare protocols to publications, and 4 compare company documents or documents submitted to regulatory agencies with publications. The studies consider discrepancies in statistical analyses (7); subgroup analyses (9); and composite outcomes (2). No studies considered selective reporting. There were discrepancies in statistical analyses in 22% to 88% of RCTs, in unadjusted vs adjusted analyses (46% to 82%), and in subgroup analyses (31% to 100%). Composite outcomes were inadequately reported.

Conclusion

This work highlights the evidence of selective reporting and discrepancies and demonstrates the importance of prespecifying analysis and reporting strategies during the planning and design of a clinical trial, for the purposes of minimizing bias when the findings are reported.

1University of Liverpool, Department of Biostatistics, Liverpool, UK, kdwan@liverpool.ac.uk; 2University of Bristol, Bristol, UK; 3University of Oxford, Oxford, UK; 4Queen’s University, Belfast, UK

Conflict of Interest Disclosures

Kerry Dwan is a coauthor of the Outcome Reporting Bias In Trials (ORBIT) study. Paula Williamson is a coauthor of one of the included studies in the review and coauthor of the Outcome Reporting Bias In Trials (ORBIT) study. Carrol Gamble is a coauthor of the Outcome Reporting Bias In Trials (ORBIT) study. Douglas G. Altman is a coauthor of one of the included studies in the review, coauthor of the Outcome Reporting Bias In Trials (ORBIT) study, and a coauthor of the CONSORT statement. Jamie Kirkham is a coauthor of the Outcome Reporting Bias In Trials (ORBIT) study. Julian Higgins, Jonathan Sterne, and Mike Clarke report no conflicts of interest.

Funding/Support

This work was supported by the MRC Network of Hubs for Trial Methodology Research. The sponsor has no role in this work.

Impact of Spin in the Abstract on the Interpretation of Randomized Controlled Trials in the Field of Cancer: A Randomized Controlled Trial

Isabelle Boutron,1-4 Douglas G. Altman,5 Sally Hopewell,1,4,5 Francisco Vera-Badillo,6 Ian Tannock,6 Philippe Ravaud1-4,7

Objective

Spin is defined as a specific way of reporting to convince readers that the beneficial effect of the experimental treatment is greater than is shown by the results. The aim of this study is to assess the impact of spin in abstracts of cancer randomized controlled trials (RCTs) with non–statistically significant results on readers’ interpretation.

Design

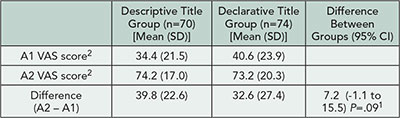

A 2-arm parallel-group RCT comparing the interpretation of results in abstracts with or without spin. From a collection of articles identified in previous work, we selected a sample of reports describing negative (ie, statistically nonsignificant primary outcome) RCTs with 2 parallel arms evaluating treatments in the field of cancer and having spin in the abstract conclusion. Selected abstracts were rewritten by 2 researchers according to specific guidelines to remove spin. All abstracts were presented in the same format without identifying the authors or journal name. The names of treatments were masked by using generic terms (eg, experimental treatment A). Corresponding authors (n=300) of clinical trials indexed in PubMed and blinded to the objectives of our study will be randomized using a centralized computer-generated randomization to evaluate 1 abstract with spin or 1 abstract without spin. The primary endpoint is the interpretation of abstract results by the participants. After reading the abstract, participants will answer the following question: “Based on this abstract, do you think treatment A would be beneficial to patients?” (answer: numerical scale from 0-10).

Results

Three hundred participants were randomized; 150 assessed an abstract with spin and 150 an abstract without spin. Participants who read abstracts with spin rated the experimental treatment as more beneficial (scale 0-10, mean [SD] = 3.6 [2.5] vs 2.9 [2.6]; P=.02), rated the trial as less rigorous (scale 0-10, mean [SD] = 4.5 [2.4] vs 5.1 [2.5]; P=.04), and were more interested in reading the full-text article (scale 0-10, mean [SD] = 5.1 [3.2] vs 4.3 [3.0]; P=.03). There was no statistically significant difference in the rated importance of the study (scale 0-10, mean [SD] = 4.6 [2.4] vs 4.9 [2.4]; P=.17) or the need to run another trial (scale 0-10, mean [SD] = 4.8 [2.9] vs 4.2 [2.9]; P=.06).

Conclusion

Spin in abstracts of RCTs in the field of cancer may have an impact on the interpretation of these trials.

1INSERM U738, Paris, France; 2Centre d’Épidémiologie Clinique, AP-HP (Assistance Publique des Hôpitaux de Paris), Hôpital Hôtel Dieu, Paris, France, isabelle.boutron@htd.aphp.fr; 3Paris Descartes University, Sorbonne Paris Cité, Faculté de Médecine, Paris, France; 4French Cochrane Center, Paris, France; 5Centre for Statistics in Medicine, Oxford University, Oxford, UK; 6University of Toronto, Toronto, ON, Canada; 7Department of Epidemiology, Columbia University Mailman School of Public Health, New York, NY, USA

Conflict of Interest Disclosures

None reported.

Funding/Support

The study received a grant from the French Ministry of Health (Programme Hospitalier de Recherche Clinique cancer) and the Fondation pour la Recherche Médicale (FRM, Equipe Espoir de la Recherche 2010). The funders had no role in the design and conduct of this study.

Publication Bias

Authors’ Reasons for Unpublished Research Presented at Biomedical Conferences: A Systematic Review

Roberta W. Scherer, Cesar Ugarte-Gil

Objective

Only about half of studies presented in conference abstracts are subsequently published in full. Failure to publish abstract results in full is often attributed to expected rejection by journals. We aimed to systematically review studies that asked abstract authors for their reasons for not publishing abstract results in full.

Design

We searched Medline, EMBASE, the Cochrane Library, Web of Science, and references cited in eligible studies in November 2012 for studies examining full publication of results at least 2 years after presentation at a conference. We included studies if investigators contacted abstract authors for reasons for nonpublication. We independently extracted information on the methods used to contact abstract authors, study design, and reasons for nonpublication. For each type of reason, we calculated a weighted mean average (WMA) of the proportion of reasons of that type, weighting each study by its total number of responses.
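The weighted mean average described above can be sketched as follows. The per-study proportions and response counts are hypothetical illustration values, not data from the review, and the function name is an assumption.

```python
def weighted_mean_average(proportions, weights):
    """Weighted mean of per-study proportions, weighted by each study's response count."""
    if len(proportions) != len(weights) or not weights:
        raise ValueError("need exactly one weight per proportion")
    total = sum(weights)
    return sum(p * w for p, w in zip(proportions, weights)) / total


# Hypothetical: share of reasons citing "lack of time" in 3 studies
props = [0.25, 0.40, 0.30]
responses = [40, 100, 60]
wma = weighted_mean_average(props, responses)
print(round(wma, 3))  # 0.34
```

When each proportion is a study's count of one reason type divided by its total responses, weighting by those totals is equivalent to pooling all reasons across studies, so larger studies contribute proportionally more.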

Results

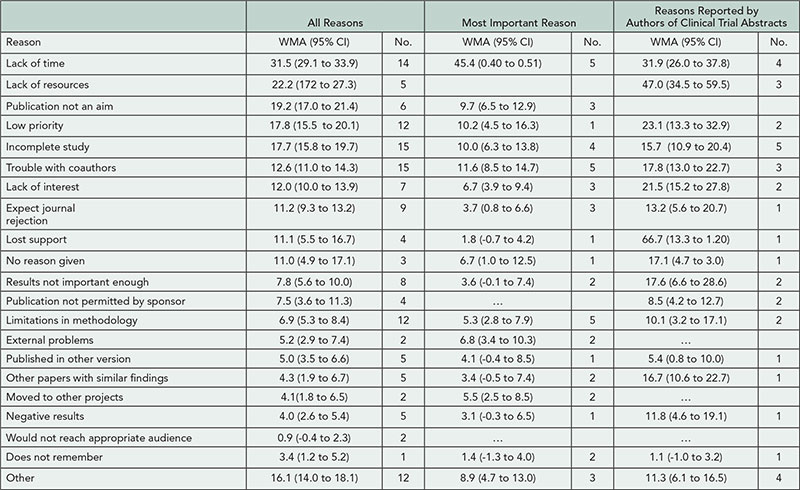

We identified 27 (of 367) studies published between 1992 and 2011 that were eligible for this review. The mean full publication rate was 56% (95% CI, 55% to 57%; n=24); 7 studies reported on abstracts describing clinical trials. Investigators typically sent a closed-ended questionnaire with free-text options to the lead author and then to successive authors until they received a response. Of 24 studies that itemized reported reasons, 6 collected information on the most important reason. Lack of time accounted for 31.5% of reasons in studies that included it as an option and for 45.4% of reasons cited as most important (Table 8). Other commonly stated reasons were lack of resources, publication not being an aim, low priority, incomplete study, and trouble with coauthors. Limitations of these results include heterogeneity across studies and authors’ self-reporting of reasons.

Table 8. Proportion of Reasons for Nonpublication by Total Number of Reasons Reported

Columns include studies reporting multiple reasons, the most important reason, or the reasons reported by authors of clinical trial abstracts. WMA indicates weighted mean average.

Conclusion

Across medical specialties, the main reasons for not subsequently publishing an abstract in full lie with factors related to the abstract authors rather than with journals.

Johns Hopkins Bloomberg School of Public Health, Baltimore, MD, USA, rscherer@jhsph.edu

Conflict of Interest Disclosures

None reported.

Funding/Support

Support was provided by the National Eye Institute, National Institutes of Health (U01EY020522-02). The sponsor had no input in the design or conduct of this study.

Role of Editorial and Peer Review Processes in Publication Bias: Analysis of Drug Trials Submitted to 8 Medical Journals

Marlies van Lent,1 John Overbeke,2 Henk Jan Out1

Objective

Positive publication bias has been widely addressed. It has mainly been ascribed to authors and sponsors failing to submit negative studies, but may also result from lack of interest from editors. In this study, we evaluated whether submitted manuscripts with negative outcomes were less likely to be published than studies with positive outcomes.

Design

We conducted a retrospective study of manuscripts reporting results of randomized controlled trials (RCTs) submitted to 8 medical journals between January 1, 2010, and April 30, 2012. We included 1 general medical journal (BMJ) and 7 specialty journals (Annals of the Rheumatic Diseases, British Journal of Ophthalmology, Diabetologia, Gut, Heart, Journal of Hepatology, and Thorax). We selected journals with the highest Impact Factors within their subject categories, according to the Institute for Scientific Information Journal Citation Report 2011, that had also published a substantial number of drug RCTs in 2010-2011. Original research manuscripts were screened, and those reporting results of RCTs were included if at least 1 study arm assessed the efficacy or safety of a drug intervention and a statistical test was used to evaluate treatment effects. Manuscripts were either rejected outright, rejected after external peer review, or accepted for publication. Trials were classified as nonindustry, industry-supported, or industry-sponsored, and outcomes as positive or negative, based on predefined criteria.

Results

Of 15,972 manuscripts submitted, we identified 472 drug RCTs (3.0%), of which 98 (20.8%) were accepted for publication. Among submitted drug RCTs, 287 (60.8%) had positive and 185 (39.2%) negative results. Of these, 135 (47.0%) and 86 (46.5%), respectively, were rejected immediately and 91 (31.7%) and 61 (33.0%) after peer review. Overall, 60 of the 287 submitted positive studies (20.9%) were published, compared with 38 of the 185 submitted negative studies (20.5%). One positive study was withdrawn by its authors before an editorial decision was made. Nonindustry trials (n=213) had positive outcomes in 138 manuscripts (64.8%), compared with 78 (70.9%) of industry-sponsored trials (n=110). Industry-supported trials (n=149) were positive in 71 manuscripts (47.7%) and negative in 78 manuscripts (52.3%).
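The near-identical publication rates for positive and negative drug RCTs can be checked with a 2×2 table of outcome by publication status. The sketch below uses a Pearson chi-square test without continuity correction; the abstract does not say which test, if any, the authors used, so this is an illustrative assumption. The "not published" cells include the single withdrawn positive study.

```python
def pearson_chi2_2x2(a, b, c, d):
    """Pearson chi-square (no continuity correction) for the table [[a, b], [c, d]]."""
    n = a + b + c + d
    rows = (a + b, c + d)
    cols = (a + c, b + d)
    observed = ((a, b), (c, d))
    chi2 = 0.0
    for i in range(2):
        for j in range(2):
            expected = rows[i] * cols[j] / n  # expected count under independence
            chi2 += (observed[i][j] - expected) ** 2 / expected
    return chi2


# Rows: positive vs negative drug RCTs; columns: published vs not published
pos_pub, pos_total = 60, 287
neg_pub, neg_total = 38, 185
chi2 = pearson_chi2_2x2(pos_pub, pos_total - pos_pub, neg_pub, neg_total - neg_pub)
print(f"published: {pos_pub / pos_total:.1%} vs {neg_pub / neg_total:.1%}; chi2 = {chi2:.3f}")
```

The statistic is far below 3.84, the 5% critical value for 1 degree of freedom, consistent with the conclusion that negative trials were not less likely to be published.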

Conclusion

At these 8 journals, submitted manuscripts on drug RCTs with negative outcomes were not less likely to be published than those with positive outcomes.

1Clinical Research Centre Nijmegen, Department of Pharmacology–Toxicology Radboud University Nijmegen Medical Centre, Nijmegen, the Netherlands, M.vanLent@pharmtox.umcn.nl; 2Medical Scientific Publishing, Department of Primary and Community Care, Radboud University Nijmegen Medical Centre, Nijmegen, the Netherlands

Conflict of Interest Disclosures

Henk Jan Out is an employee of Teva Pharmaceuticals in addition to his professorship at the university. John Overbeke is the immediate past-president of the World Association of Medical Editors (WAME). Marlies van Lent reports no conflicts of interest.

Funding/Support

This research was supported by an unrestricted educational grant from MSD. MSD (Merck, Sharp & Dohme) B.V., located in Oss, the Netherlands, is a Dutch subsidiary of Merck & Co, Inc. The funder had no role in the study’s design, data collection and analysis, or the preparation of this abstract.

Accessing Internal Company Documents for Research: Where Are They?

L. Susan Wieland,1 Lainie Rutkow,2 S. Swaroop Vedula,3 Christopher N. Kaufmann,2 Lori Rosman,4 Claire Twose,3,4 Nirosha Mahendraratnam,2 Kay Dickersin2

Objective

Internal pharmaceutical company data have attracted interest because they have been shown to differ sometimes from what is publicly reported and because they often make unpublished data available to researchers. However, internal company documents are not readily available; researchers have obtained such data through litigation and through requests to regulatory authorities. In contrast, repositories of internal tobacco industry documents, created through massive litigation, have supported diverse and informative research. Our objective was to describe the sources of internal corporate documents that had been used in health research, in order to document where these important and potentially useful data are located.

Design

Although our main interest was pharmaceutical industry documents, our initial search strategy was designed to identify research articles that used internal company documents from any industry. We searched PubMed and EMBASE, and 2 authors independently reviewed retrieved records for eligibility. We checked our findings against the Tobacco Documents Bibliography (http://www.library.ucsf.edu/tobacco/docsbiblio), citations to included articles, and lists from colleagues. When we discovered that we had missed many articles, informationists redesigned and ran an additional search to identify articles using pharmaceutical documents.

Results

Our initial electronic searches retrieved 9,305 records, of which 357 were eligible for our study. Ninety-one percent (325/357) used tobacco, 5% (17/357) pharmaceutical, and 4% (15/357) other industry documents. Most articles (325/357) posed research questions about the strategic behavior of the company. Despite extensive testing, our search did not retrieve all known studies: we missed 41% of the articles listed in the Tobacco Documents Bibliography, and reference lists led to 4 additional eligible pharmaceutical studies. Our redesigned search yielded 26,605 citations not identified by the initial search, which we judged too many to screen practically.

Conclusions

Searching for articles using internal company documents is difficult and resource-intensive. We suggest that indexed and curated repositories of internal company documents relevant to health research would facilitate locating and using these important documents.

1Brown University, Providence, RI, USA; 2Johns Hopkins Bloomberg School of Public Health, Baltimore, MD, USA, kdickers@jhsph.edu; 3Johns Hopkins University, Baltimore, MD, USA; 4William H. Welch Medical Library, Baltimore, MD, USA

Conflict of Interest Disclosures

None reported.

Trial Registration

Publication Agreements or “Gag Orders”? Compliance of Publication Agreements With Good Publication Practice 2 for Trials on ClinicalTrials.gov

Serina Stretton,1 Rebecca A. Lew,1 Luke C. Carey,1 Julie A. Ely,1 Cassandra Haley,1 Janelle R. Keys,1 Julie A. Monk,1 Mark Snape,1 Mark J. Woolley,1 Karen L. Woolley1-3

Objective

Good Publication Practice 2 (GPP2) recognizes the shared responsibility of authors and industry sponsors to publish clinical trial data and confirms authors’ freedom to publish. We quantified the extent and type of publication agreements between industry sponsors and investigators for phase 2-4 interventional clinical trials on ClinicalTrials.gov and determined whether these agreements were GPP2 compliant.

Design

Trial record data were electronically imported on October 7, 2012, and trials were screened for eligibility (phase 2-4, interventional, recruitment closed, results available, first received after November 10, 2009, any sponsor type, investigators not sponsor employees). Publication agreement information was manually imported from the Certain Agreements field. Two authors independently categorized agreement information for GPP2 compliance, resolving discrepancies by consensus. An independent academic statistician conducted all analyses.
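The eligibility screen listed above can be expressed as a simple filter over imported trial records. This is a minimal sketch assuming a hypothetical record layout (the `TrialRecord` fields are illustrative and are not the actual ClinicalTrials.gov export schema), with phase simplified to a single integer; sponsor type is deliberately not filtered, matching "any sponsor type".

```python
from dataclasses import dataclass
from datetime import date


# Hypothetical record layout; field names are NOT the ClinicalTrials.gov schema.
@dataclass
class TrialRecord:
    phase: int  # simplified to a single integer phase
    interventional: bool
    recruitment_closed: bool
    has_results: bool
    first_received: date
    investigators_are_sponsor_employees: bool


FIRST_RECEIVED_CUTOFF = date(2009, 11, 10)


def is_eligible(r: TrialRecord) -> bool:
    """Inclusion criteria as listed in the abstract; sponsor type is unrestricted."""
    return (
        2 <= r.phase <= 4
        and r.interventional
        and r.recruitment_closed
        and r.has_results
        and r.first_received > FIRST_RECEIVED_CUTOFF  # first received after the cutoff
        and not r.investigators_are_sponsor_employees
    )


records = [
    TrialRecord(3, True, True, True, date(2010, 5, 1), False),   # eligible
    TrialRecord(3, True, False, True, date(2010, 5, 1), False),  # still recruiting
    TrialRecord(2, True, True, True, date(2011, 2, 1), True),    # sponsor-employee investigators
]
eligible = [r for r in records if is_eligible(r)]
print(len(eligible))
```

The two exclusion reasons reported in the Results (trials still active, investigators who were sponsor employees) correspond to the second and third example records.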

Results

Of the 484 trials retrieved, 388 were eligible for inclusion and 96 were excluded (12 trials that were still active and 84 trials with investigators who were sponsor employees). Of the eligible trials, 81% (313/388) reported publication agreement information in the Certain Agreements field. Significantly more publication agreements reported on ClinicalTrials.gov were GPP2 compliant than noncompliant (74% [232/313] vs 26% [81/313], χ2 P<.001). Reasons for GPP2 noncompliance were insufficient, unclear, or ambiguous information reported (48%, 39/81), sponsor-required approval for publication (36%, 29/81), sponsor-required text changes (9%, 7/81), and sponsor bans on publication (7%, 6/81). Drug trials (180/255) were significantly less likely to have GPP2-compliant agreements than other trials (52/58; relative risk 0.79, 95% CI 0.70-0.89, P=.003). Publication agreement compliance varied among affiliates of the same sponsor. Follow-up of agreements with insufficient information and a contact e-mail (response rate, 12.5% [4/32]) revealed 2 additional agreements banning publication, 1 requiring approval, and 1 GPP2-compliant agreement.
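The relative risk for drug trials vs other trials can be recomputed from the reported counts. The abstract does not name its confidence-interval method; the sketch below assumes the common log-scale (Katz) interval, which, when rounded, reproduces the reported 0.79 (0.70-0.89). The function name is hypothetical.

```python
import math


def risk_ratio_ci(x1, n1, x2, n2, z=1.96):
    """Risk ratio of group 1 vs group 2 with a log-scale (Katz) confidence interval."""
    rr = (x1 / n1) / (x2 / n2)
    se_log = math.sqrt(1 / x1 - 1 / n1 + 1 / x2 - 1 / n2)  # SE of log(RR)
    lo = math.exp(math.log(rr) - z * se_log)
    hi = math.exp(math.log(rr) + z * se_log)
    return rr, lo, hi


# GPP2-compliant agreements: drug trials 180/255 vs other trials 52/58
rr, lo, hi = risk_ratio_ci(180, 255, 52, 58)
print(f"RR {rr:.2f} (95% CI {lo:.2f}-{hi:.2f})")  # RR 0.79 (95% CI 0.70-0.89)
```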

Conclusions

This study investigated publication agreements using the largest international public-access database of publication agreements. Most, but not all, publication agreements for clinical trials were consistent with GPP2. Although “gag orders” forbidding publication were infrequent, any such ban is unacceptable. Sponsors and their affiliates must ensure that publication agreements confirm authors’ freedom to publish data. Sponsors should also audit agreement information reported on ClinicalTrials.gov for compliance with GPP2 and for consistency with other publication agreement information.

1ProScribe Medical Communications, Noosaville, Queensland, Australia, ss@proscribe.com.au; 2University of the Sunshine Coast, Maroochydore DC, Queensland, Australia; 3University of Queensland, Brisbane, Queensland, Australia

Conflict of Interest Disclosures

None reported.

Reporting of Results in ClinicalTrials.gov and Published Articles: A Cross-sectional Study

Jessica E. Becker,1 Harlan M. Krumholz,2 Gal Ben-Josef,1 Joseph S. Ross2

Objective

In 2007, the US Food and Drug Administration (FDA) Amendments Act expanded requirements for ClinicalTrials.gov, a public clinical trial registry maintained by the US National Library of Medicine, mandating results reporting within 12 months of trial completion for trials of FDA-regulated drugs. We compared clinical trial results reported on ClinicalTrials.gov with the corresponding published articles.

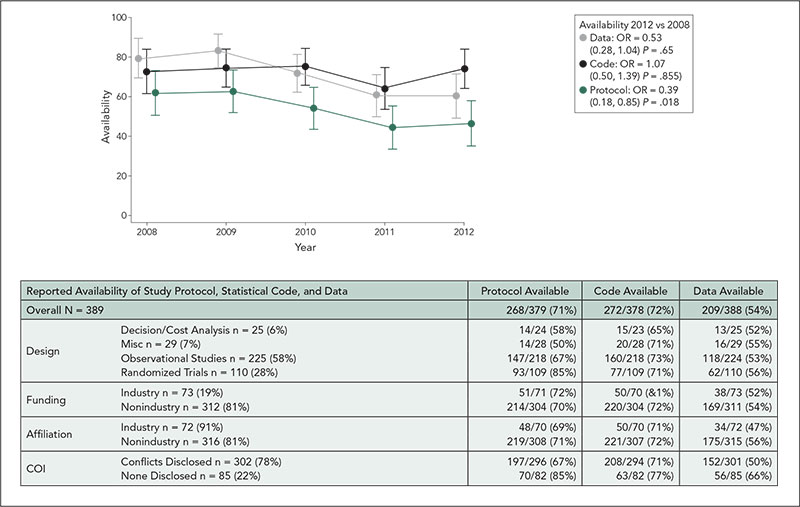

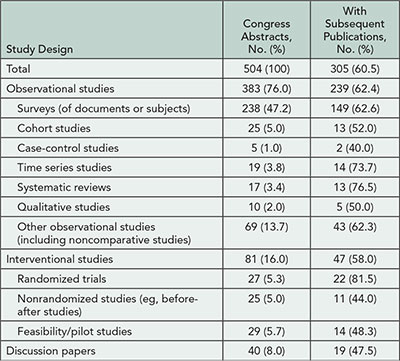

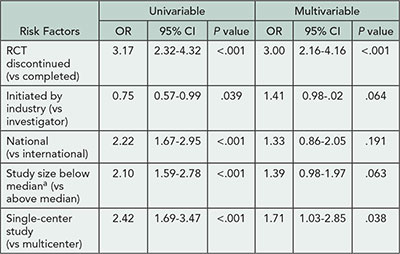

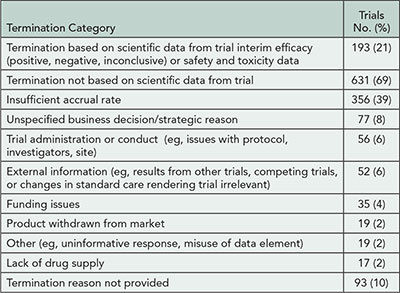

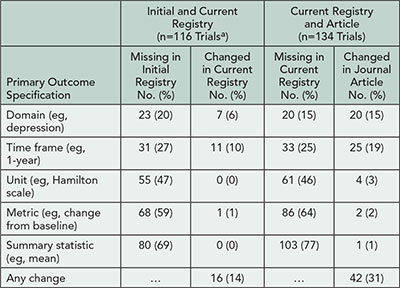

Design