Quantifying and Assessing the Use of Generative AI by Authors and Reviewers in the Cancer Research Field

Abstract

Daniel S. Evanko,1 Michael Di Natale1

Objective

This study assessed the ability to reliably detect the use of generative artificial intelligence (genAI) by authors and reviewers in the field of cancer research, quantified this usage over time, and measured the impact of a change in policy on use of genAI by reviewers.

Design

In this cross-sectional study, original research manuscripts and reviewer comments submitted to 10 journals of the American Association for Cancer Research from 2021 through 2024 were analyzed for the presence of AI-generated text. The abstract, methods section, and reviewer comments were extracted from the submission system along with author and reviewer metadata. These sections were chosen because (1) the plain text was stored in the submission system, thus eliminating the need for extraction from files, and (2) we hypothesized that authors would most likely use genAI in the abstract and had observed that methods can be prone to false positives. Text was analyzed using an AI detection tool and classified by average AI likelihood score. Discrimination between full de novo generation of text, language translation, and editing was not possible.
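The classification step can be sketched as follows. This is an illustration only, not the detection tool's method: the abstract does not name the tool or say what the score is averaged over, so the per-segment scores, the function names, and the averaging scheme are all assumptions. Only the 0.9 to 1 "highly likely" band comes from the Results.

```python
from statistics import mean

# Lower bound of the "highly likely" band reported in the Results (0.9 to 1).
HIGH_THRESHOLD = 0.9


def average_score(segment_scores):
    """Average hypothetical per-segment AI likelihood scores, each in [0, 1].

    Assumption: the tool returns one score per text segment. The abstract
    does not specify what the reported average is taken over.
    """
    if not segment_scores:
        raise ValueError("need at least one score")
    if any(s < 0.0 or s > 1.0 for s in segment_scores):
        raise ValueError("scores must lie in [0, 1]")
    return mean(segment_scores)


def is_highly_likely(segment_scores, threshold=HIGH_THRESHOLD):
    """Return True when the average score falls in the 'highly likely' band."""
    return average_score(segment_scores) >= threshold
```

Under these assumptions, a text whose segments score 0.95 and 0.92 would be classified as highly likely to contain AI-generated text, while one scoring 0.95 and 0.50 would not.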

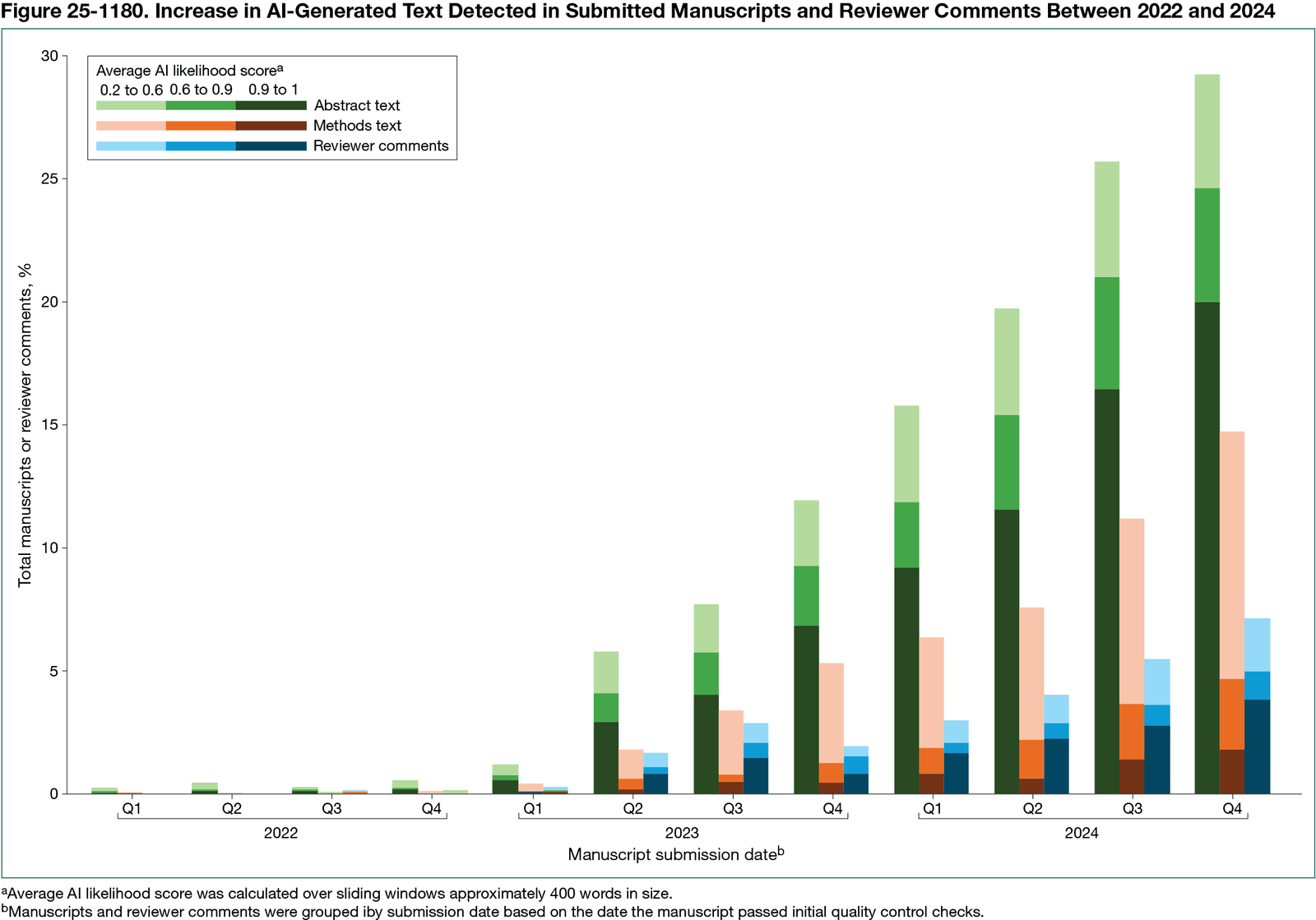

Results

We analyzed 46,500 abstracts, 46,021 methods sections, and 29,544 reviewer comments. In 18,467 manuscripts submitted from 2021 through Q3 2022, only 7 abstracts, and no methods or reviewer comment text, were scored as highly likely to contain AI-generated text (AI likelihood score, 0.9 to 1). There were very few false positives (abstract: 0.22%, 40/18,467; methods: 0.07%, 13/18,303; reviewer comments: 0.03%, 4/12,908). Detection of AI-generated text in all 3 sources increased sharply beginning in Q1 2023, after the public release of ChatGPT in November 2022 (Figure 25-1180). Detections in reviewer comments dropped by about 26% (from 46 to 34) in Q4 2023, after the journals implemented a policy prohibiting use of genAI for peer review, before resuming a linear upward trend. In aggregate, the presence of AI-generated text in 2024 varied greatly between abstracts (23%, 2749/11,959), methods text (10%, 1209/11,875), and reviewer comments (4.8%, 348/7211). There was also substantial journal-dependent variation, from 12% to 15% of abstracts at 3 journals (183/1464, 29/214, 32/214) to 28% to 33% of abstracts at 2 journals (203/716, 438/1342). Authors affiliated with institutions in English-speaking countries were less than half as likely as other authors to use genAI. The presence of AI-generated text was associated with a substantially higher rejection rate before peer review, regardless of institution location. There was a slight association of AI-generated text with lower-rated reviewer comments.
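The reported percentages can be checked against the counts given above. A minimal sketch, in which the labels and the helper name `percent` are illustrative and the counts are copied from the Results:

```python
# (label, flagged texts, total texts), with counts taken from the Results.
REPORTED = [
    ("false positives, abstracts, 2021 to Q3 2022", 40, 18467),
    ("false positives, methods, 2021 to Q3 2022", 13, 18303),
    ("false positives, reviewer comments, 2021 to Q3 2022", 4, 12908),
    ("AI-generated text, abstracts, 2024", 2749, 11959),
    ("AI-generated text, methods, 2024", 1209, 11875),
    ("AI-generated text, reviewer comments, 2024", 348, 7211),
]


def percent(flagged, total):
    """Return flagged/total as a percentage."""
    if total <= 0:
        raise ValueError("total must be positive")
    return 100.0 * flagged / total


if __name__ == "__main__":
    for label, flagged, total in REPORTED:
        print(f"{label}: {flagged}/{total} = {percent(flagged, total):.2f}%")
```

Rounded to the precision used in the text, each computed value matches the percentage reported alongside its counts.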

Conclusions

AI-generated text can be detected in manuscripts and reviewer comments with virtually no false positives. GenAI usage is increasing rapidly, and journal policies to regulate usage may have only a limited impact. Usage varies considerably across journals and text types and appears to be associated with different editorial outcomes.

1American Association for Cancer Research, Philadelphia, PA, US, daniel.evanko@aacr.org.

Conflict of Interest Disclosures

Daniel S. Evanko is a member of the Peer Review Congress Advisory Board but was not involved in the review or decision for this abstract.

Acknowledgment

We thank John Ho for his assistance collecting the data and entering it into the AI detection tool to obtain the AI likelihood scores.