Abstract

Performance and Practicality of a Randomized Clinical Trial Classifier in Systematic Literature Reviews vs a Traditional Approach

Ambar Khan,1 Ania Bobrowska,2 Chloe Coelho,2 Hannah Frost,2 Swati Kumar,2 Hannah Russell,3 Anna Noel-Storr,4 Molly Murton2

Objective

This study aimed to compare a traditional approach (a validated search filter) with a machine learning approach (a classifier) for identifying randomized clinical trials (RCTs) in the context of a real-life systematic literature review (SLR) project.

Design

A real-life SLR was conducted following standard practices as recommended by the Cochrane Handbook,1 covering all identification stages from electronic database searches to full-text review, to identify RCTs on fibrodysplasia ossificans progressiva (FOP; a rare disease). Two approaches were compared for the searches conducted in January 2025: (1) combining population search terms with the Scottish Intercollegiate Guidelines Network (SIGN) RCT search filter (“filter” approach) and (2) using population search terms only and running the results through the Cochrane RCT classifier at a 99% recall threshold (“classifier” approach). All subsequent stages were conducted in an identical manner by the same review team, with both abstracts and full texts dual-screened by independent reviewers against prespecified eligibility criteria. The time taken for each approach was measured, and reviewer feedback was gathered via a modified NASA Task Load Index. Performance metrics, including accuracy, sensitivity, specificity, and precision, were calculated for each approach. The McNemar test was used to assess the statistical significance of differences in these performance metrics, and a P value <.05 was considered statistically significant.
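The performance metrics and the paired McNemar comparison described above can be sketched in Python. This is a minimal illustration, not the authors' analysis code: the `Confusion`, `metrics`, and `mcnemar` names are hypothetical, the counts in the usage lines are invented and are not the study's data, and the abstract does not state whether a continuity correction or an exact binomial McNemar test was used (this sketch assumes the chi-square form with an optional Edwards continuity correction).

```python
import math
from dataclasses import dataclass


@dataclass
class Confusion:
    """Screening decisions against a reference standard (RCT vs non-RCT)."""
    tp: int  # true RCTs flagged as RCT
    fp: int  # non-RCTs flagged as RCT
    tn: int  # non-RCTs correctly excluded
    fn: int  # true RCTs missed


def metrics(c: Confusion) -> dict:
    """Accuracy, sensitivity (recall), specificity, and precision."""
    total = c.tp + c.fp + c.tn + c.fn
    return {
        "accuracy": (c.tp + c.tn) / total,
        "sensitivity": c.tp / (c.tp + c.fn),
        "specificity": c.tn / (c.tn + c.fp),
        "precision": c.tp / (c.tp + c.fp),
    }


def mcnemar(b: int, c: int, correction: bool = True) -> tuple:
    """McNemar chi-square test on the discordant pairs of a paired design.

    b: records classified correctly by approach A but not by approach B
    c: records classified correctly by approach B but not by approach A
    Returns (statistic, two-sided P value) from a chi-square with 1 df.
    """
    if b + c == 0:
        return 0.0, 1.0  # no discordant pairs: no evidence of a difference
    diff = abs(b - c) - (1 if correction else 0)  # Edwards correction
    stat = max(diff, 0) ** 2 / (b + c)
    # Survival function of chi-square(1): P(X > x) = erfc(sqrt(x / 2))
    p = math.erfc(math.sqrt(stat / 2))
    return stat, p


# Illustrative, made-up counts (not the study's data).
example = Confusion(tp=90, fp=10, tn=880, fn=20)
print(metrics(example))
# Among true RCTs: 30 flagged only by approach A, 5 flagged only by approach B.
print(mcnemar(b=30, c=5))
```

When comparing sensitivity, the discordant pairs come only from records that are true RCTs in the reference standard; when comparing specificity, only from true non-RCTs. For production analyses, `statsmodels.stats.contingency_tables.mcnemar` provides both exact and chi-square versions.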

Results

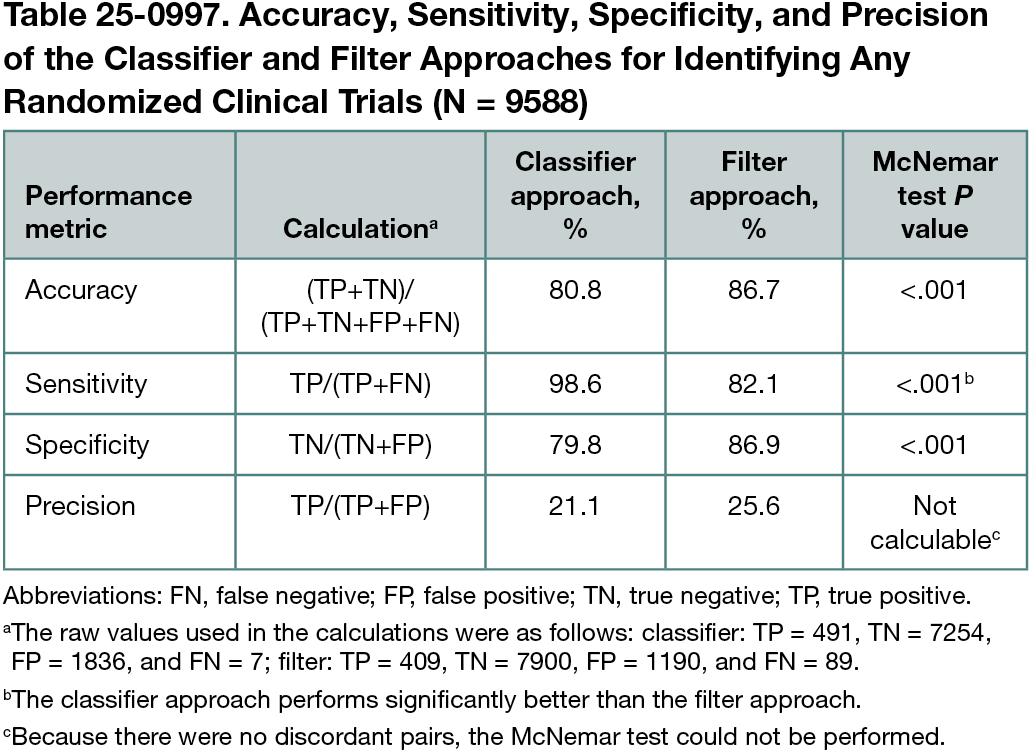

Prior to application of the classifier or filter, 9588 records were retrieved for the FOP population. The classifier and filter identified 2327 and 1582 abstracts as RCTs, respectively. At the end of the full-text review stage, both approaches identified the same 10 papers reporting on FOP RCTs, demonstrating equal effectiveness in finding relevant papers in the context of a real-life SLR. However, when the classification of RCT vs non-RCT publications was assessed across all 9588 records (irrespective of ultimate inclusion in the SLR), sensitivity was significantly higher for the classifier than for the filter (McNemar test P < .001; Table 25-0997). The trade-off for this higher sensitivity was a significantly lower specificity: the classifier passed more records on to screening, and the filter approach required 31% less time than the classifier approach (17.86 vs 25.79 hours). The classifier approach was also rated less favorably in the effort and frustration domains of the modified NASA Task Load Index.
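The same 7.93-hour gap translates into different percentages depending on which approach is taken as the baseline. A short Python check, using only the hours and record counts reported in the Results (the variable names are illustrative), shows both framings alongside the difference in the number of records passed to screening:

```python
# Screening hours and records flagged as RCTs, as reported in the Results.
classifier_hours = 25.79
filter_hours = 17.86
classifier_records = 2327
filter_records = 1582

extra_time = classifier_hours - filter_hours  # about 7.93 hours

# Using the filter as the baseline: the classifier took about 44% longer.
more_vs_filter = extra_time / filter_hours
# Using the classifier as the baseline: the filter took about 31% less time.
less_vs_classifier = extra_time / classifier_hours
# The classifier passed about 47% more records on to screening.
extra_records = (classifier_records - filter_records) / filter_records

print(f"Classifier vs filter: {more_vs_filter:.0%} more time")
print(f"Filter vs classifier: {less_vs_classifier:.0%} less time")
print(f"Classifier vs filter: {extra_records:.0%} more records to screen")
```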

Conclusions

For SLRs, where identifying all relevant evidence is crucial, the classifier performed better than a traditional search filter because of its higher sensitivity. However, this came at the cost of reduced specificity and increased time. Further testing of machine learning classifiers to augment literature review processes in real-life projects, including other and larger disease areas, is required. The risk of bias arising from the researchers not being blinded to the approach should also be noted.

Reference

1. Higgins J, Thomas J, Chandler J, Cumpston M, Li T, Page MJ, et al. Cochrane Handbook for Systematic Reviews of Interventions Version 6.3. Cochrane; 2022.

1Costello Medical, London, UK; 2Costello Medical, Cambridge, UK, molly.murton@costellomedical.com; 3Costello Medical, Manchester, UK; 4Cochrane, London, UK.

Conflict of Interest Disclosures

Ambar Khan, Ania Bobrowska, Chloe Coelho, Hannah Frost, Swati Kumar, Hannah Russell, and Molly Murton reported being employees of Costello Medical. No other disclosures were reported.

Funding/Support

This study was funded by Costello Medical.

Role of the Funder/Sponsor

Costello Medical supported all aspects of the study, including the design and conduct of the study; collection, management, analysis, and interpretation of the data; preparation, review, or approval of the abstract; and the decision to submit the abstract for presentation.