Perception of Open Science Practices on Reproducibility Among Reviewers of Grant Proposals at Research Funding Organizations

Abstract

Ayu Putu Madri Dewi,1 Nicholas J. DeVito,2 Gowri Gopalakrishna,1,3 Inge Stegeman,4,5 Mariska Leeflang1

Objective

To improve research reproducibility, referees of grant proposals could assess the expected reproducibility of a proposed project. A simple Open Science checklist may assist referees in making this assessment. This study examines whether referees predict the reproducibility of research proposals more accurately with an Open Science checklist than without one.1

Design

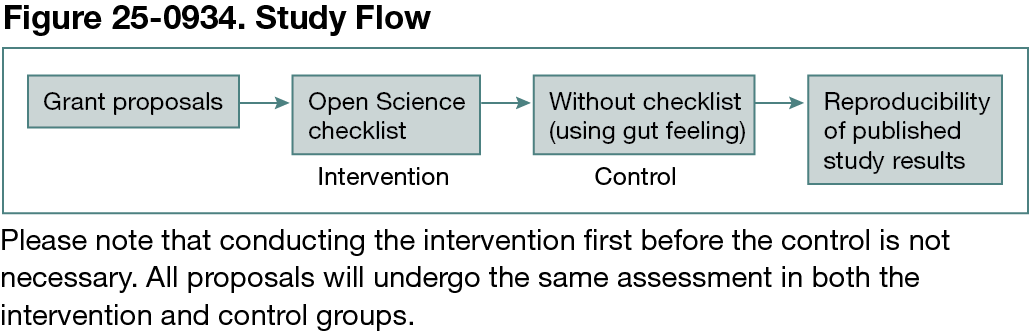

This is a nonrandomized controlled trial and a comparative prediction study using a minimum of 100 granted research proposals and their corresponding published outputs (α = 5%, power = 80%, correlation = 0.6).2 The study focuses on empirical research with quantitative data across diverse fields. A mock grant review began on April 7, 2025, with a minimum of 4 referees divided into 2 groups: one using an Open Science checklist and one reviewing without it. Each proposal will be assessed under both conditions (Figure 25-0934). The checklist, developed in a previous study3 and adapted for grant proposal evaluation, serves as the intervention. Referees are blinded to final study outcomes to minimize bias. The primary outcome is predictive accuracy, assessed using the area under the curve (AUC) from receiver operating characteristic (ROC) analysis. Referees’ predictions will be compared with the actual reproducibility of the published studies. Open Science scores are numerical (0-14), while reproducibility outcomes are binary (yes or no). The AUC will be estimated from logistic regression and displayed as ROC curves. Comparative predictive accuracy will be calculated for both groups. Sensitivity analyses will explore variations in checklist scoring and study reproducibility across different research fields. A database including grant proposal data, Open Science scores, reproducibility status, and analytical code in R will be shared in an open repository. We hypothesize that referees without the checklist will predict reproducibility no better than random chance (with an AUC of approximately 0.50), while those using the checklist will achieve an AUC of approximately 0.65, indicating improvement while acknowledging that the checklist is not a perfect tool.
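As an illustration of the primary outcome, the following minimal sketch computes an AUC for a numerical score (0-14) against a binary reproducibility outcome. It is not the study's analysis code, which is written in R; Python is used here only for illustration. The function name `auc`, the variable names, and all data values are hypothetical, and the sketch assumes that referees without the checklist also give a rating on a 0-14 scale. The sketch uses the rank-based (Mann-Whitney) form of the AUC. With a single predictor and a positive fitted coefficient, a logistic regression is a monotone transformation of the score, so its AUC should equal the value computed directly from the scores.

```python
from typing import Sequence


def auc(scores: Sequence[float], outcomes: Sequence[int]) -> float:
    """Rank-based AUC: the probability that a randomly chosen reproducible
    study (outcome 1) has a higher score than a randomly chosen
    non-reproducible study (outcome 0). Ties count as one half."""
    pos = [s for s, y in zip(scores, outcomes) if y == 1]
    neg = [s for s, y in zip(scores, outcomes) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one study in each outcome class")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))


# Hypothetical data for 8 proposals, invented for illustration only.
reproducible = [1, 0, 1, 1, 0, 0, 1, 0]          # 1 = yes, 0 = no
checklist_scores = [11, 4, 9, 7, 7, 3, 12, 8]    # Open Science scores, 0-14
control_ratings = [6, 5, 6, 4, 7, 5, 6, 6]       # assumed 0-14 ratings

auc_checklist = auc(checklist_scores, reproducible)
auc_control = auc(control_ratings, reproducible)

print(f"AUC with checklist:    {auc_checklist:.3f}")
print(f"AUC without checklist: {auc_control:.3f}")
print(f"Difference:            {auc_checklist - auc_control:.3f}")
```

An AUC near 0.50 corresponds to prediction no better than chance, which is the hypothesized value for referees without the checklist. A formal comparison of the two groups would also need to account for the pairing of proposals across conditions, which this sketch does not do.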

Results

As of June 5, 2025, a total of 15 mock grant reviewers have completed 89 mock reviews across 60 unique grant proposals: 46 reviews in the checklist group and 43 in the control group. Twenty-nine proposals were reviewed in both groups, forming a fully paired subset. Analysis is ongoing.

Conclusions

We will share our results with funding agencies to explore integrating the Open Science checklist into grant review processes to enhance reproducibility.

References

1. Parker TH, Griffith SC, Bronstein JL, et al. Empowering peer reviewers with a checklist to improve transparency. Nat Ecol Evol. 2018;2(6):929-935. doi:10.1038/s41559-018-0545-z

2. Fraser H, Bush M, Wintle BC, et al. Predicting reliability through structured expert elicitation with the repliCATS (Collaborative Assessments for Trustworthy Science) process. PLoS One. 2023;18(1):e0274429. doi:10.1371/journal.pone.0274429

3. Dewi APM, Rethlefsen M, Schroter S, et al. Measuring the efficacy of an intervention to improve reproducibility of scientific manuscripts: study protocol for a randomized controlled trial. OSFHOME. October 31, 2024. Accessed July 2, 2025. https://osf.io/b5g6y

1Amsterdam UMC, Epidemiology and Data Science Department, Amsterdam, The Netherlands; 2Bennett Institute for Applied Data Science, Nuffield Department of Primary Care Health Sciences, University of Oxford, Oxford, UK, nicholas.devito@phc.ox.ac.uk; 3Department of Epidemiology, Faculty of Health, Medicine, and Life Sciences, Maastricht University, The Netherlands; 4Department of Otorhinolaryngology and Head & Neck Surgery, University Medical Center Utrecht, The Netherlands; 5Brain Center, University Medical Center Utrecht, The Netherlands.

Conflict of Interest Disclosures

None reported.