Citation Context Analysis of Retracted Articles: Leveraging Retraction Reasons to Track Unreliability in Citing Literature

Abstract

Yagmur Ozturk,1 Frédérique Bordignon,2,3 Cyril Labbé,1 François Portet1

Objective

To address the challenge of tracking the use of unreliable sources in the scientific literature, we analyzed citations to retracted articles (CRAs) using retraction reasons (RRs) and citation context (CC) analysis. Our aim was to inform the development of automated tools for postpublication peer review (PPPR) by identifying instances in which a CRA is not only an unreliable source but may also, depending on how it is cited, introduce unreliability into the citing work itself. We examined the degree to which the content of the CC aligns with the RR, as an indicator of potential risk to the integrity of the citing article.

Design

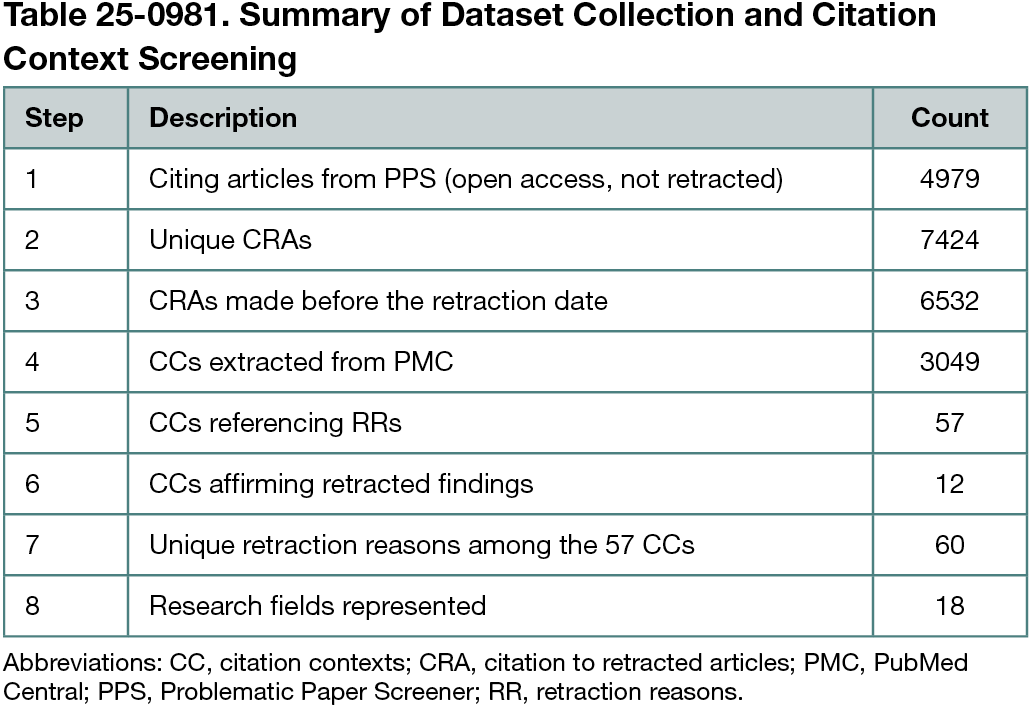

RRs were obtained from the Retraction Watch database1 for a set of 22,558 publications, retrieved from the Problematic Paper Screener,2 that cite retracted sources. We selected 29 of the 108 RRs that relate directly to the scientific content of the article (eg, unreliable or fabricated data or results). To ensure access to CCs, we limited the dataset to open access citing articles and excluded citing articles that had themselves been retracted. This yielded 4979 citing articles and 7424 unique CRA instances, 88% of which were preretraction citations. We then restricted the remaining analysis to a subset of 3049 CCs from the PubMed Central (PMC) Open Access Subset.3 We used regular expressions to search the CCs for terms referring to concepts related to the RRs (eg, “data,” “results,” “findings”) and manually validated how these terms were used.
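To make the filtering and term-search steps more concrete, the Python sketch below shows one way they could be implemented. It is not the authors' code: the `Citation` record, the reason labels in `RR_TERMS`, and the term lists are hypothetical stand-ins (the actual 29 RRs and search terms are not listed in this abstract), and every regex hit would still require manual validation, as in the study.

```python
import re
from dataclasses import dataclass
from datetime import date

# Hypothetical reason labels and search terms; the study's actual 29 RRs
# and term lists are not reproduced here.
RR_TERMS = {
    "Unreliable Data": ["data", "dataset"],
    "Unreliable Results": ["results", "findings"],
    "Fabrication of Data": ["data"],
}


@dataclass
class Citation:
    """One citing-article -> retracted-article link with its citation context."""
    citing_id: str
    retracted_id: str
    retraction_reasons: list[str]
    citing_date: date
    retraction_date: date
    citing_is_open_access: bool
    citing_is_retracted: bool
    context: str


def keep(c: Citation) -> bool:
    """Keep open access citing articles that have not themselves been retracted."""
    return c.citing_is_open_access and not c.citing_is_retracted


def is_preretraction(c: Citation) -> bool:
    """Treat a citation as preretraction if the citing work predates the retraction."""
    return c.citing_date < c.retraction_date


def matching_terms(c: Citation) -> list[str]:
    """Return RR-related terms that occur as whole words in the citation context."""
    terms = sorted({t for rr in c.retraction_reasons for t in RR_TERMS.get(rr, [])})
    return [
        t for t in terms
        if re.search(rf"\b{re.escape(t)}\b", c.context, flags=re.IGNORECASE)
    ]


def candidates_for_review(citations: list[Citation]) -> list[tuple[Citation, list[str]]]:
    """Filter citations, then keep those whose context mentions an RR-related term.

    Each returned pair still needs manual validation of how the term is used.
    """
    out = []
    for c in citations:
        if not keep(c):
            continue
        hits = matching_terms(c)
        if hits:
            out.append((c, hits))
    return out
```

Whole-word matching avoids spurious hits inside longer words (a context mentioning a "database" does not match "data"), but it cannot tell whether the term actually refers to the retracted work, which is why a manual validation step remains necessary.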

Results

Of the 3049 CCs, we identified 57 that explicitly mentioned the specific issue stated in the RR. Among these, 12 emphasized similarity or consistency with the retracted findings; we interpret these instances as potential indicators of unreliability in the citing paper that merit PPPR. The 57 CCs span 18 research fields and involve 60 distinct RRs (some overlapping with the selected 29). The cases are distributed across a range of publishers and fields, offering a basis for developing automated systems.
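A rough calculation from the reported counts shows how narrow the funnel is, from all analyzed CCs to RR-aligned CCs and then to cases judged to merit PPPR:

```latex
\[
\frac{57}{3049} \approx 1.9\%, \qquad
\frac{12}{57} \approx 21\%, \qquad
\frac{12}{3049} \approx 0.4\%
\]
```

That is, roughly 1 in 5 RR-aligned contexts, and about 4 in 1000 of all analyzed contexts, fell into the category of concern.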

Conclusions

While retracted publications are unreliable sources, we argue that how the retracted work is used, and why it was retracted, matter more than the mere presence of a retracted reference in a publication. When a retracted source is used to support the citing work, its detection calls for a secondary assessment. By combining RRs with CC analysis, our approach informs a practical method for flagging such cases for PPPR and supports the development of tools for large-scale assessment of CRAs.
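As one illustration of how RRs and CCs might be combined in an automated first pass, the Python sketch below assigns a citation context to one of three hypothetical triage levels: no RR-related term, an RR-related term only, or an RR-related term together with a phrase signaling consistency with the cited work. The cue patterns, the `triage` function, and the level names are assumptions made for this example, not the authors' criteria.

```python
import re

# Hypothetical cue patterns suggesting the citing work aligns itself with the
# cited (retracted) result; illustrative only, not the study's criteria.
CONSISTENCY_PATTERNS = [
    r"\bconsistent with\b",
    r"\bin line with\b",
    r"\bin agreement with\b",
    r"\bsimilar to\b",
    r"\bagree(?:s|d)? with\b",
    r"\bconfirm(?:s|ed|ing)?\b",
]


def triage(context: str, rr_terms: list[str]) -> str:
    """Assign a citation context to a hypothetical triage level.

    'none'    : no RR-related term in the context
    'mention' : an RR-related term appears, but no consistency cue
    'review'  : an RR-related term plus a consistency cue (candidate for PPPR)
    """
    has_term = any(
        re.search(rf"\b{re.escape(t)}\b", context, flags=re.IGNORECASE)
        for t in rr_terms
    )
    if not has_term:
        return "none"
    has_cue = any(
        re.search(p, context, flags=re.IGNORECASE) for p in CONSISTENCY_PATTERNS
    )
    return "review" if has_cue else "mention"


if __name__ == "__main__":
    examples = [
        "Our results are consistent with those reported previously [4].",
        "The data reported in [4] were later retracted.",
        "Previous work has explored this topic [4].",
    ]
    for ctx in examples:
        print(triage(ctx, ["data", "results"]), "|", ctx)
```

Surface cues like these are crude: a negated phrase such as "not consistent with" would still be routed to "review", so the output is better treated as a prioritized queue for human reading than as a verdict.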

References

1. The Center for Scientific Integrity. Retraction Watch Database.

2. Cabanac G, Labbé C, Magazinov A. The ‘Problematic Paper Screener’ automatically selects suspect publications for post-publication (re)assessment. arXiv. Preprint posted online October 7, 2022. doi:10.48550/arXiv.2210.04895

3. National Library of Medicine. PMC Open Access Subset. Accessed January 30, 2025. https://pmc.ncbi.nlm.nih.gov/tools/openftlist/

1Univ. Grenoble Alpes, CNRS, Grenoble INP, LIG, Grenoble, France, yagmur.ozturk@univ-grenoble-alpes.fr; 2Ecole Nationale des Ponts et Chaussées, Institut Polytechnique de Paris, Marne-la-Vallée, France; 3LISIS, INRAE, Univ Gustave Eiffel, CNRS, Marne-la-Vallée, France.

Conflict of Interest Disclosures

None reported.

Funding/Support

We acknowledge the NanoBubbles project, which has received Synergy grant funding from the European Research Council within the European Union’s Horizon 2020 program (grant agreement number 951393) (https://cordis.europa.eu/project/id/951393).