Abstract

Characterizing Major Issues in ClinicalTrials.gov Results Submissions

Heather D. Dobbins,1 Cassiah Cox,1 Tony Tse,1 Rebecca J. Williams,1 Deborah A. Zarin1

Objective

The ClinicalTrials.gov results database provides public access to aggregate results data for nearly 27,000 clinical studies (as of June 2017). Results submissions must include all prespecified primary and secondary outcomes and all serious adverse events, and must not include subjective narratives. Results submissions are validated through 3 components: (1) structured data elements, (2) automated system-based checks, and (3) manual review by ClinicalTrials.gov staff. Since 2009, ClinicalTrials.gov has refined its manual review criteria, which distinguish major issues (which must be corrected or addressed) from advisory issues. The goal is to avoid apparent errors, deficiencies, and inconsistencies and to ensure complete, sensible entries that can be understood by readers of the medical literature. We set out to characterize the type and frequency of major issues identified by manual review of results submissions.

Design

A sample of initial results submissions was first reviewed by ClinicalTrials.gov staff according to standard review procedures. A second reviewer then examined a convenience subsample to assess agreement and to categorize major issues using categories derived from the results review criteria. Each unique major issue was counted only once per submission.

Results

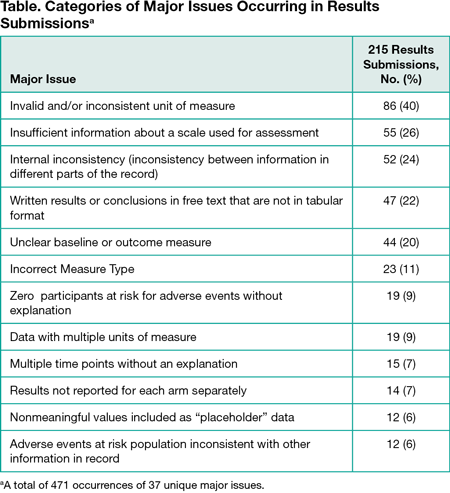

Among 358 initial results submissions from the 4-week period between July 19 and August 15, 2015, 240 (67%) had major issues. In a convenience subsample of 215 submissions (114 [53%] nonindustry and 101 [47%] industry), we identified 471 occurrences of 37 unique major issue categories, with a mean (SD) of 2.2 (1.3) unique major issues per submission overall (industry, 1.9 [1.2]; nonindustry, 2.5 [1.4]). The 12 most common major issue categories accounted for 398 (85%) of all major issue occurrences (Table). The 5 most common major issues, each occurring in ≥20% of submissions, were an invalid and/or inconsistent unit of measure (86 [40%]), insufficient information about a scale (55 [26%]), internal inconsistency (52 [24%]), written results or conclusions (47 [22%]), and an unclear baseline or outcome measure (44 [20%]).

Conclusions

Most major issues (85%) identified in a convenience sample of results submissions to ClinicalTrials.gov could be described by only 12 categories. Limitations of this analysis include the use of a convenience sample and the assessment of major issues by 2 reviewers sequentially rather than independently. Further research is needed to confirm the generalizability of these findings, with the aim of improving the validation process, developing targeted support materials, and improving results reporting on the platform.

1ClinicalTrials.gov, National Library of Medicine, National Institutes of Health, Bethesda, MD, USA, heather.dobbins@nih.gov

Conflict of Interest Disclosures:

All authors report working for ClinicalTrials.gov. Dr Zarin is a member of the Peer Review Congress Advisory Board but was not involved in the review or decision of this abstract.

Funding/Support:

Supported by the Intramural Research Program of the National Library of Medicine, National Institutes of Health.

Role of the Funder:

Four authors are full-time employees (HDD, TT, RJW, DZ), and 1 author is a contractor (CC) of the National Library of Medicine. The National Library of Medicine has approved this submission.